ViT Attention, Infinite LLMs, and Age-Warping Friends with AI

This week's advancements in AI: focus, flow, and fun

This post was originally published on Substack and was sent to our readers by email. You should subscribe!

Subscribe or follow me on Twitter for more content like this!

Hi friends,

First, I’d like to welcome all our new readers - 28% of you have joined in the last 4 days! If you’re new, I’d love to hear what you’re working on and how I can help.

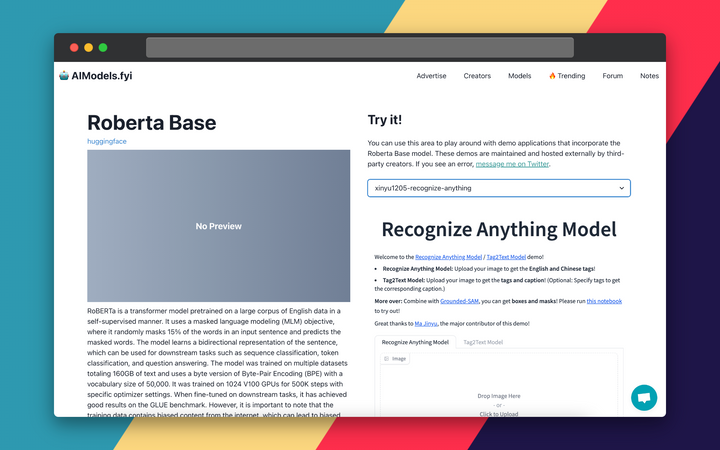

Now, on to business. First, let’s look at some of the most interesting AI models on the site right now. My top picks this week:

- 🏥 MedNER-CR-JA - MedNER-CR-JA is a model for named entity recognition (NER) of Japanese medical documents. It is designed to identify and classify specific entities such as diseases, symptoms, treatments, and anatomical terms in the text.

- 🖼 JoJoGAN - JoJoGAN is a deep learning model that is designed for one-shot face stylization. It takes an input image of a person's face and generates a stylized version of the face based on a given reference image. Check out the guide for more!

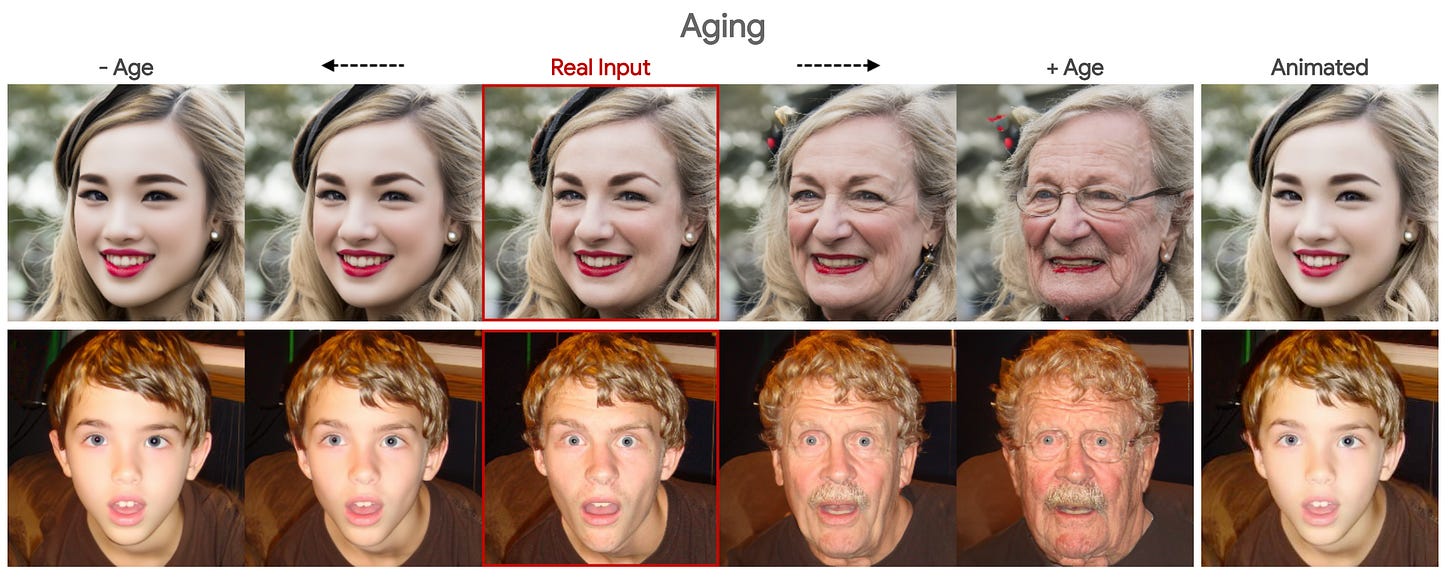

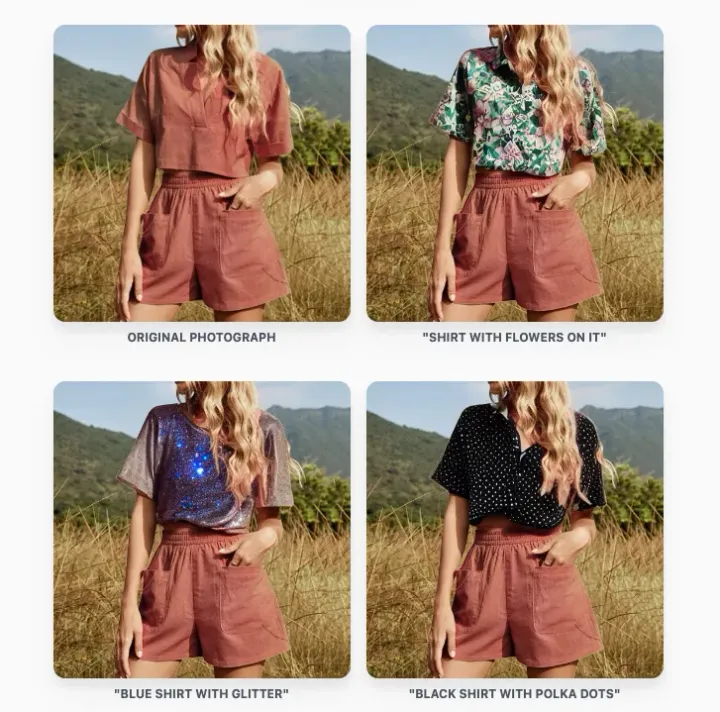

- 🥸 DiffAE - Diffusion Autoencoders (DiffAE) is a model that is used for image manipulation. It leverages the power of autoencoder architectures and combines it with a diffusion process to generate high-quality and realistic image modifications. Check out my guide to using DiffAE to make your friends look bald, happy, young, blond, old - you name it!

There have also been some crazy AI research findings. They’re part of Plain English Papers, a new format for me. Basically, I summarize technical papers in plain English. Here’s my picks for this week:

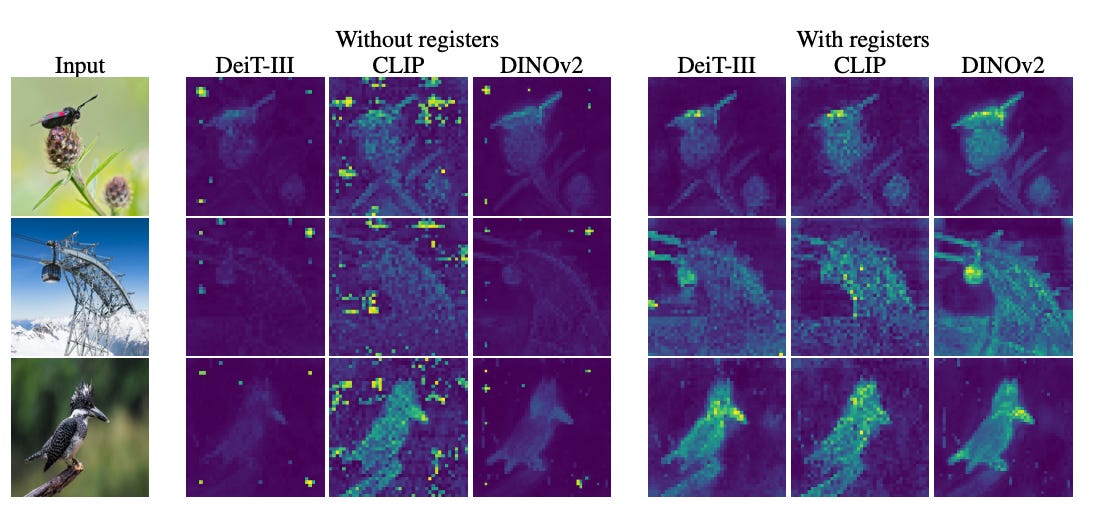

- 😎 Researchers discover explicit registers eliminate vision transformer attention spikes - When visualizing the inner workings of vision transformers (ViTs), researchers noticed weird spikes of attention on random background patches. Here's how they fixed them. (Mike’s pick for best read! 💯)

- ♾ LLMs can be extended to infinite sequence lengths without fine-tuning - StreamingLLM is an efficient framework that enables LLMs trained with a finite length attention window to generalize to infinite sequence length without any fine-tuning. Here’s how it works.

- 🎥 UNC Researchers Present VideoDirectorGPT: Using AI to Generate Multi-Scene Videos from Text - The key innovation proposed is decomposing multi-scene video generation into two steps: a director step and a "film" step. I talk a bit about this on Twitter here.

Those first two papers have some exciting parallels around registers and explicit memory. Does anyone have any insight on that? I’d like to cover it in my next post.

Two asks to wrap this one up:

- AIModels.fyi is free, so I’m looking for a sponsor. Click here to book a slot and get your product in front of thousands of AI devs and founders!

- If you’re new: I’d love to hear more about what you’re working on. Hit reply and let me know and I may feature you in a Build in Public post.

Till next time,

Mike

Subscribe or follow me on Twitter for more content like this!

Comments ()