Grading Vicuna: An open-source alternative to Llama, Alpaca, and ChatGPT for chat AI

Comparing Vicuna to alternative LLMs like ChatGPT, LLaMA, and Alpaca. Which is the best AI model for building chat apps?

As an AI startup founder, you likely know how impactful large language models like ChatGPT have been in advancing conversational AI. However, with commercial licensing costs, censorship issues, degraded performance, privacy concerns, and opaque black-box behavior, these proprietary models remain out of reach for many startups.

This is where an open-source project called Vicuna comes in. Developed by a team of researchers from institutions like Stanford, Vicuna is an open-source conversational model that, according to a preliminary evaluation using GPT-4 as a judge, achieves over 90% of ChatGPT's quality. This makes it an exciting alternative to closed-off models like GPT-4.

Subscribe or follow me on Twitter for more content like this!

In this article, we'll explore what Vicuna is, how it works, its capabilities, and how you may be able to leverage it as an AI founder. We'll see how it stacks up to competitors like ChatGPT and the base LLaMA model. I'll also share some helpful tips and guides you can follow for more information about specific aspects of the model.

We'll focus on Vicuna-13b in this article, but Vicuna comes in several sizes you can try. Let's begin!

Note: Vicuna isn't the only LLaMA-family option for chat. Check out our guides on using LLaMA v2, Alpaca, and LLaMA-v2-chat for conversational applications.

An overview of Vicuna

In the world of conversational AI, we've seen astounding progress recently, with models like ChatGPT demonstrating remarkable natural language abilities. However, as a proprietary model with all the issues described above, ChatGPT remains a poor fit for many developers. So there's a need for more accessible and open models that can empower innovation in conversational apps.

This is where Vicuna comes in. Developed by researchers from leading institutions like Stanford, Berkeley, and MBZUAI, Vicuna represents cutting-edge open conversational AI. It was created by fine-tuning the LLaMA model on user-shared ChatGPT conversations, demonstrating the power of transfer learning from an open foundation model. Despite its comparatively small size, Vicuna comes close to ChatGPT's conversational quality and, in its authors' GPT-4-judged evaluation, outperforms other open models such as LLaMA and Alpaca.

But it's not just about the technology with Vicuna. What makes it truly impactful is its availability under a non-commercial research license. This opens up access to state-of-the-art conversational AI that was previously restricted to only large tech companies. We finally have an open model that can power the next generation of chatbots, virtual assistants, conversational search engines and other innovative applications.

Vicuna's promise has already been demonstrated through cool projects in its orbit. For example, MiniGPT-4 and LLaVA both use Vicuna as the language model behind multimodal assistants that can chat about images, and ToolLLaMA applies the same kind of LLaMA fine-tuning to tool use and API calling. And of course, you may add your own project to this list one day!

For AI developers and startups, Vicuna represents an exciting new opportunity. Its high capability, free availability, and open research license enable rapid prototyping of conversational apps. Instead of being gated by access to proprietary models, startups can now validate ideas and prototype products with cutting-edge conversational AI. The playing field just got leveled.

So in summary, Vicuna promises to democratize access to top-tier conversational intelligence. Its emergence represents an important milestone in making open-source AI models that empower innovation. For any startup looking to leverage conversational AI, Vicuna is definitely a project worth paying attention to!

How was Vicuna created? What makes it special?

The story of Vicuna begins with LLaMA, a foundation language model released by Meta AI. While capable, LLaMA was trained only to predict the next token in general text, so it had no built-in conversational abilities. Researchers from institutions including Stanford, Berkeley, and MBZUAI set out to change this. Their goal was to create an open-source conversational model rivaling proprietary chatbots like ChatGPT.

By the way, what's the difference between an AI model trained to process and generate general text (like LLaMA) and one specialized for chat (like Vicuna)? A few key factors differentiate the two:

- Architecture - Surprisingly, not much. Vicuna keeps LLaMA's decoder-only transformer exactly as it is; there is no separate encoder for the conversation. Instead, the conversation history and the new user message are flattened into a single text sequence using a fixed prompt template, and the model generates its response by continuing that sequence. The difference lies in what the weights have learned, not in the structure.

- Training Objective - LLaMA was pretrained to predict the next token across a broad corpus of general text. Vicuna is then fine-tuned on dialog data, so it learns to answer the user rather than to continue arbitrary text.

- Multi-Turn Capabilities - Conversational models need to handle multi-turn conversations, maintaining context and coherence across multiple exchanges. Vicuna was trained on full multi-turn conversations with a 2,048-token context (up from the 512 tokens used for Alpaca), whereas a base model like LLaMA has no built-in notion of turns at all.

- Interactivity - Conversational AI needs to respond fluidly to follow-up questions and clarifications. A base model simply continues whatever text it is given, which makes it far less interactive.

- Evaluation - Conversational models require evaluation beyond standard text metrics, testing qualities like consistency, nuance, and the ability to gracefully handle inappropriate requests.

In short, a general language model like LLaMA is trained to continue text, while a dialog agent must track a conversation, respond helpfully to a user, and behave appropriately. That is a harder and more specific goal, and it is what fine-tuning has to teach.
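Under the hood, Vicuna uses the same decoder-only transformer as LLaMA, so a multi-turn chat has to be flattened into one text sequence that the model then continues. The sketch below shows what that flattening might look like. It assumes the Vicuna v1.1 prompt format from FastChat (the Vicuna team's open-source training and serving code); `SYSTEM_PROMPT` and `buildVicunaPrompt` are hypothetical names, and other Vicuna versions may expect a slightly different template, so check the model card for the version you use.

```javascript
// Hypothetical helper: flatten a multi-turn chat into one prompt string,
// modeled on the Vicuna v1.1 template from FastChat (an assumption: verify
// against the model card of the Vicuna version you deploy).
const SYSTEM_PROMPT =
  "A chat between a curious user and an artificial intelligence assistant. " +
  "The assistant gives helpful, detailed, and polite answers to the user's questions.";

/**
 * @param {{user: string, assistant: string}[]} history completed exchanges
 * @param {string} userInput the new user message
 * @returns {string} a single text sequence for the model to continue
 */
function buildVicunaPrompt(history, userInput) {
  let prompt = SYSTEM_PROMPT + " ";
  for (const turn of history) {
    // Each finished assistant reply ends with the end-of-sequence token </s>
    prompt += `USER: ${turn.user} ASSISTANT: ${turn.assistant}</s>`;
  }
  // Leave the final "ASSISTANT:" open so the model writes the next reply
  prompt += `USER: ${userInput} ASSISTANT:`;
  return prompt;
}

// Usage example
const history = [{ user: "Hi!", assistant: "Hello! How can I help?" }];
console.log(buildVicunaPrompt(history, "Name a famous knight."));
```

Notice that nothing conversational lives in the architecture: the "memory" of earlier turns is just text in the prompt, which is why the context window size matters for long chats.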

To that end, the researchers who created Vicuna fine-tuned LLaMA on about 70,000 user-shared ChatGPT conversations collected from ShareGPT.com, specializing the model for conversation and teaching it how text behaves in conversational contexts. This fine-tuning was crucial for giving their version of LLaMA the conversational intelligence it previously lacked. Through training on the dialog data, LLaMA was transformed into Vicuna: the same decoder-only transformer, now tuned for multi-turn conversations. How well did their efforts work?
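The Vicuna team reports computing the fine-tuning loss only on the chatbot's output, so the model is never trained to imitate the user's side of a conversation. Here is a minimal sketch of that idea, assuming a toy whitespace tokenizer in place of LLaMA's real one and made-up per-token probabilities in place of a model. `buildTrainingExample`, `maskedLoss`, and the `-100` ignore value (borrowed from PyTorch's cross-entropy convention) are illustrative choices, not FastChat's actual code.

```javascript
// Minimal sketch: compute the training loss only on the assistant's words.
// Assumptions: whitespace splitting stands in for LLaMA's real tokenizer, and
// -100 mirrors the "ignore this position" convention of PyTorch's cross-entropy.
const IGNORE_INDEX = -100;

// Turn a conversation into token ids plus per-token labels.
// Role tags ("USER:", "ASSISTANT:") and user text are masked out as context.
function buildTrainingExample(turns, vocab) {
  const inputIds = [];
  const labels = [];
  for (const { role, text } of turns) {
    const words = [`${role}:`, ...text.split(/\s+/)];
    words.forEach((word, j) => {
      if (!vocab.has(word)) vocab.set(word, vocab.size);
      const id = vocab.get(word);
      inputIds.push(id);
      const isTarget = role === "ASSISTANT" && j > 0;
      labels.push(isTarget ? id : IGNORE_INDEX);
    });
  }
  return { inputIds, labels };
}

// Average negative log-likelihood over unmasked positions only.
// tokenProbs[i] is the probability a model assigned to token i given the
// tokens before it (made-up numbers in this sketch, not real model output).
function maskedLoss(labels, tokenProbs) {
  let total = 0;
  let count = 0;
  labels.forEach((label, i) => {
    if (label === IGNORE_INDEX) return; // user and role-tag tokens add nothing
    total += -Math.log(tokenProbs[i]);
    count += 1;
  });
  return count === 0 ? 0 : total / count;
}

// Usage example
const vocab = new Map();
const { labels } = buildTrainingExample(
  [
    { role: "USER", text: "Who are you?" },
    { role: "ASSISTANT", text: "A humble knight." },
  ],
  vocab
);
console.log(labels.join(", ")); // → -100, -100, -100, -100, -100, 5, 6, 7
```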

Into the arena: Evaluating Vicuna against alternative chat AI models

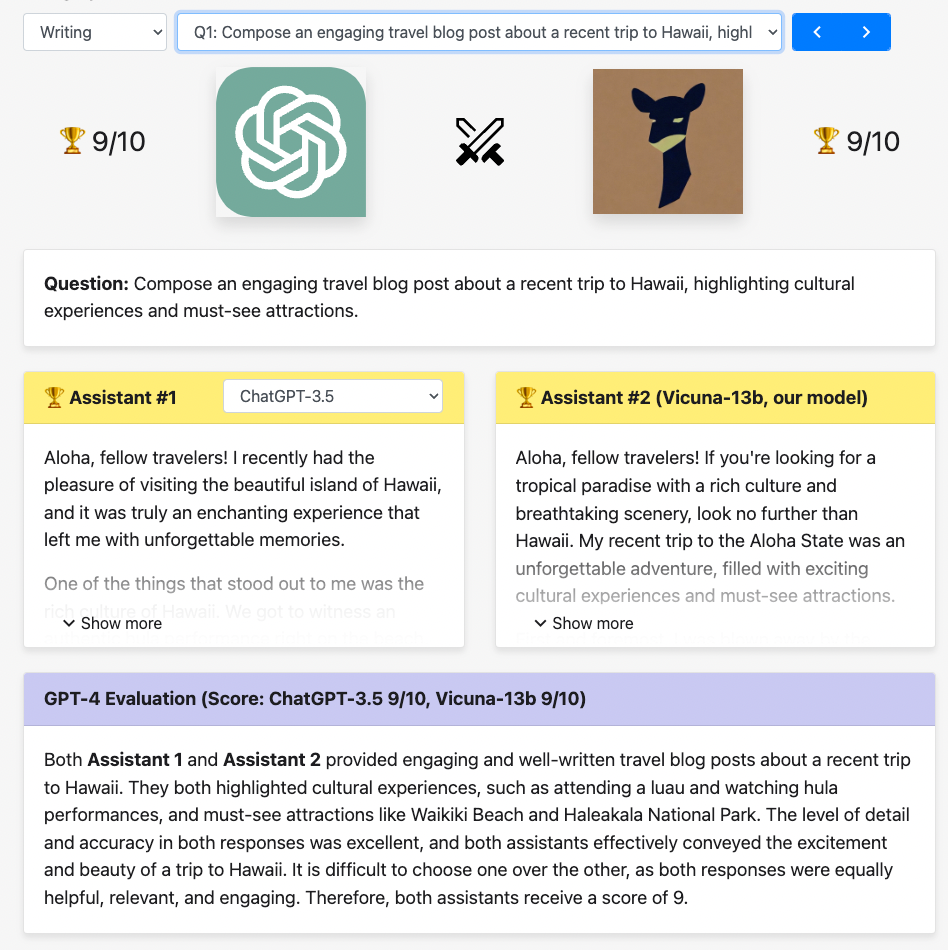

To evaluate Vicuna's capabilities, the researchers tested it on a set of 80 diverse questions spanning categories such as roleplay, writing, coding, and math. GPT-4 then acted as a judge, comparing Vicuna's answers with those of other chatbots and scoring each one.

According to this GPT-4-judged evaluation, Vicuna achieved over 90% of ChatGPT's quality in conversational ability, despite its comparatively modest size. It also significantly outperformed the original LLaMA model.

By combining training optimizations (a longer context window, memory-saving techniques, and a loss computed only on the assistant's replies) with fine-tuning on real user conversations, the researchers turned LLaMA into an open-source conversational model that comes close to proprietary chatbots.
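The "90% of ChatGPT" figure is a relative score. As I understand the LMSYS write-up, GPT-4 gave each pair of answers a score from 1 to 10, and Vicuna's total score was compared with ChatGPT's. The sketch below shows that arithmetic under a few assumptions: the judge's reply starts with a line holding the two scores (such as "7 9"), ChatGPT is always Assistant 1, and the function names and sample replies are hypothetical rather than taken from the real evaluation.

```javascript
// Minimal sketch of a "relative quality" score from pairwise judge replies.
// Assumptions: each judge reply starts with a line holding two scores
// ("<assistant 1> <assistant 2>", e.g. "7 9"), Assistant 1 is always the
// reference model (ChatGPT) and Assistant 2 the candidate (Vicuna).

// Read the two numeric scores from the first line of a judge reply
function parseJudgeScores(reply) {
  const firstLine = reply.trim().split("\n")[0];
  const scores = firstLine.trim().split(/\s+/).map(Number);
  if (scores.length !== 2 || scores.some(Number.isNaN)) {
    throw new Error(`Could not parse scores from: "${firstLine}"`);
  }
  return scores;
}

// Candidate's total score divided by the reference's total score
function relativeQuality(judgeReplies) {
  let referenceTotal = 0;
  let candidateTotal = 0;
  for (const reply of judgeReplies) {
    const [reference, candidate] = parseJudgeScores(reply);
    referenceTotal += reference;
    candidateTotal += candidate;
  }
  return candidateTotal / referenceTotal;
}

// Usage example with made-up judge replies (not real evaluation data)
const replies = [
  "9 8\nAssistant 2 was slightly less detailed...",
  "10 9\nBoth answers were accurate...",
];
console.log(`${(relativeQuality(replies) * 100).toFixed(1)}% of the reference`);
```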

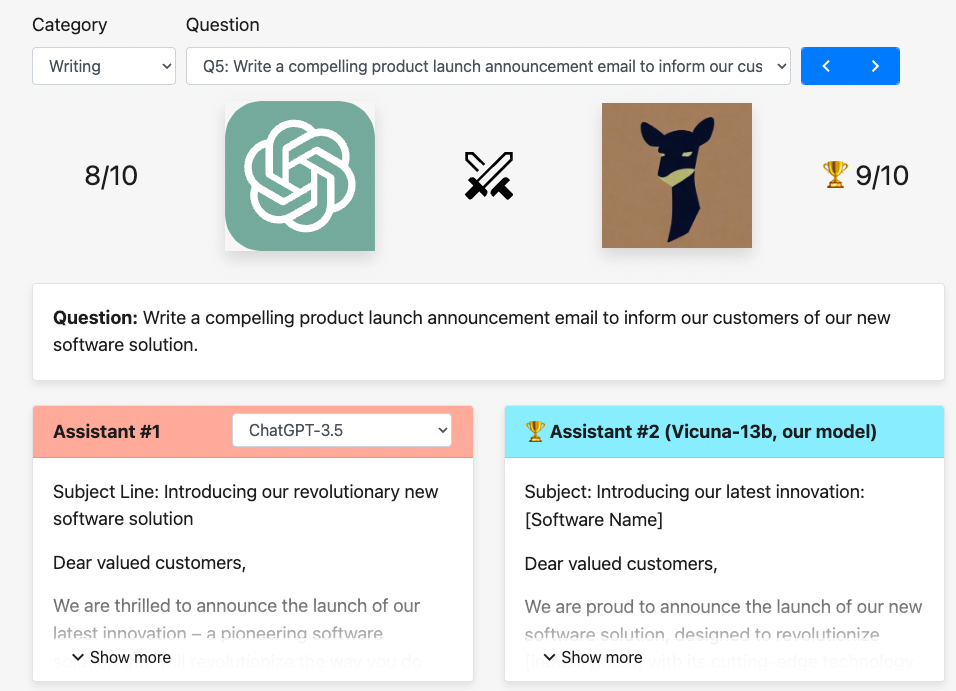

Let's take a look at a head-to-head test as an example, taken from the official project site.

The project site has a collection of example tasks on which Vicuna-13b and competing models go head-to-head. GPT-4 then acts as a judge, scoring each model and stating its reasoning. This produces some really eye-opening comparisons. One example is shown in the image above. Another is this challenge, shown below:

- Category: Roleplay

- Question: How would you introduce yourself as a medieval knight at a royal banquet?

LLaMA-13b's response:

"I am a valiant knight, loyal to the king. I will do my utmost to defend his honor and protect his country." (Lame! GPT-4 scored it 7/10, which is very generous in my opinion.)

Vicuna-13b's response:

"As a medieval knight, I would introduce myself at a royal banquet with a deep bow and a respectful speech, acknowledging my humble origins and my loyalty to the crown. I would say something like:

'My lords and ladies, I am a humble knight, sworn to serve and protect the realm. I come from a long line of warriors who have fought to defend this kingdom for generations. I am honored to be here today, in the presence of such esteemed company, and I hope to earn your trust and respect with my deeds and actions. I pledge my sword, my shield, and my life to the service of my king and my people.'

I would then take my seat at the banquet, ready to listen and learn from the wise counsel of my betters, and to serve them in any way I can."

Whoa! That's a much better response from Vicuna. Here's GPT-4's reasoning for why it preferred Vicuna's answer over LLaMA's:

Assistant 1 [LLaMA] provided a brief and relevant response, but it lacked the detail and formality expected in a medieval knight's introduction. Assistant 2 [Vicuna], on the other hand, offered a more comprehensive and well-structured response, including a respectful speech that showcased the knight's humility, loyalty, and dedication to the kingdom. This response was more fitting for the context of a royal banquet and demonstrated a better understanding of the medieval knight's role and etiquette.

LLMs are not infallible judges of other LLMs, but neither are humans. I think using AI to evaluate AI is a scalable and reasonably consistent way to decide which alternatives are best.
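That said, LLM judges have known quirks. One is position bias: a judge may favor whichever answer it reads first, an effect LMSYS documented in its own later research on LLM-as-a-judge. A common mitigation, sketched below, is to ask the judge twice with the answers swapped and average the scores. Here `judge` is a hypothetical async function returning `[scoreForFirst, scoreForSecond]`; in practice it would wrap a GPT-4 API call plus score parsing, and this is not necessarily how the Vicuna evaluation handled it.

```javascript
// Sketch: reduce position bias by judging each pair of answers in both orders.
// `judge` is a hypothetical async function (question, firstAnswer, secondAnswer)
// that resolves to [scoreForFirst, scoreForSecond].
async function debiasedScores(judge, question, answerX, answerY) {
  const [x1, y1] = await judge(question, answerX, answerY); // X shown first
  const [y2, x2] = await judge(question, answerY, answerX); // Y shown first
  // Averaging splits a constant first-position bias evenly between both answers
  return { x: (x1 + x2) / 2, y: (y1 + y2) / 2 };
}

// Usage with a fake judge that adds +1 to whichever answer it sees first
const fakeQuality = { short: 6, detailed: 9 };
const fakeJudge = async (_question, first, second) => [
  fakeQuality[first] + 1,
  fakeQuality[second],
];
debiasedScores(fakeJudge, "Introduce yourself as a knight.", "short", "detailed")
  .then(console.log); // → { x: 6.5, y: 9.5 }
```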

You should check out some of the other competitor answers and tasks on the LMSYS.org site.

Let's try it ourselves: How to build a basic chatbot with Vicuna

Now that we've seen how the model stacks up against some alternatives, let's build a simple chatbot that we can interact with from the command line, using Node.js and the Vicuna-13b model hosted on Replicate. You can expand on these steps to build your own chatbot for your AI project!

Step 1: Setup

Install Node.js: Make sure Node.js is installed on your system.

Create a Project and Install the Replicate Client: Run the following in your terminal:

```shell
mkdir my-chatbot
cd my-chatbot
npm init -y
npm install replicate
```

Set Your API Token: Replace your_api_token_here with your actual Replicate API token:

```shell
export REPLICATE_API_TOKEN=your_api_token_here
```

Step 2: Writing the Chatbot Code

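Create a file named chatbot.js and add the following code:

```javascript
// chatbot.js: a minimal command-line chatbot backed by Vicuna-13b on Replicate
const readline = require("readline");
const Replicate = require("replicate");

// The client reads the token you exported in Step 1
const replicate = new Replicate({
  auth: process.env.REPLICATE_API_TOKEN,
});

// Send a prompt to Vicuna-13b and return the generated text
async function generateResponse(prompt) {
  const output = await replicate.run(
    "replicate/vicuna-13b:version_hash_here",
    {
      input: { prompt: prompt },
    }
  );
  // Language models on Replicate typically return an array of text chunks,
  // so join them into one string (an array has no `items` property)
  return Array.isArray(output) ? output.join("") : String(output);
}

const rl = readline.createInterface({
  input: process.stdin,
  output: process.stdout,
});

// Ask for input, print the model's reply, then ask again (type "exit" to quit)
function askQuestion() {
  rl.question("You: ", async (userInput) => {
    if (userInput.trim().toLowerCase() === "exit") {
      rl.close();
      return;
    }
    try {
      const botResponse = await generateResponse(userInput);
      console.log(`Bot: ${botResponse}`);
    } catch (err) {
      // Report API or network errors instead of crashing the loop
      console.error(`Error: ${err.message}`);
    }
    askQuestion();
  });
}

askQuestion();
```

Replace version_hash_here with the version hash of the Vicuna-13b model, which is listed on the model's Replicate page.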

Step 3: Running the Chatbot

Run the chatbot by executing:

```shell
node chatbot.js
```

You can now send a message to your chatbot through the command line!
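As written, the chatbot sends each message on its own, so Vicuna never sees earlier turns and can't answer follow-up questions. One way to fix that is to keep a running history and send it with every request, trimming the oldest exchanges so the prompt stays within the model's context window. The `ChatHistory` class below is a hypothetical sketch: it assumes roughly 4 characters per token and a plain `USER:`/`ASSISTANT:` transcript format, and whether the hosted model already wraps your prompt in its own template is something to check on its Replicate page.

```javascript
// Hypothetical sketch: keep recent turns so each request carries context.
// Assumptions: ~4 characters per token (a rough heuristic, not the real
// tokenizer) and a plain "USER: ... / ASSISTANT: ..." transcript format.
class ChatHistory {
  // Default budget leaves headroom for the reply, assuming a 2,048-token context
  constructor(maxPromptTokens = 1500) {
    this.maxPromptTokens = maxPromptTokens;
    this.turns = []; // completed exchanges: { user, assistant }
  }

  static estimateTokens(text) {
    return Math.ceil(text.length / 4);
  }

  // Build a transcript ending in an open "ASSISTANT:" slot, dropping the
  // oldest exchanges until the estimate fits (the newest message is always kept)
  buildPrompt(userInput) {
    const lines = this.turns.map((t) => `USER: ${t.user}\nASSISTANT: ${t.assistant}`);
    const tail = `USER: ${userInput}\nASSISTANT:`;
    while (
      lines.length > 0 &&
      ChatHistory.estimateTokens([...lines, tail].join("\n")) > this.maxPromptTokens
    ) {
      lines.shift(); // forget the oldest exchange first
    }
    return [...lines, tail].join("\n");
  }

  // Save a finished exchange so later prompts include it
  record(userInput, reply) {
    this.turns.push({ user: userInput, assistant: reply });
  }
}

// Usage example
const history = new ChatHistory();
history.record("Who are you?", "A humble knight of the realm.");
console.log(history.buildPrompt("Where do you come from?"));
```

To wire it into chatbot.js, you could create one `ChatHistory` instance at startup, then inside `askQuestion` call `history.buildPrompt(userInput)`, pass the result to `generateResponse`, and call `history.record(userInput, botResponse)` once the reply arrives.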

Don't want to build your own chatbot? You can use this demo to assess how Vicuna performs

The model details page for Vicuna-13b-v1.3 includes a couple of demos you can use to play around with the model.

One of these demos, built by zeno-ml, lets you compare Vicuna's outputs with those of models like LLaMA, GPT-2, and MPT while varying temperature and other generation parameters.
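If you're wondering what the temperature knob in that demo actually changes: during generation, the model's raw scores (logits) for candidate next tokens are divided by the temperature before being turned into probabilities with softmax. The sketch below uses made-up logits to show the effect; it illustrates the standard technique rather than any particular model's sampling code.

```javascript
// Sketch of temperature scaling: divide logits by T, then apply softmax.
// The logits below are made up for illustration.
function softmaxWithTemperature(logits, temperature) {
  if (temperature <= 0) throw new RangeError("temperature must be > 0");
  const scaled = logits.map((l) => l / temperature);
  const max = Math.max(...scaled); // subtract the max for numerical stability
  const exps = scaled.map((s) => Math.exp(s - max));
  const sum = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / sum);
}

// Usage example: compare the same logits at three temperatures
const logits = [2.0, 1.0, 0.1];
for (const t of [0.5, 1.0, 2.0]) {
  const probs = softmaxWithTemperature(logits, t).map((p) => p.toFixed(3));
  console.log(`T=${t}: ${probs.join(", ")}`);
}
```

Lower temperatures concentrate probability on the top token, giving more predictable replies, while higher ones spread it out, giving more varied (and more error-prone) ones.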

Vicuna's limitations

While conversational technologies have advanced rapidly, models still face important challenges.

- One issue is knowledge grounding. Conversational agents lack sufficient grounding in real factual knowledge, making them prone to plausible-sounding but incorrect responses. More grounding in the real world could improve accuracy.

- Reasoning abilities are another area for improvement. Logical reasoning, causal inference, and mathematical operations remain difficult for chatbots; the Vicuna team itself notes that the model is not good at tasks involving reasoning or mathematics.

- Evaluating the conversational quality of AI systems at scale also poses difficulties. Current solutions, like asking a separate AI judge such as GPT-4, have flaws of their own. Developing rigorous evaluation frameworks is an open problem.

- Additionally, biases and safety issues persist due to reliance on imperfect training data. Conversational models can sometimes behave inappropriately or unsafely. Better training data curation is important.

- Adapting chatbots to specific users and use cases also remains challenging. Different domains need more personalization and customization than you can easily get out of the box.

While great progress has been made, these limitations highlight key areas for improvement. Advancing knowledge grounding, reasoning, evaluation, training data, and customization could enable the next level of conversational intelligence with models like Vicuna.
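For the knowledge-grounding problem in particular, a common approach is retrieval: look up relevant reference text and place it in the prompt, with instructions to answer only from it. Vicuna doesn't do this on its own, so the sketch below is a swapped-in technique under simple assumptions: a tiny in-memory list of documents and naive word-overlap scoring stand in for a real search index or embedding-based retriever, and `wordSet`, `retrieve`, and `groundedPrompt` are hypothetical names.

```javascript
// Minimal sketch of grounding: put retrieved reference text into the prompt
// and instruct the model to rely on it. Assumptions: a small in-memory list of
// documents and naive word-overlap scoring stand in for a real search index.

// Lowercase a string and return its set of alphanumeric words
function wordSet(text) {
  return new Set(text.toLowerCase().match(/[a-z0-9]+/g) || []);
}

// Return up to k documents sharing the most words with the question
function retrieve(docs, question, k = 2) {
  const q = wordSet(question);
  return docs
    .map((doc) => {
      const words = wordSet(doc);
      let overlap = 0;
      for (const w of q) if (words.has(w)) overlap += 1;
      return { doc, overlap };
    })
    .filter((d) => d.overlap > 0)
    .sort((a, b) => b.overlap - a.overlap)
    .slice(0, k)
    .map((d) => d.doc);
}

// Build a prompt that asks the model to answer only from the retrieved facts
function groundedPrompt(docs, question) {
  const facts = retrieve(docs, question);
  const context = facts.length
    ? facts.map((f) => `- ${f}`).join("\n")
    : "- (no relevant facts found)";
  return (
    "Answer using only the facts below. If they are not enough, say you don't know.\n" +
    `Facts:\n${context}\n\nQuestion: ${question}`
  );
}

// Usage example with a toy knowledge base
const docs = [
  "Vicuna was fine-tuned from LLaMA.",
  "Alpaca was released by Stanford.",
  "Paris is the capital of France.",
];
console.log(groundedPrompt(docs, "What was Vicuna fine-tuned from?"));
```

The returned prompt is what you would send to the model, for example through the `generateResponse` function from the chatbot tutorial.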

Conclusion: Using Vicuna AI as an open-source alternative to ChatGPT, LLaMA, and other LLMs

The development of Vicuna demonstrates promising progress in advancing open-source conversational AI. By fine-tuning LLaMA on conversation data with a training recipe adapted specifically for dialog, researchers were able to create a freely available conversational agent competitive with leading proprietary alternatives.

However, work remains to be done to address limitations around reasoning, evaluation, customization, and other areas. While models like Vicuna achieve strong results on many benchmarks, they do not fully replicate comprehensive human conversation. Ongoing research on aligning these models will be important.

Nonetheless, Vicuna represents a valuable step forward in democratizing access to state-of-the-art conversational intelligence. For startups and developers building chatbots, assistants, and other applications, open-source options like Vicuna provide welcome capabilities without the licensing costs of proprietary models.

The origins and technical details behind Vicuna offer useful insights into specialized training approaches for conversational AI. As research continues, we can expect to see further innovations that build on these methods. The authors behind Vicuna have made an important contribution in open-sourcing such a capable dialog agent.

While more progress is still needed, Vicuna demonstrates the meaningful results that can come from developing open conversational models. For the AI community, it represents a promising step, not the final destination. With continued work on advancing these technologies, the potential ahead remains exciting.


Further reading and resources

You might find these other articles helpful in your quest to build the ultimate chatbot for your new product or project.

- Vicuna-13b model details - An overview of Vicuna with basic model info

- Building chatbots with Vicuna-13B - An article on using Vicuna to create chatbots

- Comparing LLMs for chat: LLaMA v2 vs Vicuna - A comparison between LLaMA's new version 2 and Vicuna

- LangChain chat models: an overview - LangChain is a popular framework for building chat applications
