Turn text into high-quality, highly detailed anime with Anything-v3-Better-VAE

High-quality, highly detailed anime image generation for artists and creators with Anything-v3-Better-VAE

Are you an anime enthusiast who's always dreamt of creating your own anime-style images? Or perhaps an artist seeking to take your work to the next level of detail and quality? Well, buckle up because I've got something truly mind-blowing for you!

In this post, I'll be introducing you to the incredible world of the Anything-v3-Better-VAE model, which empowers you to create stunning, high-quality, and highly detailed anime-style images with ease. We'll walk through the nitty-gritty of the model, what it can do, and how to use it effectively. And to top it all off, we'll explore the power of Replicate Codex in finding similar models to expand your creative toolbox. Let's dive in!

About the Anything-v3-Better-VAE Model

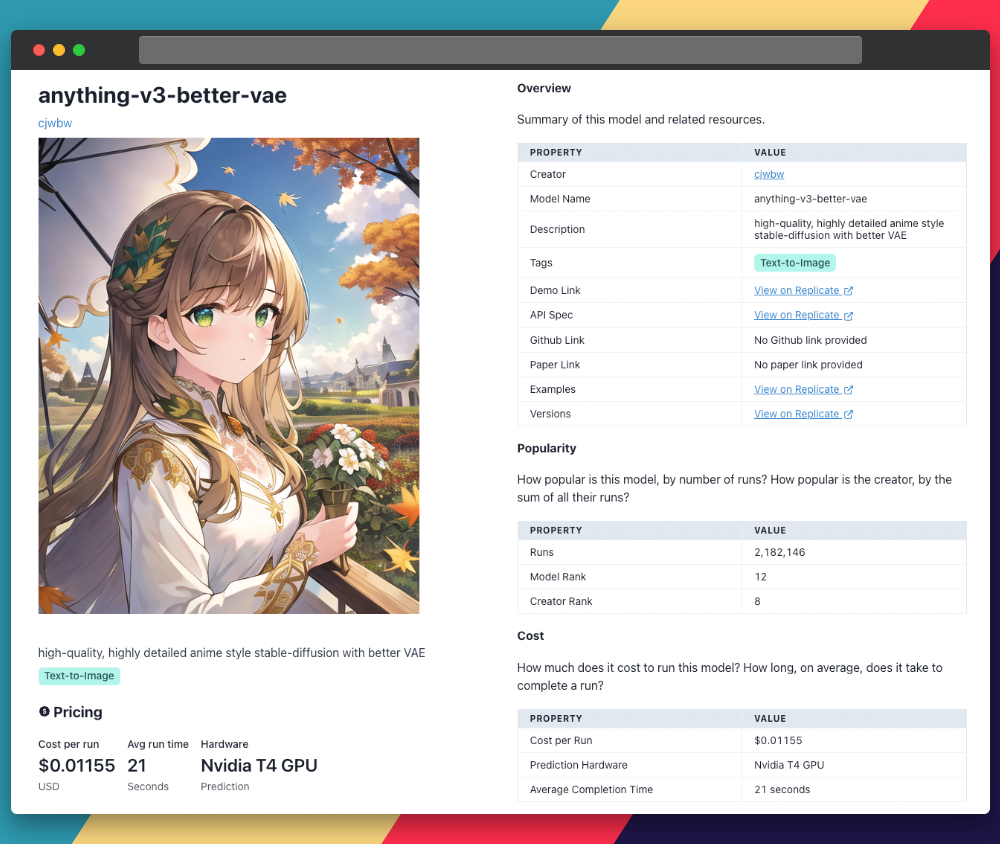

The Anything-v3-Better-VAE model, with more than 2.1M runs, is one of the most popular models on Replicate Codex, ranking 12th in popularity.

It's built on the popular Stable Diffusion model and harnesses the power of an improved Variational Autoencoder (VAE) to generate top-notch anime-style images. A VAE is a neural network architecture that encodes and decodes images to and from a smaller latent space, making computation faster and more efficient.

In the Stable Diffusion model, the diffusion process operates in the autoencoder space rather than pixel space, resulting in a more computationally efficient and memory-friendly approach. This model is especially skilled at handling image distributions, making it a powerful tool for creating anime-style images.
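To make the efficiency gain concrete, here is a rough back-of-the-envelope sketch. It assumes the VAE layout used by Stable Diffusion v1-style models (8x downsampling per side and 4 latent channels); the post itself does not state these numbers for Anything-v3-Better-VAE, so treat them as an assumption. The function names are illustrative only.

```javascript
// Assumption: Stable Diffusion v1-style VAE (8x spatial downsampling, 4 latent channels).
const DOWNSAMPLE_FACTOR = 8;
const LATENT_CHANNELS = 4;
const RGB_CHANNELS = 3;

// Shape of the latent tensor the diffusion process would work on.
function latentShape(width, height) {
  if (width % DOWNSAMPLE_FACTOR !== 0 || height % DOWNSAMPLE_FACTOR !== 0) {
    throw new RangeError("width and height must be multiples of 8");
  }
  return {
    width: width / DOWNSAMPLE_FACTOR,
    height: height / DOWNSAMPLE_FACTOR,
    channels: LATENT_CHANNELS,
  };
}

// How many times fewer values the latent holds compared with the RGB image.
function compressionRatio(width, height) {
  const latent = latentShape(width, height);
  const pixelValues = width * height * RGB_CHANNELS;
  const latentValues = latent.width * latent.height * latent.channels;
  return pixelValues / latentValues;
}

console.log(latentShape(512, 512)); // { width: 64, height: 64, channels: 4 }
console.log(compressionRatio(512, 512)); // 48
```

Under these assumptions, the denoising steps touch 48 times fewer values than they would in pixel space, which is where the speed and memory savings come from.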

The Anything-v3-Better-VAE model takes this one step further by improving the VAE's decoding capabilities. The result? Finer details are better recovered, making it perfect for rendering images where intricate details matter, such as eyes and text.

However, the model isn't perfect. Because the training dataset is filtered and the autoencoder compresses images aggressively, it can still struggle to render legible text compared with models like DALL-E. For most anime-style images, though, this shouldn't pose a significant problem.

Now that you have a grasp of what the Anything-v3-Better-VAE model is all about, let's dive into understanding its inputs and outputs before we move on to using the model to create our very own high-quality, detailed anime-style images.

Understanding the Inputs and Outputs of the Anything-v3-Better-VAE Model

Inputs

The model accepts several input parameters, such as:

prompt: The input prompt for the image generation.

negative_prompt: The prompt or prompts not to guide the image generation (what you don't want to see in the generation). Ignored when not using guidance.

width: Width of the output image. Maximum size is 1024x768 or 768x1024 due to memory limits.

height: Height of the output image. Maximum size is 1024x768 or 768x1024 due to memory limits.

num_outputs: Number of images to output.

num_inference_steps: Number of denoising steps.

guidance_scale: Scale for classifier-free guidance.

scheduler: Choose a scheduler (e.g., DDIM, K_EULER, DPMSolverMultistep, K_EULER_ANCESTRAL, PNDM, KLMS).

seed: Random seed. Leave blank to randomize the seed.
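As a rough illustration of how these parameters fit together, the sketch below assembles an input object and checks it against the limits described above. The helper name `buildInput` and the exact validation rules are my own assumptions (in particular, reading "1024x768 or 768x1024" as a cap on each side and on total pixels); the model may enforce stricter rules on its side.

```javascript
// Scheduler names taken from the parameter description above.
const SCHEDULERS = ["DDIM", "K_EULER", "DPMSolverMultistep", "K_EULER_ANCESTRAL", "PNDM", "KLMS"];
// Assumed reading of the "1024x768 or 768x1024" limit.
const MAX_SIDE = 1024;
const MAX_PIXELS = 1024 * 768;

// Hypothetical helper: validates options and returns an input object for the model.
function buildInput(options) {
  const { prompt, width, height, scheduler } = options;

  if (typeof prompt !== "string" || prompt.trim() === "") {
    throw new TypeError("prompt must be a non-empty string");
  }
  for (const side of [width, height]) {
    if (side !== undefined && (!Number.isInteger(side) || side < 1 || side > MAX_SIDE)) {
      throw new RangeError(`each side must be an integer between 1 and ${MAX_SIDE}`);
    }
  }
  if (width !== undefined && height !== undefined && width * height > MAX_PIXELS) {
    throw new RangeError("size exceeds the 1024x768 / 768x1024 limit");
  }
  if (scheduler !== undefined && !SCHEDULERS.includes(scheduler)) {
    throw new RangeError(`unknown scheduler: ${scheduler}`);
  }

  // Drop undefined fields so the model's own defaults apply (e.g. a random seed).
  return Object.fromEntries(
    Object.entries(options).filter(([, value]) => value !== undefined)
  );
}

const exampleInput = buildInput({
  prompt: "1girl, brown hair, green eyes",
  width: 768,
  height: 1024,
  scheduler: "DDIM",
  seed: undefined, // left blank on purpose
});
console.log(exampleInput);
```

Leaving `seed` undefined keeps it out of the request entirely, which matches the "leave blank to randomize" behaviour described above.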

Outputs

The model outputs an array of image URIs, where each URI points to a generated image. The output is described by the following JSON schema:

{
  "type": "array",
  "items": {
    "type": "string",
    "format": "uri"
  },
  "title": "Output"
}

Now that we understand the model's inputs and outputs, let's dive into using the model to generate some fantastic anime-style images.
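As a quick aside on that output schema before moving on: a minimal sketch of checking a result against it might look like the following. It assumes the output arrives as plain strings, as the schema describes; `parseOutput` is a hypothetical helper name, and the example URL is a placeholder rather than a real result.

```javascript
// Hypothetical helper: checks that `output` is an array of URI strings
// and returns the parsed URL objects.
function parseOutput(output) {
  if (!Array.isArray(output)) {
    throw new TypeError("expected an array of image URIs");
  }
  return output.map((item, index) => {
    if (typeof item !== "string") {
      throw new TypeError(`item ${index} is not a string`);
    }
    return new URL(item); // throws a TypeError if the string is not a valid URL
  });
}

// Placeholder value standing in for a real model result.
const urls = parseOutput(["https://example.com/out-0.png"]);
console.log(urls[0].pathname); // "/out-0.png"
```

Failing early on an unexpected shape is usually easier to debug than a broken image link further down the line.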

A Step-by-Step Guide to Using the Anything-v3-Better-VAE Model

If you prefer a hands-on approach, you can interact directly with the model's demo on Replicate. This is a great way to play with the model's parameters and get some quick feedback and validation.

For those who are more technical and looking to build a tool on top of this model, follow these simple steps to generate images using the Anything-v3-Better-VAE model on Replicate. In this example, I'll use Node.js to interact with the model on Replicate's platform.

Note: be sure to create a Replicate account and obtain your API token first.

Step 1: Install the Node.js client

Install the Replicate Node.js client using the following command:

npm install replicate

Step 2: Set up your API token

Copy your API token and authenticate by setting it as an environment variable:

export REPLICATE_API_TOKEN=[token]

Step 3: Run the model

Import the Replicate library and run the Anything-v3-Better-VAE model with your desired input parameters:

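// Top-level await requires an ES module (a .mjs file or "type": "module" in package.json).
import Replicate from "replicate";

// Authenticate with the token exported in Step 2.
const replicate = new Replicate({
  auth: process.env.REPLICATE_API_TOKEN,
});

// Run a pinned version of the model with a prompt as the only input.
const output = await replicate.run(
  "cjwbw/anything-v3-better-vae:09a5805203f4c12da649ec1923bb7729517ca25fcac790e640eaa9ed66573b65",
  {
    input: {
      prompt: "masterpiece, best quality, illustration, beautiful detailed, finely detailed, dramatic light, intricate details, 1girl, brown hair, green eyes, colorful, autumn, cumulonimbus clouds, lighting, blue sky, falling leaves, garden"
    }
  }
);

// Print the generated image output.
console.log(output);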
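The call above passes only a prompt. If you want to reuse it with other inputs from the parameter list, one option is a small wrapper like the sketch below. The names `generate`, `client`, and `overrides` are my own, and the negative prompt text is just an illustration; the only thing assumed about the client is that it exposes the same `run(identifier, { input })` method used above.

```javascript
// Version identifier copied from the example above.
const MODEL =
  "cjwbw/anything-v3-better-vae:09a5805203f4c12da649ec1923bb7729517ca25fcac790e640eaa9ed66573b65";

// Hypothetical wrapper: `client` is anything with a run(identifier, { input })
// method, such as the Replicate instance created earlier.
async function generate(client, prompt, overrides = {}) {
  const input = { prompt, ...overrides };
  return client.run(MODEL, { input });
}

// Sketch of usage inside an ES module, with `replicate` set up as above:
//   const output = await generate(replicate, "1girl, brown hair, green eyes", {
//     negative_prompt: "lowres, bad anatomy",
//     width: 512,
//     height: 768,
//     seed: 42,
//   });
```

Passing the client in as an argument also makes the wrapper easy to exercise with a stub, without spending any API credits.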

What do you get when you run the model? If you supply just the default inputs, you'll get a picture just like the one below:

Changing the subject to be an old man instead gives this image:

You can also use AI to clean up imperfections in the image. For example, check out this guide to using CodeFormer to clean up AI-generated pictures!

Taking it Further - Finding Models like Anything-v3-Better-VAE with Replicate Codex

Replicate Codex is an incredible resource for discovering AI models that cater to various creative needs, including image generation, image-to-image conversion, and much more. It's a fully searchable, filterable, tagged database of all the models on Replicate, allowing you to compare models and sort by price or explore by creator. It's free, and it also has a digest email that will alert you when new models come out, so you can try them.

If you're interested in finding similar models to Anything-v3-Better-VAE, follow these steps:

Step 1: Visit Replicate Codex

Head over to Replicate Codex to begin your search for similar models.

Step 2: Use the Search Bar

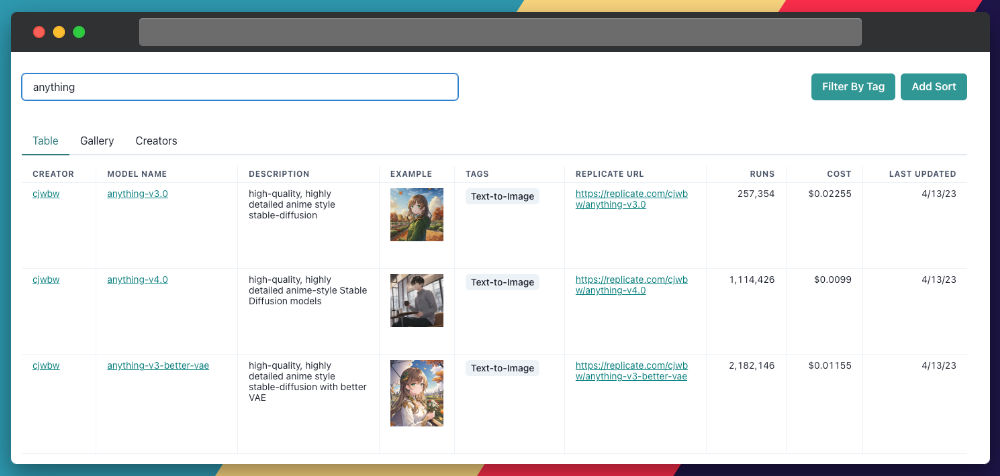

Use the search bar at the top of the page to search for models with specific keywords, such as "anime," "image generation," or "high-quality." This will show you a list of models related to your search query.

Step 3: Filter the Results

On the left side of the search results page, you'll find several filters that can help you narrow down the list of models. You can filter and sort models by type (Image-to-Image, Text-to-Image, etc.), cost, popularity, or even specific creators.

By applying these filters, you can find the models that best suit your specific needs and preferences. For example, if you're looking for an image generation model that's the most popular or the most cost-effective, you can just search and then sort by the relevant metric.

In this case, I searched for "anything" in the search field and found a couple of other models by the same creator. They all appear to be anime-related, so they may be worth exploring next as alternatives for your project.

Conclusion

In this guide, we explored the Anything-v3-Better-VAE model, which excels in generating high-quality, highly detailed anime-style images using an improved VAE. We walked through the step-by-step process of using the model with Replicate, as well as understanding the various inputs and outputs.

We also discussed how to leverage the search and filter features in Replicate Codex to find similar models and compare their outputs, allowing us to broaden our horizons in the world of AI-powered image generation.

I hope this guide has inspired you to explore the creative possibilities of AI and bring your imagination to life. Don't forget to subscribe for more tutorials, updates on new and improved AI models, and a wealth of inspiration for your next creative project. Happy image generating, and enjoy exploring the world of AI with Replicate Codex!

Subscribe or follow me on Twitter for more content like this!
