RIFE Video Interpolation—AI-Powered Magic for Stunning Video Enhancement

A complete guide to RIFE and video interpolation. Using AI to create smoother videos.

The intersection of video and AI has unlocked huge potential for creative expression. New AI models are powerful tools for video editors, content creators, and enthusiasts, enabling them to enhance their work in ways that previously required slow, expensive editing processes.

One tool that's caught my eye in this area is RIFE VFI—which stands for Real-Time Intermediate Flow Estimation for Video Frame Interpolation. The model effectively generates additional frames between existing ones, creating a smoother and more visually pleasing video. The applications for this technology are extensive, from improving the quality of low-frame-rate footage to creating stunning slow-motion effects.

In this article, I'll explore the inner workings of the RIFE Video Interpolation model and show you how to use it on Replicate. We'll also see how we can leverage Replicate Codex to find similar models and compare their outputs, broadening our horizons in the realm of AI-powered video interpolation and enhancement.

So, without further ado, let's delve into the captivating world of video interpolation!

About the RIFE Video Interpolation Model

RIFE Video Interpolation is a project that uses a method called Real-Time Intermediate Flow Estimation to create extra video frames between existing ones, making videos look smoother. According to its authors, it can run at more than 30 frames per second (FPS) when doubling the frame rate (2X interpolation) of a 720p video on a 2080Ti graphics card. RIFE also lets you pick any point in time between two images and generate a frame for that moment, which makes it a versatile tool for people who edit videos and create content.

The model uses advanced AI techniques to estimate the intermediate flow between frames and generate new frames that maintain the visual coherence and smoothness of the original video. This makes it an excellent solution for enhancing video quality, creating slow-motion effects, or simply improving the viewing experience for your audience.
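To make the frame arithmetic concrete, here's a rough sketch in plain JavaScript. The function name `interpolationStats` and the assumption that a fixed number of frames is inserted between every consecutive pair of input frames are mine, for illustration only; this isn't code from RIFE.

```javascript
// Hypothetical helper: what happens to a clip when `intermediatePerPair`
// new frames are inserted between every consecutive pair of input frames.
function interpolationStats(frameCount, fps, intermediatePerPair) {
  // Each of the (frameCount - 1) gaps receives `intermediatePerPair` new frames.
  const outputFrames = frameCount + (frameCount - 1) * intermediatePerPair;
  return {
    outputFrames,
    // Play faster to keep roughly the original duration, with smoother motion.
    smoothFps: fps * (intermediatePerPair + 1),
    // Or keep the original frame rate and get slow motion instead.
    slowMotionSeconds: outputFrames / fps,
  };
}

// Example: a 100-frame clip at 25 FPS with one new frame per gap (2X interpolation).
const stats = interpolationStats(100, 25, 1);
console.log(stats);
```

The same set of generated frames serves both use cases: the only difference is the frame rate you play them back at.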

How does RIFE VFI work?

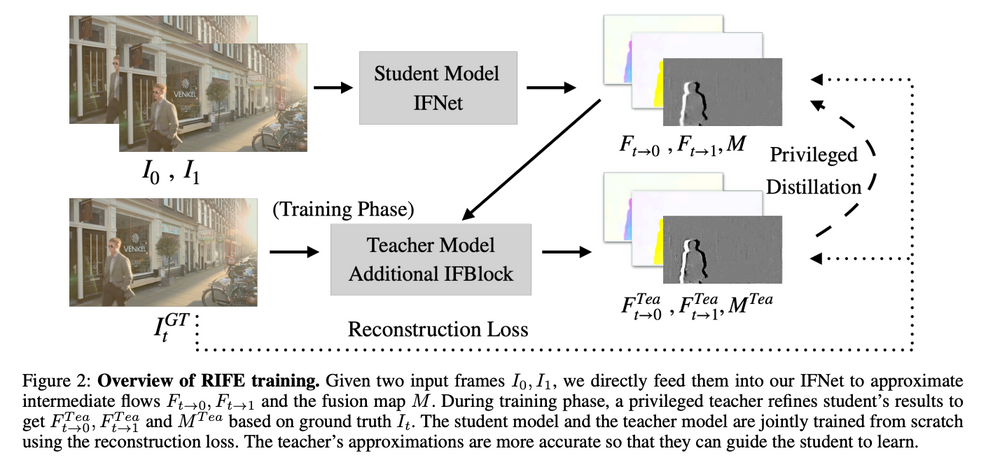

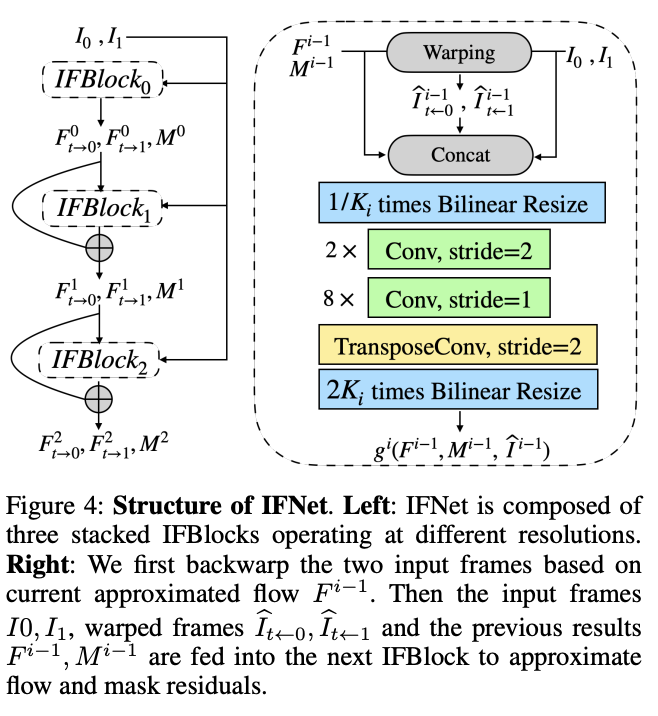

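To achieve high-quality interpolation, RIFE uses a neural network called IFNet. Rather than predicting the new frame's pixels directly, IFNet estimates the "intermediate flows": the motion from the frame being synthesized back to each of the two input frames. Those flows are used to warp the input frames toward the target moment, and the warped results are blended into the final frame. IFNet estimates these flows end-to-end, in a single network that goes straight from the input frames to the flows, instead of first computing flows between the two inputs and then approximating the intermediate ones. This is a big part of why RIFE is much faster than earlier flow-based methods.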
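Here's a toy, one-dimensional sketch of the warp-and-blend idea, in case it helps to see it in code. It is not IFNet: the flows and the blend mask are hand-written rather than predicted by a network, and the helper names `backwardWarp` and `blend` are my own.

```javascript
// Toy 1-D illustration of flow-based interpolation (NOT the real IFNet).
// Backward warp: output pixel x samples the source frame at x + flow[x],
// using nearest-neighbor lookup clamped to the frame borders.
function backwardWarp(frame, flow) {
  return frame.map((_, x) => {
    const src = Math.min(frame.length - 1, Math.max(0, Math.round(x + flow[x])));
    return frame[src];
  });
}

// Blend the two warped frames with a per-pixel mask in [0, 1].
function blend(warped0, warped1, mask) {
  return warped0.map((v, x) => mask[x] * v + (1 - mask[x]) * warped1[x]);
}

// A bright pixel moves from index 1 (frame0) to index 3 (frame1).
const frame0 = [0, 255, 0, 0, 0];
const frame1 = [0, 0, 0, 255, 0];

// Hand-written intermediate flows for the halfway point: each pixel of the
// middle frame looks one step back into frame0 and one step ahead into frame1.
const flowToFrame0 = [-1, -1, -1, -1, -1];
const flowToFrame1 = [1, 1, 1, 1, 1];
const mask = [0.5, 0.5, 0.5, 0.5, 0.5];

const midFrame = blend(
  backwardWarp(frame0, flowToFrame0),
  backwardWarp(frame1, flowToFrame1),
  mask
);
console.log(midFrame); // the bright pixel lands at index 2
```

In the real model, the flows and the mask come out of the network for every pixel of a 2-D image; the sketch only shows why knowing the intermediate flows is enough to build the new frame.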

RIFE also employs a training technique known as "privileged distillation" to train IFNet effectively. During training, a teacher model that can see the real intermediate frame guides the student model, which only sees the two input frames. This helps stabilize the learning process and improves the overall performance of the neural network.

One of the key advantages of RIFE is that it doesn't rely on pre-trained optical flow models. Optical flow models are often used to analyze the motion of objects within videos, but RIFE is capable of being trained from scratch without the need for such pre-trained models. This makes RIFE more flexible and adaptable to different video scenarios.

Another notable feature of RIFE is its ability to create extra frames at any desired time interval between two original frames, a capability known as "arbitrary-timestep frame interpolation." It achieves this using a feature called "temporal encoding input," which allows users to specify the desired time interval for interpolation.
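As a small illustration of what "arbitrary timestep" means in practice, here's a sketch that computes evenly spaced timesteps between two frames, where 0 is the first frame and 1 is the second. The helper name `intermediateTimesteps` is hypothetical, and even spacing is my assumption; an arbitrary-timestep model could be asked for any value between 0 and 1.

```javascript
// Hypothetical helper: evenly spaced timesteps strictly between 0 and 1.
function intermediateTimesteps(count) {
  if (!Number.isInteger(count) || count < 1) {
    throw new RangeError("count must be a positive integer");
  }
  const steps = [];
  for (let k = 1; k <= count; k++) {
    steps.push(k / (count + 1));
  }
  return steps;
}

console.log(intermediateTimesteps(1)); // [ 0.5 ]
console.log(intermediateTimesteps(3)); // [ 0.25, 0.5, 0.75 ]
```

One new frame per gap means a single timestep at the midpoint; three new frames per gap means quarter steps, and so on.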

When tested on several public benchmarks, RIFE demonstrated exceptional performance compared to other popular video frame interpolation methods, such as SuperSlomo and DAIN. Specifically, RIFE was found to be 4 to 27 times faster than these methods while producing superior-quality video interpolation.

Furthermore, the ability of RIFE to handle arbitrary time intervals through temporal encoding means that it has the potential to be used in a wide range of applications beyond just video frame interpolation. Its versatility and high performance make it a valuable tool for video editors, content creators, and various other applications in the media and entertainment industry.

In this post, I'll show you how we can use Replicate to run RIFE with just a few basic API calls and some simple Node.js code. Replicate is a platform that lets you run all kinds of AI models, including many other Video-to-Video models.

Understanding the Inputs and Outputs of the RIFE Video Interpolation Model

Before we start working with RIFE, let's take a look at the model inputs and outputs. In this example, we'll be working with Replicate's RIFE implementation, which runs a model uploaded by pollinations.

Inputs

The RIFE Video Interpolation model requires the following inputs:

video (file): The input video file you want to interpolate.

interpolation_factor (integer): The interpolation factor. For example, a value of 4 means generating 4 intermediate frames for each input frame. The default value is 4.
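If you plan to call the model from your own code, it can help to check these two inputs before sending a request. Here's a minimal sketch; the helper name `buildInput` and the exact validation rules (a non-empty string for the video, an integer of at least 1 for the factor) are my assumptions, not rules published by the model.

```javascript
// Hypothetical helper that assembles the model's input object.
// The checks below are assumptions; the model may accept or reject other values.
function buildInput(video, interpolationFactor = 4) {
  if (typeof video !== "string" || video.length === 0) {
    throw new TypeError("video must be a non-empty string (for example, a URL)");
  }
  if (!Number.isInteger(interpolationFactor) || interpolationFactor < 1) {
    throw new RangeError("interpolation_factor must be an integer of at least 1");
  }
  return { video, interpolation_factor: interpolationFactor };
}

// Placeholder URL for illustration only.
const input = buildInput("https://example.com/clip.mp4");
console.log(input);
```

The returned object has the same shape as the `input` field used in the API calls later in this guide.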

Outputs

The output of the RIFE Video Interpolation model is a URI string containing the generated video with interpolated frames. The output schema from this model is a simple JSON object:

{
  "type": "string",
  "title": "Output",
  "format": "uri"
}

Now that we understand the inputs and outputs of the model, let's dive into a step-by-step guide on how to use it.

A Step-by-Step Guide to Using the RIFE Video Interpolation Model

If you're not up for coding, you can interact directly with the RIFE Video Interpolation model's "demo" on Replicate via their UI. You can use this link to interact directly with the interface and try it out! This is a nice way to play with the model's parameters and get some quick feedback and validation.

If you're more technical and looking to eventually build a cool tool on top of this model, you can follow these simple steps to interpolate your videos using the RIFE Video Interpolation model on Replicate.

Note: Be sure to create a Replicate account first! You'll need your API token for this project.

Step 1: Install the Replicate Node.js Client

Install the Replicate Node.js client by running the following command:

npm install replicate

Step 2: Authenticate with Your API Token

Copy your API token and authenticate by setting it as an environment variable:

export REPLICATE_API_TOKEN=[token]
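A missing token tends to show up later as a confusing authentication error, so I like to check for it up front. Here's a small sketch; the helper name `getReplicateToken` is my own and not part of the Replicate client.

```javascript
// Hypothetical helper: fail early with a clear message if the token is missing.
// `env` defaults to process.env but can be swapped out for testing.
function getReplicateToken(env = process.env) {
  const token = env.REPLICATE_API_TOKEN;
  if (typeof token !== "string" || token.trim() === "") {
    throw new Error("REPLICATE_API_TOKEN is not set; export it before running the script.");
  }
  return token.trim();
}
```

You could then pass `getReplicateToken()` as the `auth` value when creating the client in the next step.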

Step 3: Run the Model

Import the Replicate package and run the RIFE Video Interpolation model using the following code:

// Run this as an ES module (for example, a .mjs file) so top-level await works.
import Replicate from "replicate";

const replicate = new Replicate({
  auth: process.env.REPLICATE_API_TOKEN,
});

const output = await replicate.run(
  "pollinations/rife-video-interpolation:245bd8a7c6179cee7ae745432e1d9e23c74b90232fbd835f9703c53bb372f031",
  {
    // Both model parameters belong inside the input object.
    input: {
      video: "...",
      interpolation_factor: 4, // Change this value as needed
    },
  }
);

In this example we can use a sample video clip as our input. This is shown at this link.

Replicate also allows us to specify a webhook URL that will be called when the prediction finishes. For example:

const prediction = await replicate.predictions.create({
  version: "245bd8a7c6179cee7ae745432e1d9e23c74b90232fbd835f9703c53bb372f031",
  input: {
    video: "..."
  },
  webhook: "https://example.com/your-webhook",
  webhook_events_filter: ["completed"]
});

When the run completes, the result will be available in the JSON format specified above. Here's what our output looks like - note the smoother frame rate.
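If you go the webhook route, your endpoint receives the prediction as a JSON body with fields such as `id`, `status`, and `output`. Here's a minimal sketch of pulling the video URL out of that body. The function name `extractVideoUrl` is hypothetical, and I'm assuming the output arrives as a plain URI string, as the output schema above describes; wiring it into an HTTP server or framework is left out.

```javascript
// Hypothetical helper: read a webhook body and return the output video URL.
// Assumes the body is the prediction object serialized as JSON.
function extractVideoUrl(rawBody) {
  const prediction = JSON.parse(rawBody);
  if (prediction.status !== "succeeded") {
    throw new Error(`Prediction ${prediction.id} ended with status "${prediction.status}"`);
  }
  if (typeof prediction.output !== "string") {
    throw new TypeError("Expected the output to be a URI string");
  }
  // new URL() throws if the string is not a valid absolute URL.
  return new URL(prediction.output).href;
}

// Made-up payload for illustration only.
const sampleBody = JSON.stringify({
  id: "example-id",
  status: "succeeded",
  output: "https://example.com/interpolated.mp4",
});
console.log(extractVideoUrl(sampleBody));
```

Checking `status` first matters because a "completed" webhook also fires for predictions that failed or were canceled, in which case there is no video to fetch.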

Taking it Further - Finding Other Video Interpolation Models with Replicate Codex

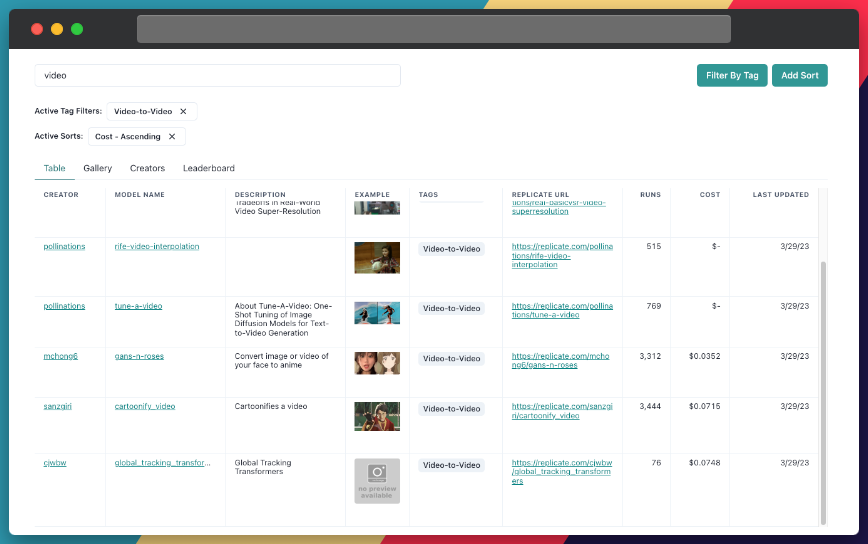

Replicate Codex is a fantastic resource for discovering AI models that cater to various creative needs, including video interpolation, image generation, image-to-image conversion, and much more. It's a fully searchable, filterable, tagged database of all the models on Replicate, and also allows you to compare models and sort by price or explore by the creator. It's free, and it also has a digest email that will alert you when new models come out so you can try them.

If you're interested in finding similar models to RIFE Video Interpolation...

Step 1: Visit Replicate Codex

Head over to Replicate Codex to begin your search for similar models.

Step 2: Use the Search Bar

Use the search bar at the top of the page to search for models with specific keywords, such as "video interpolation," "frame interpolation," or "slow-motion." This will show you a list of models related to your search query.

Step 3: Filter the Results

On the left side of the search results page, you'll find several filters that can help you narrow down the list of models. You can filter and sort models by type (Image-to-Image, Text-to-Image, etc.), cost, popularity, or even specific creators.

By applying these filters, you can find the models that best suit your specific needs and preferences. For example, if you're looking for a video interpolation model that's the cheapest, you can just search and then sort by cost.

Conclusion

In this guide, we explored the RIFE Video Interpolation model and learned how to use it for enhancing our video content. We saw how we can use it to add frames to make our video look smoother, and learned about its inner workings. We also discussed how to leverage the search and filter features in Replicate Codex to find similar models and compare their outputs, allowing us to broaden our horizons in the world of AI-powered video interpolation and enhancement.

I hope this guide has inspired you to explore the creative possibilities of AI and bring your imagination to life. Don't forget to subscribe for more tutorials, updates on new and improved AI models, and a wealth of inspiration for your next creative project. Thanks for reading. Happy video enhancing and exploring the world of AI with Replicate Codex!

