Finally, a model to paint 3D meshes with high-res UV texture maps

Finally, we have an AI tool that can paint high-res UV texture maps onto 3D meshes from text or image prompts.

Texturing 3D models is an important part of creating realistic 3D graphics and environments (I'm working on a game where this is critical right now!). However, creating high-quality textures for 3D models by hand can be tedious and time-consuming. Recent advances in AI are making it possible to generate textures for 3D models automatically from text descriptions or example images.

A new paper from researchers at Tencent presents an AI system called Paint3D that generates high-quality 2K-resolution texture maps for 3D models, conditioned on either text or image prompts. This could significantly improve workflows for 3D artists and designers. Let's see how it works.

Subscribe or follow me on Twitter for more content like this!

The Importance of Texture Maps in 3D Graphics

In 3D computer graphics, a texture map is an image applied to the surface of a 3D model to represent details like color, patterns, and materials. The texture map is wrapped onto the 3D geometry through a process called UV mapping, which flattens the 3D surface into 2D space so that every point on the surface corresponds to a location (its UV coordinates) in the texture image.
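To make UV mapping concrete, here's a minimal sketch of what a renderer does once a surface point has UV coordinates: it looks up a color by bilinearly sampling the texture image. The function name `sample_texture` and the convention (v points up, row 0 is the top of the image) are my own assumptions for illustration; real engines differ in their conventions.

```python
from typing import List, Tuple

Color = Tuple[float, float, float]


def sample_texture(texture: List[List[Color]], u: float, v: float) -> Color:
    """Bilinearly sample an RGB texture at UV coordinates in [0, 1].

    Assumed convention (illustrative only): u runs left to right, v runs
    bottom to top, and texture[row][col] stores row 0 at the top.
    """
    height, width = len(texture), len(texture[0])
    # Map UV to continuous pixel coordinates (texel centers sit at index + 0.5).
    x = min(max(u * width - 0.5, 0.0), width - 1.0)
    y = min(max((1.0 - v) * height - 0.5, 0.0), height - 1.0)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, width - 1), min(y0 + 1, height - 1)
    fx, fy = x - x0, y - y0

    def lerp(a: Color, b: Color, t: float) -> Color:
        # Linear interpolation between two colors, channel by channel.
        return tuple(ai + (bi - ai) * t for ai, bi in zip(a, b))

    top = lerp(texture[y0][x0], texture[y0][x1], fx)
    bottom = lerp(texture[y1][x0], texture[y1][x1], fx)
    return lerp(top, bottom, fy)


if __name__ == "__main__":
    # A 2x2 texture: red, green on top; blue, white on the bottom.
    tex = [[(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)],
           [(0.0, 0.0, 1.0), (1.0, 1.0, 1.0)]]
    print(sample_texture(tex, 0.5, 0.5))  # (0.5, 0.5, 0.5): the average of all four texels
```

Every vertex of the mesh stores a (u, v) pair, and the renderer interpolates those pairs across each triangle before calling a lookup like this one. That is why the quality of the flat texture image directly determines how the 3D surface looks.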

High-quality texture maps are crucial for creating realistic 3D renders. However, texturing complex 3D assets requires extensive manual painting to cover the UV space appropriately. This makes texturing one of the most labor-intensive parts of 3D content creation.

Difficulties with AI-Generated 3D Textures

Recent advances in AI have brought us text-to-image and image-to-image models like DALL-E and Stable Diffusion. Researchers have tried applying these 2D AI models to generate textures for 3D shapes.

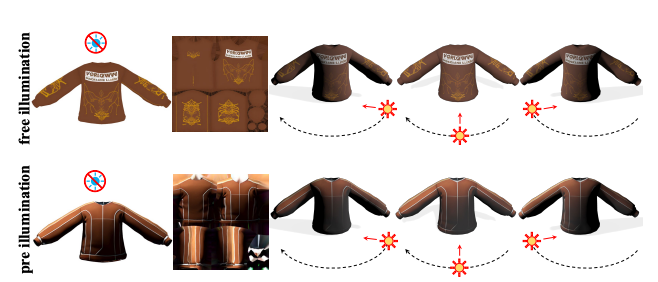

One approach is view-based texture generation, which samples 2D views of a 3D model, generates a matching image for each view, and composites the results onto the model. However, a key challenge arises: the generated images may contain pre-baked lighting and shadows. This makes the textures unsuitable for relighting the 3D model in new environments, which requires lighting-independent textures.
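Why does baked-in lighting matter so much? Here's a quick sketch using simple Lambertian shading (an assumption for illustration; real renderers use richer lighting models). It shows what happens when a generated image that already includes shading is stored as the texture and then lit a second time.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def lambert(normal: Vec3, light_dir: Vec3) -> float:
    """Lambertian shading factor max(0, n . l) for unit vectors."""
    return max(0.0, sum(n * l for n, l in zip(normal, light_dir)))


def render(albedo: Vec3, normal: Vec3, light_dir: Vec3) -> Vec3:
    """Shade one surface point: color = albedo * shading factor."""
    s = lambert(normal, light_dir)
    return tuple(a * s for a in albedo)


true_albedo = (0.8, 0.2, 0.2)   # the lighting-free color we actually want in the texture
normal = (0.0, 0.0, 1.0)
light_a = (0.0, 0.6, 0.8)       # light present when the image was generated (unit vector)
light_b = (0.0, 0.0, 1.0)       # new light used when relighting the model

# A view-based generator effectively outputs the *shaded* color and stores it as the texture.
baked_texture = render(true_albedo, normal, light_a)

correct = render(true_albedo, normal, light_b)    # relighting a lighting-free texture
wrong = render(baked_texture, normal, light_b)    # the old shading is applied a second time

if __name__ == "__main__":
    print(correct, wrong)
```

Under the new light, the baked texture renders at 80% of the correct brightness because the original light's shading factor of 0.8 is multiplied in twice. With real shadows and highlights the error is spatially varying, so it can't be fixed with a single global correction.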

Another approach optimizes the texture directly on the 3D surface, using gradients from 2D loss functions computed on rendered views. However, this approach struggles to produce complex, detailed textures.
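Here's a toy illustration of gradient-based texture optimization. A tiny hypothetical "renderer" has each pixel blend two texels, and plain gradient descent adjusts the texels until the rendered pixels match a target. Real methods of this kind use a differentiable rasterizer and often a loss derived from a 2D diffusion model rather than the simple mean squared error against known target pixels assumed here.

```python
from typing import List, Tuple

# Hypothetical toy setup: each rendered pixel blends two texels.
Sample = Tuple[int, int, float]  # (texel_i, texel_j, weight_on_j)


def render_pixels(texture: List[float], samples: List[Sample]) -> List[float]:
    """Differentiable toy renderer: pixel = (1 - w) * t[i] + w * t[j]."""
    return [(1.0 - w) * texture[i] + w * texture[j] for i, j, w in samples]


def optimize_texture(texture: List[float], samples: List[Sample], target: List[float],
                     lr: float = 0.5, steps: int = 500) -> List[float]:
    """Plain gradient descent on the mean squared error between rendered and target pixels."""
    tex = list(texture)
    n = len(target)
    for _ in range(steps):
        pixels = render_pixels(tex, samples)
        grad = [0.0] * len(tex)
        for (i, j, w), p, t in zip(samples, pixels, target):
            g = 2.0 * (p - t) / n      # dLoss/dPixel for the mean squared error
            grad[i] += g * (1.0 - w)   # chain rule through the blend
            grad[j] += g * w
        tex = [x - lr * dx for x, dx in zip(tex, grad)]
    return tex


if __name__ == "__main__":
    samples = [(0, 0, 0.0), (0, 1, 0.5), (1, 1, 0.0), (1, 2, 0.5), (2, 2, 0.0)]
    target = render_pixels([0.2, 0.6, 0.9, 0.0], samples)   # "views" of a known texture
    learned = optimize_texture([0.0, 0.0, 0.0, 0.5], samples, target)
    print([round(x, 3) for x in learned])  # [0.2, 0.6, 0.9, 0.5]
```

Texel 3 is never seen by any pixel, so it receives zero gradient and keeps its initial value. Only regions that show up in the rendered views get optimized at all.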

To address these issues, Paint3D proposes a coarse-to-fine framework that generates lighting-free 2K-resolution textures. As the paper says: "The key challenge addressed is generating high-quality textures without embedded illumination information, which allows the textures to be re-lighted or re-edited within modern graphics pipelines." Let's see how the authors solved this problem.

Overview of Paint3D Framework

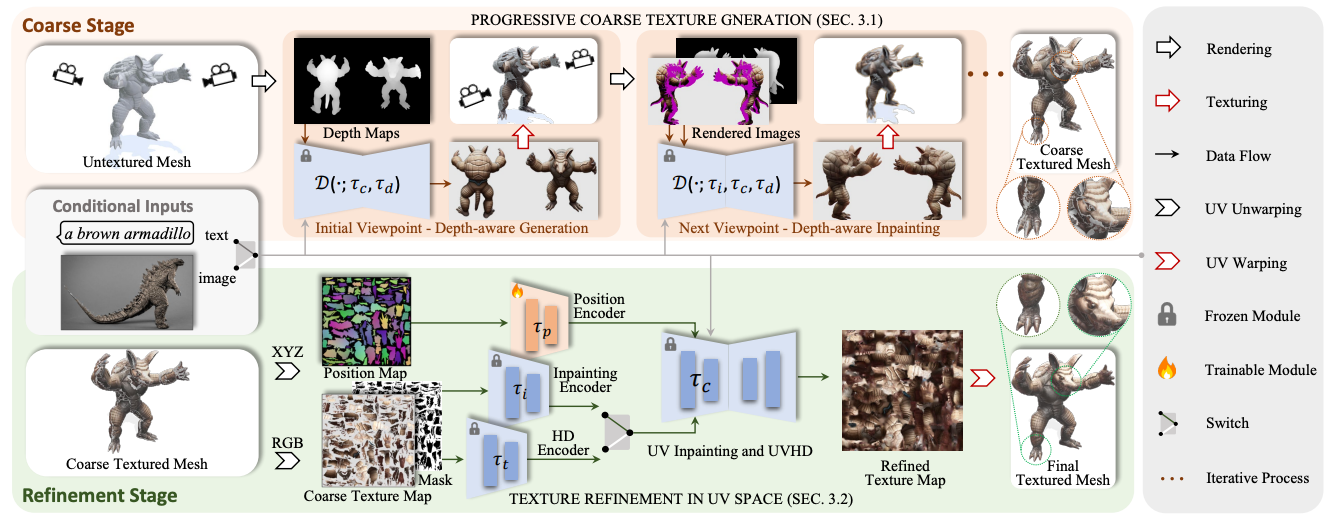

Paint3D introduces a new two-stage coarse-to-fine approach to generate lighting-independent textures for 3D models.

In the coarse texturing stage, it renders depth maps of the 3D model from multiple viewpoints around the object. These depth maps provide geometric information about the model's surface from different angles.
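The paper's exact camera layout isn't covered here, so the following is an assumption. This sketch places cameras evenly on a ring around the object, each looking at the origin, and measures how far a surface point lies along each camera's viewing axis. That distance is the value a depth map stores for the pixel where the point appears. The names `orbit_cameras` and `view_depth` and the default radius and elevation are hypothetical.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def orbit_cameras(n_views: int, radius: float = 2.0, elevation_deg: float = 15.0) -> List[Vec3]:
    """Hypothetical layout: camera positions evenly spaced in azimuth on a ring around the origin."""
    elev = math.radians(elevation_deg)
    cams = []
    for k in range(n_views):
        azim = 2.0 * math.pi * k / n_views
        cams.append((radius * math.cos(elev) * math.sin(azim),
                     radius * math.sin(elev),
                     radius * math.cos(elev) * math.cos(azim)))
    return cams


def view_depth(camera: Vec3, point: Vec3, target: Vec3 = (0.0, 0.0, 0.0)) -> float:
    """Depth of `point` along the viewing axis of a camera that looks at `target`."""
    forward = [t - c for t, c in zip(target, camera)]
    norm = math.sqrt(sum(f * f for f in forward))
    forward = [f / norm for f in forward]
    return sum((p - c) * f for p, c, f in zip(point, camera, forward))


if __name__ == "__main__":
    cams = orbit_cameras(4, radius=2.0, elevation_deg=0.0)
    point = (0.0, 0.0, 0.5)
    # Same surface point, different depth from each viewpoint.
    print([round(view_depth(c, point), 3) for c in cams])
```

With four cameras at zero elevation, the point at z = 0.5 is 1.5 units deep for the front camera and 2.5 units for the back one. Each view's depth map encodes the shape from that angle.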

Paint3D feeds these multi-view depth maps, along with the text or image prompt, into a pre-trained depth-conditioned diffusion model built with ControlNet. This produces a series of view-dependent texture images aligned with the model's 3D shape.
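Raw depth values usually need converting before they go into a depth ControlNet. Commonly used depth ControlNet checkpoints expect an image in which nearer surfaces are brighter. Here's a minimal sketch of that conversion. The background value of 0 and the dim floor of 20 for the farthest surface are my own assumed choices, and the function name is hypothetical.

```python
from typing import List, Optional


def depth_to_control_image(depth: List[List[Optional[float]]]) -> List[List[int]]:
    """Convert a raw depth map into an 8-bit conditioning image.

    Assumed mapping: the nearest surface becomes 255, the farthest surface 20,
    and background pixels (None) become 0 so the silhouette stays visible.
    """
    values = [d for row in depth for d in row if d is not None]
    if not values:
        return [[0 for _ in row] for row in depth]  # nothing rendered: all background
    near, far = min(values), max(values)
    span = (far - near) or 1.0  # avoid dividing by zero for a flat depth map
    image = []
    for row in depth:
        out_row = []
        for d in row:
            if d is None:
                out_row.append(0)
            else:
                closeness = 1.0 - (d - near) / span  # 1 at nearest, 0 at farthest
                out_row.append(int(round(20 + closeness * 235)))
        image.append(out_row)
    return image


if __name__ == "__main__":
    print(depth_to_control_image([[None, 1.0], [3.0, 1.0]]))  # [[0, 255], [20, 255]]
```

In the Hugging Face diffusers library, for example, an image like this is what you would pass as the `image` argument of `StableDiffusionControlNetPipeline` loaded with a depth ControlNet checkpoint. Whether Paint3D uses that exact setup isn't stated here.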

By back-projecting these generated images onto the 3D surface, Paint3D creates an initial coarse texture map. Each surface region receives texture from every view in which it is visible, so using more viewpoints improves coverage.
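Back-projection boils down to a weighted average per texel. A common heuristic in view-based texturing, assumed here and not necessarily Paint3D's exact scheme, weights each view by how directly it faces the surface. Texels that no view sees are left empty. The function names are hypothetical, and colors are grayscale to keep the sketch short.

```python
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


def view_weight(normal: Vec3, to_camera: Vec3) -> float:
    """Cosine between the surface normal and the unit direction to the camera; 0 if facing away."""
    return max(0.0, sum(n * c for n, c in zip(normal, to_camera)))


def back_project(observations: List[Tuple[int, float, float]], n_texels: int) -> List[Optional[float]]:
    """Blend per-view colors into a grayscale texel array.

    observations: (texel_index, color, weight) triples, one for each view that sees the texel.
    Texels that no view sees stay None, leaving holes for a later inpainting stage.
    """
    acc = [0.0] * n_texels
    wsum = [0.0] * n_texels
    for texel, color, weight in observations:
        acc[texel] += weight * color
        wsum[texel] += weight
    return [a / w if w > 0.0 else None for a, w in zip(acc, wsum)]


if __name__ == "__main__":
    normal = (0.0, 0.0, 1.0)
    w_front = view_weight(normal, (0.0, 0.0, 1.0))   # camera straight on
    w_slant = view_weight(normal, (0.0, 0.6, 0.8))   # camera at an angle
    obs = [(0, 0.2, w_front), (0, 0.6, w_slant * 0.625), (1, 0.9, w_slant)]
    print(back_project(obs, 3))  # texel 2 was never observed, so it stays None
```

Favoring head-on views makes sense because grazing views stretch a few image pixels across a large patch of surface.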

In the refinement stage, Paint3D applies specialized diffusion models in UV space to further enhance this coarse texture. UV space is the 2D unwrapping of the 3D surface, so the texture can be processed like an ordinary image.
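Because UV space is a flat image, per-texel 3D information can be stored alongside the texture. A common representation is a position map, in which each texel holds the 3D point it maps to. The sketch below builds one by testing each texel center against the mesh's UV triangles and interpolating vertex positions with barycentric weights. The function names and the brute-force loop over triangles are illustrative assumptions. Real tools rasterize on the GPU.

```python
from typing import List, Optional, Tuple

UV = Tuple[float, float]
Vec3 = Tuple[float, float, float]


def barycentric(p: UV, a: UV, b: UV, c: UV) -> Optional[Tuple[float, float, float]]:
    """Barycentric weights of p in UV triangle abc, or None if p lies outside it."""
    det = (b[1] - c[1]) * (a[0] - c[0]) + (c[0] - b[0]) * (a[1] - c[1])
    if abs(det) < 1e-12:
        return None  # degenerate triangle
    w_a = ((b[1] - c[1]) * (p[0] - c[0]) + (c[0] - b[0]) * (p[1] - c[1])) / det
    w_b = ((c[1] - a[1]) * (p[0] - c[0]) + (a[0] - c[0]) * (p[1] - c[1])) / det
    w_c = 1.0 - w_a - w_b
    if min(w_a, w_b, w_c) < 0.0:
        return None
    return (w_a, w_b, w_c)


def position_map(uvs: List[UV], positions: List[Vec3], faces: List[Tuple[int, int, int]],
                 size: int) -> List[List[Optional[Vec3]]]:
    """Rasterize a size x size UV position map; texels outside every UV triangle stay None."""
    pmap: List[List[Optional[Vec3]]] = [[None] * size for _ in range(size)]
    for row in range(size):
        for col in range(size):
            # Texel center in UV space (row 0 is the top, v points up).
            p = ((col + 0.5) / size, 1.0 - (row + 0.5) / size)
            for i, j, k in faces:
                w = barycentric(p, uvs[i], uvs[j], uvs[k])
                if w is not None:
                    pmap[row][col] = tuple(
                        w[0] * a + w[1] * b + w[2] * c
                        for a, b, c in zip(positions[i], positions[j], positions[k]))
                    break
    return pmap


if __name__ == "__main__":
    # A 2x2 square in 3D, unwrapped onto the full UV square as two triangles.
    uvs = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
    positions = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (2.0, 2.0, 0.0), (0.0, 2.0, 0.0)]
    print(position_map(uvs, positions, [(0, 1, 2), (0, 2, 3)], size=2))
```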

It uses a UV inpainting model to fill any remaining holes or incomplete regions in the unwrapped texture map. This model leverages the 3D surface position data for coherence.
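Paint3D's inpainting step is a learned diffusion model. As a much simpler classical stand-in, swapped in for illustration only, the sketch below fills holes by repeatedly averaging known neighbors, growing inward from the hole's border one ring at a time. The function name is hypothetical.

```python
from typing import List, Optional


def fill_holes(texture: List[List[Optional[float]]], max_iters: int = 100) -> List[List[Optional[float]]]:
    """Fill None texels by repeatedly averaging their known 4-neighbors (classical stand-in, not a diffusion model)."""
    tex = [row[:] for row in texture]
    h, w = len(tex), len(tex[0])
    for _ in range(max_iters):
        updates = {}
        for r in range(h):
            for c in range(w):
                if tex[r][c] is None:
                    known = [tex[rr][cc]
                             for rr, cc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
                             if 0 <= rr < h and 0 <= cc < w and tex[rr][cc] is not None]
                    if known:
                        updates[(r, c)] = sum(known) / len(known)
        if not updates:
            break  # no hole texel touches known data any more
        # Apply all updates at once so each pass grows the fill by exactly one ring.
        for (r, c), value in updates.items():
            tex[r][c] = value
    return tex


if __name__ == "__main__":
    print(fill_holes([[1.0, None, 3.0]]))   # [[1.0, 2.0, 3.0]]
    print(fill_holes([[1.0, None, None]]))  # [[1.0, 1.0, 1.0]]
```

This fill only looks at neighbors in UV space. Texels that sit next to each other in the image can belong to distant parts of the mesh, and neighboring surface points can be split across a UV seam. That helps explain why giving a learned inpainting model 3D position data can keep its fill coherent.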

Finally, it employs a UV super-resolution model to enhance texture details and resolution. This produces a polished, lighting-independent 2K texture optimized for the 3D shape.
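For scale, doubling each side of a texture quadruples the texel count. For example, a 1024 x 1024 map has 1,048,576 texels and a 2048 x 2048 map has 4,194,304. The naive baseline a super-resolution model improves on is plain interpolation, sketched below as 2x bilinear upsampling. This is my own illustration and not part of Paint3D. It adds texels but cannot invent new detail.

```python
from typing import List


def upsample2x(tex: List[List[float]]) -> List[List[float]]:
    """Bilinear 2x upsampling of a grayscale texture (texel-center / align_corners=False convention)."""
    h, w = len(tex), len(tex[0])
    out = []
    for r in range(2 * h):
        # Map the output texel center back into input coordinates and clamp at the borders.
        y = min(max((r + 0.5) / 2 - 0.5, 0.0), h - 1.0)
        y0 = int(y)
        y1 = min(y0 + 1, h - 1)
        fy = y - y0
        row = []
        for c in range(2 * w):
            x = min(max((c + 0.5) / 2 - 0.5, 0.0), w - 1.0)
            x0 = int(x)
            x1 = min(x0 + 1, w - 1)
            fx = x - x0
            top = tex[y0][x0] * (1 - fx) + tex[y0][x1] * fx
            bottom = tex[y1][x0] * (1 - fx) + tex[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


if __name__ == "__main__":
    print(upsample2x([[0.0, 1.0]]))  # [[0.0, 0.25, 0.75, 1.0], [0.0, 0.25, 0.75, 1.0]]
```

Every output value is a weighted average of existing texels, so the result can only be smoother, never sharper. A learned super-resolution model is meant to fill that gap by synthesizing plausible fine detail.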

Quantitative Comparisons

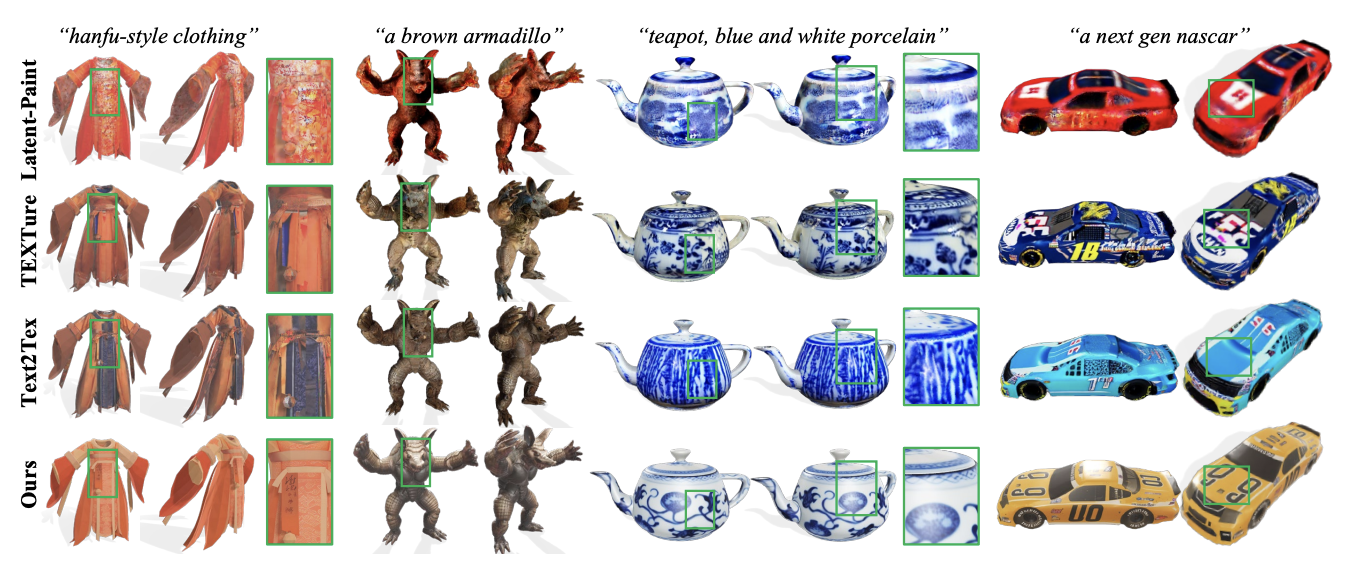

The paper presents quantitative experiments benchmarking Paint3D against prior state-of-the-art methods like TEXTure, Text2Tex, and LatentPaint. Paint3D significantly improves image quality metrics like FID and KID compared to these baselines. User studies also show the generated textures have higher fidelity, realism, and completeness versus other approaches.
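For reference, these are the standard definitions of the two metrics (lower is better for both). The paper's exact evaluation protocol, meaning which rendered and reference images are compared, isn't described here. FID compares the mean $\mu$ and covariance $\Sigma$ of Inception features for real ($r$) and generated ($g$) images. In one dimension it reduces to a simple worked form that shows what it penalizes: shifted means and mismatched spreads. KID is the squared maximum mean discrepancy between the two feature sets under a cubic polynomial kernel on $d$-dimensional features.

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\left(\Sigma_r + \Sigma_g - 2\left(\Sigma_r \Sigma_g\right)^{1/2}\right)
$$

$$
\mathrm{FID}_{\text{1D}} = (\mu_r - \mu_g)^2 + \sigma_r^2 + \sigma_g^2 - 2\sigma_r\sigma_g = (\mu_r - \mu_g)^2 + (\sigma_r - \sigma_g)^2
$$

$$
\mathrm{KID} = \mathrm{MMD}^2(X_r, X_g), \qquad k(x, y) = \left(\tfrac{1}{d}\, x^\top y + 1\right)^3
$$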

Results and Limitations

Beyond the numbers, you can see some examples on the project's GitHub page and judge for yourself.

The framework generalizes across diverse 3D object categories. However, it still has limitations with certain material properties, such as glossiness, and with normal maps. Image-to-geometry translation also remains difficult.

Nonetheless, Paint3D represents an important advance in AI-assisted content creation. Automating tedious texture painting could greatly accelerate 3D workflows, with applications spanning gaming, VR, visual effects, and beyond.

We can expect more text-to-3D progress as generative models continue to mature. Paint3D points to a future where AI can automate more and more of a human creator's workflow. Can't wait to see where this goes!

By the way, if you found this article interesting, here are a few more articles on 3D AI models you may want to check out:

- How to use an AI 3D model generator to create a DnD character

- AI 3D Model Generators: Overview and Use Cases

- Transforming 2D Images into 3D with the AdaMPI AI Model

- 3D Model Generation with AI: A Deep Dive into the Point-E Model

- AI 3D Model Generator: Exploring the Shap-e Model

- Creating stylized clouds with Shader Graph and Shuriken in Unity 3D

