Demystifying cogvideo: Your Guide to NightmareAI's Text-to-Video AI Model

Discover how the cogvideo AI model can bring your text-based ideas to life with stunning videos.

Picture this: you're lounging on your couch, idly daydreaming about the novel you've been working on. You find yourself wishing for a way to transform your prose into vibrant, visual scenes, but the thought of learning video editing or animation seems daunting. Enter cogvideo, an AI model by nightmareai that can turn your written words into dynamic video content. And lucky for you, you've stumbled onto a guide that can help you do just that.

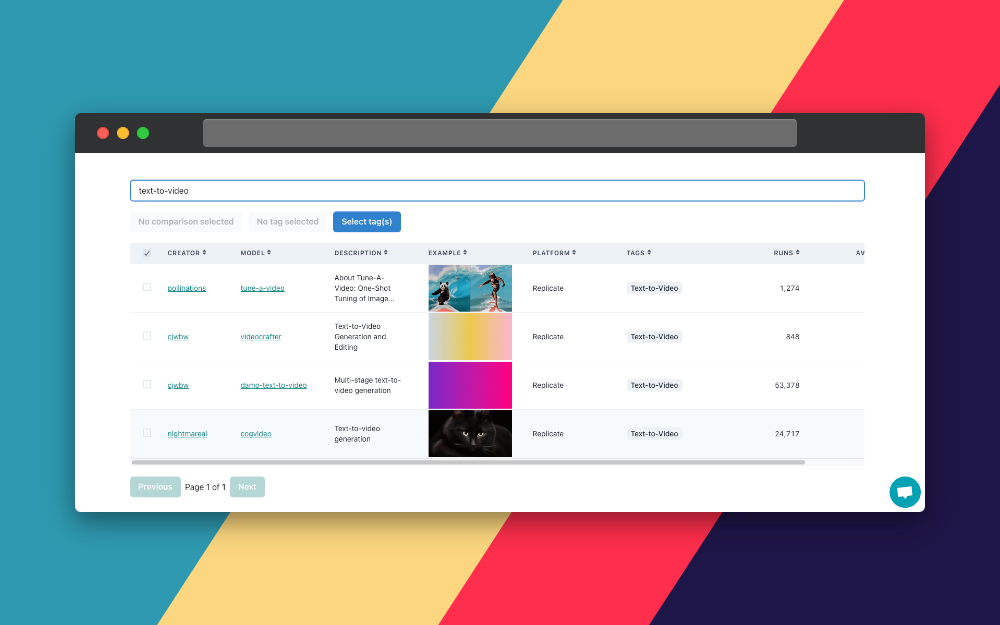

a black cat

In this guide, we'll demystify cogvideo, currently sitting at a respectable rank 138 on AIModels.fyi, delve into its inner workings, and show you how to create your own video from text. We'll also see how we can use AIModels.fyi to find similar models and decide which one we like. So grab a cup of coffee, sit back, and let's dive in.

About cogvideo

Cogvideo is an AI model created by nightmareai, designed for text-to-video generation. It translates written prompts into visual outputs, effectively turning your text into a movie. How cool is that? You can find more detailed information about cogvideo on its detail page.

The model runs on an Nvidia A100 (40GB) GPU. The average run time isn't specified, but the results are usually impressive and worth the wait. And the icing on the cake? Cogvideo has been run 24,717 times, making it one of the most popular text-to-video AI models out there.

Understanding the Inputs and Outputs of cogvideo

Before we dive into how to use cogvideo, it's crucial to understand its inputs and outputs.

Inputs

Cogvideo accepts several inputs, only some of which are required:

- Prompt: The text string you want to transform into video.

- Seed: An integer that seeds the random generation. The default value is -1, which picks a random seed.

- Translate: A boolean value that determines if the prompt is translated from English to Simplified Chinese.

- Both_Stages: When checked, the model runs both stages of its processing, providing a comprehensive output. If unchecked, the output is quicker but contains fewer frames.

- Use_Guidance: If checked, stage 1 guidance is used, which is generally recommended.

- Image_Prompt: This is an optional input. It's an image file used as the starting point for the video.
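
To make the list above concrete, here is a minimal sketch of an input object that sets every option. It assumes the API expects lowercase snake_case keys (`both_stages`, `use_guidance`, `image_prompt`), which the list above does not confirm. `buildCogvideoInput` is a hypothetical helper, and every default except the seed of -1 is an illustrative assumption.

```javascript
// Hypothetical helper: assembles an input object for cogvideo.
// Key names are ASSUMED to be the lowercase forms of the inputs listed above.
function buildCogvideoInput(prompt, options = {}) {
  if (typeof prompt !== "string" || prompt.trim() === "") {
    throw new Error("prompt must be a non-empty string");
  }
  const seed = options.seed ?? -1; // -1 asks the model to pick a random seed
  if (!Number.isInteger(seed)) {
    throw new Error("seed must be an integer");
  }
  const input = {
    prompt,
    seed,
    translate: options.translate ?? false,     // assumed default
    both_stages: options.bothStages ?? true,   // assumed default
    use_guidance: options.useGuidance ?? true, // assumed default
  };
  // The image prompt is optional, so only include it when one is given.
  if (options.imagePrompt !== undefined) {
    input.image_prompt = options.imagePrompt;
  }
  return input;
}

// Quicker run: fixed seed, first stage only.
const exampleInput = buildCogvideoInput("a black cat", { seed: 42, bothStages: false });
console.log(exampleInput);
```

Keeping the validation in one place means a bad seed or an empty prompt fails locally, before a GPU run is started.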

Outputs

The output of cogvideo is a sequence of URIs that point to the generated video frames. The output schema looks like this:

{
  "type": "array",
  "items": {
    "type": "string",
    "format": "uri"
  },
  "title": "Output",
  "x-cog-array-type": "iterator"
}
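
Because the output is an array of URI strings, client code will usually want to check it before using it. The sketch below shows one way to do that. `pickFinalUri` is a hypothetical helper, and treating the last URI as the most complete result is an assumption about how the iterator output is ordered, not something the schema guarantees.

```javascript
// Hypothetical helper: checks that `output` matches the schema above
// (an array of URI strings) and returns the last entry.
function pickFinalUri(output) {
  if (!Array.isArray(output) || output.length === 0) {
    throw new Error("expected a non-empty array of URIs");
  }
  for (const item of output) {
    if (typeof item !== "string") {
      throw new Error("expected every item to be a string");
    }
    new URL(item); // throws a TypeError if the string is not a valid URL
  }
  // ASSUMPTION: later items supersede earlier ones.
  return output[output.length - 1];
}

// Placeholder URIs for illustration only, not real model output.
const exampleOutput = [
  "https://example.com/out-0.mp4",
  "https://example.com/out-1.mp4",
];
console.log(pickFinalUri(exampleOutput)); // prints "https://example.com/out-1.mp4"
```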

Now, with our knowledge of inputs and outputs, let's move on to the next step: running cogvideo to generate a video from our text.

Using cogvideo: Step-by-step Guide

If the mere thought of code sends shivers down your spine, fear not! You can interact with cogvideo's demo directly on Replicate via their UI. It's a fun way to play with the model's parameters and see the results. For the brave coders among us, this guide will walk you through interacting with cogvideo's Replicate API.

Step 1: Install the Replicate Client

If you're using Node.js, first, install the Replicate client using npm:

npm install replicate

Next, copy your API token and set it as an environment variable:

export REPLICATE_API_TOKEN=<paste-your-token-here>
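
A missing token is an easy mistake to make, so it can help to check for it before creating the client. This is a small sketch of such a check. `getApiToken` is a hypothetical helper, not part of the Replicate client.

```javascript
// Hypothetical helper: reads the token from an environment-like object
// and fails early with a readable message if it is missing.
function getApiToken(env = process.env) {
  const token = env.REPLICATE_API_TOKEN;
  if (typeof token !== "string" || token.trim() === "") {
    throw new Error("REPLICATE_API_TOKEN is not set; export it before running the script");
  }
  return token.trim();
}

// Intended use (not executed here):
//   const replicate = new Replicate({ auth: getApiToken() });
```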

Step 2: Run the Model

Now, you're all set to run the model with your desired inputs:

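// Top-level await requires an ES module (.mjs or "type": "module" in package.json).
import Replicate from "replicate";

// Create a client authenticated with the token from the environment.
const replicate = new Replicate({
  auth: process.env.REPLICATE_API_TOKEN,
});

// Run the pinned model version and wait for the prediction to finish.
const output = await replicate.run(
  "nightmareai/cogvideo:00b1c7885c5f1d44b51bcb56c378abc8f141eeacf94c1e64998606515fe63a8d",
  {
    input: {
      prompt: "..."
    }
  }
);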
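
In a real script the call can fail (bad token, network error, failed prediction), so it is worth wrapping. The sketch below is one way to do that. `generateVideo` is a hypothetical wrapper, and `fakeClient` is a stand-in so the example runs without a token or network access. In practice you would pass in the `replicate` instance created above.

```javascript
const MODEL =
  "nightmareai/cogvideo:00b1c7885c5f1d44b51bcb56c378abc8f141eeacf94c1e64998606515fe63a8d";

// Hypothetical wrapper: `client` is anything with a run(model, options) method,
// such as a Replicate client instance.
async function generateVideo(client, prompt) {
  try {
    const output = await client.run(MODEL, { input: { prompt } });
    return { ok: true, output };
  } catch (err) {
    // Report the failure as a value instead of letting it propagate.
    return { ok: false, error: err instanceof Error ? err.message : String(err) };
  }
}

// Stand-in client for illustration; it returns a placeholder URI.
const fakeClient = {
  run: async (model, options) => [
    `https://example.com/${encodeURIComponent(options.input.prompt)}.mp4`,
  ],
};

generateVideo(fakeClient, "a black cat").then((result) => console.log(result));
```

Passing the client in as an argument keeps the wrapper easy to test and leaves authentication in one place.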

Step 3: Setting Up a Webhook (Optional)

You can set a webhook URL to be notified when the prediction is complete. Here's how:

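// Create the prediction without waiting for it to finish.
const prediction = await replicate.predictions.create({
  version: "00b1c7885c5f1d44b51bcb56c378abc8f141eeacf94c1e64998606515fe63a8d",
  input: {
    prompt: "..."
  },
  // Replicate sends a POST request to this URL when the prediction completes.
  webhook: "https://example.com/your-webhook",
  webhook_events_filter: ["completed"]
});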

Webhooks are incredibly handy if you'd like to perform an action as soon as the model has completed its prediction.
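
On the receiving side, your server has to decide what to do with the request body. The sketch below assumes the body is the prediction object serialized as JSON, with `id`, `status`, and `output` fields and a `status` of `"succeeded"` on success. `handleWebhookBody` and the `action` values it returns are hypothetical names for illustration, and wiring it into an HTTP server is left to whatever framework you use.

```javascript
// Hypothetical handler for the raw JSON body posted to the webhook URL.
// ASSUMPTION: the body is a prediction object with `id`, `status`, and `output`.
function handleWebhookBody(rawBody) {
  let prediction;
  try {
    prediction = JSON.parse(rawBody);
  } catch {
    return { action: "reject", reason: "body is not valid JSON" };
  }
  if (prediction === null || typeof prediction !== "object") {
    return { action: "reject", reason: "body is not a JSON object" };
  }
  if (prediction.status === "succeeded") {
    // The run finished, so the output URIs are ready to fetch.
    return { action: "download", id: prediction.id, uris: prediction.output ?? [] };
  }
  // Any other status (for example a failed run) is only logged.
  return { action: "log", id: prediction.id, status: prediction.status };
}

// Placeholder payload for illustration only.
const sampleBody = JSON.stringify({
  id: "example-id",
  status: "succeeded",
  output: ["https://example.com/out.mp4"],
});
console.log(handleWebhookBody(sampleBody));
```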

Taking it Further - Finding Other Text-to-Video Models with AIModels.fyi

With so many fantastic models out there, why stop at one? AIModels.fyi is a treasure trove for discovering AI models that cater to various creative needs, from image generation to text-to-video conversion.

Step 1: Visit AIModels.fyi

Head over to AIModels.fyi to kickstart your hunt for other exciting models.

Step 2: Use the Search Bar

The search bar at the top is your best friend. Enter terms related to text-to-video models, and it'll present a list of models related to your query.

Step 3: Filter the Results

On the left side of the search results page, you'll find several filters to refine your search. You can sort models by type, cost, popularity, or even by creator. Looking for a text-to-video model that's popular and cost-effective? Just adjust the filters accordingly.

Conclusion

In this guide, we took a deep dive into the cogvideo model, its inputs and outputs, and how to use it for text-to-video generation. We also explored how to leverage AIModels.fyi to discover similar models, opening up a world of AI-powered content creation possibilities.

I hope this guide has ignited your curiosity about the endless creative opportunities AI has to offer. For more tutorials and updates on new and exciting AI models, don't forget to subscribe. Check out my Twitter for more updates, and dive into these additional guides and resources. Enjoy your journey exploring the world of AI with AIModels.fyi and remember, the only limit is your imagination. Happy video creating!

