A Beginner's Guide to Line Detection and Image Transformation with ControlNet

Unleashing the Power of ControlNet-Hough AI Model: Beginner's Guide

Ever felt stuck while trying to use one image to create another? Well, worry no more. With the ControlNet-Hough model, you can transform your images easily and effectively.

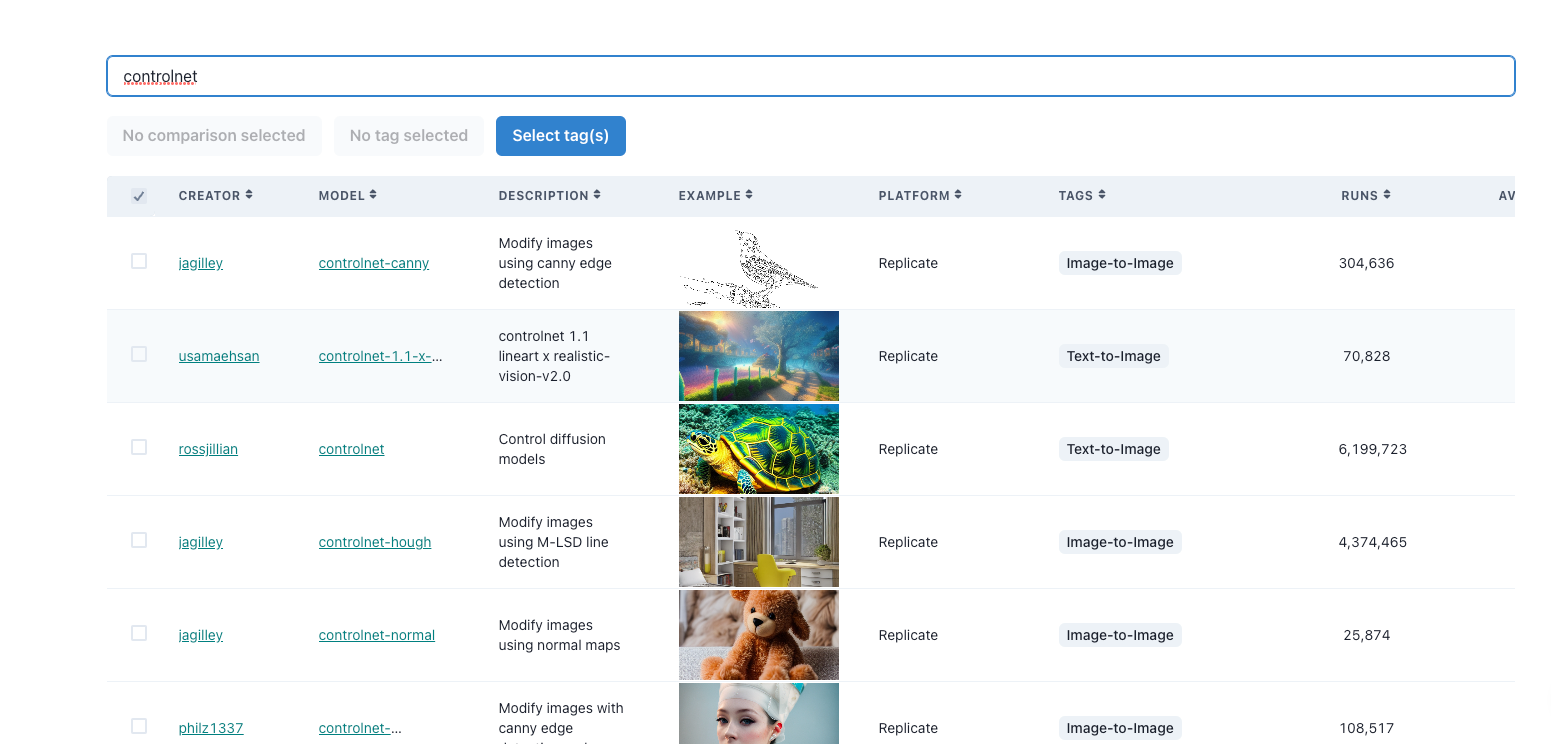

In this guide, we'll walk you through the nitty-gritty of ControlNet-Hough, an AI model that ranks 11th on AIModels.fyi. We'll also see how to use AIModels.fyi to find similar models and decide which one we like best. So, buckle up, let's dive right in!

About the ControlNet-Hough Model

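This gem of a model, ControlNet-Hough, is developed by jagilley, a prominent figure in the AI modeling world. It uses M-LSD line detection to find the straight lines in your input image, then generates a new image that follows those lines and is guided by your text prompt. The result is a unique, altered version of your original image.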

ControlNet-Hough is in the Image-to-Image category, meaning it takes an image as an input and spits out another image as an output. The processing cost for each run is $0.0161 USD and the average completion time is just 7 seconds, thanks to the Nvidia A100 (40GB) GPU it uses.
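If you plan to run the model many times, the two figures above are enough for a rough budget. Here is a minimal sketch of that arithmetic. The helper name estimateBatch is hypothetical, and the estimate assumes runs happen one after another at the average cost and time quoted above, which real workloads may not match.

```javascript
// Figures quoted in this guide; actual pricing and timing may differ.
const COST_PER_RUN_USD = 0.0161;
const AVG_SECONDS_PER_RUN = 7;

// Hypothetical helper: rough cost and duration for a batch of sequential runs.
function estimateBatch(runs) {
  if (!Number.isInteger(runs) || runs < 0) {
    throw new RangeError("runs must be a non-negative integer");
  }
  return {
    // Rounded to cents.
    costUsd: Number((runs * COST_PER_RUN_USD).toFixed(2)),
    // Rounded to one decimal place; assumes no parallelism and no queueing.
    sequentialMinutes: Number(((runs * AVG_SECONDS_PER_RUN) / 60).toFixed(1)),
  };
}
```

For example, estimateBatch(1000) works out to about $16.10 and a little under two hours of sequential processing.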

It's worth noting that this model has been run over 4,374,465 times, which speaks volumes about its popularity.

Understanding the Inputs and Outputs of the ControlNet-Hough Model

As we embark on this journey of understanding the model, let's break down its Inputs and Outputs.

Inputs

The ControlNet-Hough Model takes several inputs, including:

image (file): The main input, the image you want to modify.

prompt (string): Your prompt for the model.

num_samples (string): The number of samples you want (use with caution, as higher values may cause out-of-memory errors).

image_resolution (string): The desired resolution of the generated image.

ddim_steps (integer): The number of DDIM sampling steps.

scale (number): The guidance scale.

seed (integer): The seed for generating the output.

eta (number): The value for eta (DDIM).

a_prompt (string): An added prompt, defaulting to "best quality, extremely detailed".

n_prompt (string): A negative prompt, defaulting to "longbody, lowres, bad anatomy, bad hands, missing fingers, extra digit, fewer digits, cropped, worst quality, low quality".

detect_resolution (integer): Resolution for detection (only applicable when model type is 'HED', 'Segmentation', or 'MLSD').

value_threshold (number): Value threshold (only applicable when model type is 'MLSD').

distance_threshold (number): Distance threshold (only applicable when model type is 'MLSD').
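To make the parameter list above more concrete, here is a minimal sketch of a helper that assembles an input object and checks each field's type before you send it. The helper name buildInput is hypothetical. The sketch also assumes the image is passed as a URL string and treats it as required. Only the a_prompt and n_prompt defaults come from the list above; no other default values are implied.

```javascript
// Defaults for a_prompt and n_prompt are taken from the parameter list above.
const PROMPT_DEFAULTS = {
  a_prompt: "best quality, extremely detailed",
  n_prompt:
    "longbody, lowres, bad anatomy, bad hands, missing fingers, extra digit, " +
    "fewer digits, cropped, worst quality, low quality",
};

// Expected JavaScript type for each documented parameter.
const PARAM_TYPES = {
  image: "string", // assumption: the image is passed as a URL string
  prompt: "string",
  num_samples: "string",
  image_resolution: "string",
  ddim_steps: "number",
  scale: "number",
  seed: "number",
  eta: "number",
  a_prompt: "string",
  n_prompt: "string",
  detect_resolution: "number",
  value_threshold: "number",
  distance_threshold: "number",
};

// Parameters the list above documents as integers.
const INTEGER_PARAMS = ["ddim_steps", "seed", "detect_resolution"];

// Hypothetical helper: merge prompt defaults, then reject unknown or mistyped fields.
function buildInput(overrides) {
  const input = { ...PROMPT_DEFAULTS, ...overrides };
  for (const [name, value] of Object.entries(input)) {
    const expected = PARAM_TYPES[name];
    if (!expected) throw new Error(`Unknown parameter: ${name}`);
    if (typeof value !== expected) {
      throw new TypeError(`${name} must be a ${expected}`);
    }
    if (INTEGER_PARAMS.includes(name) && !Number.isInteger(value)) {
      throw new TypeError(`${name} must be an integer`);
    }
  }
  // Assumption: image is treated as required because it is the main input.
  if (!input.image) throw new Error("image is required");
  return input;
}
```

A call such as buildInput({ image: "https://example.com/room.png", prompt: "a modern living room" }) returns an object you can pass as the model's input. The example.com URL is only a placeholder.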

Outputs

The output of this model is an array of URI strings representing the modified images.
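Since the output is a list of image URIs, a common next step is saving those images locally. Below is a minimal sketch, assuming the output really is an array of URI strings as described above. The helper name saveOutputs, the output-N.png naming scheme, and the .png extension are all assumptions. The fetchImpl parameter defaults to the global fetch available in recent Node.js versions.

```javascript
// Sketch: download each image URI returned by the model into a local folder.
async function saveOutputs(uris, outDir, fetchImpl = fetch) {
  // Dynamic imports work in both ES modules and CommonJS scripts.
  const { mkdir, writeFile } = await import("node:fs/promises");
  const path = await import("node:path");

  await mkdir(outDir, { recursive: true });
  const saved = [];
  for (const [index, uri] of uris.entries()) {
    const response = await fetchImpl(uri);
    if (!response.ok) {
      throw new Error(`Download failed for ${uri}: HTTP ${response.status}`);
    }
    // Assumption: files are named by position and saved with a .png extension.
    const filePath = path.join(outDir, `output-${index}.png`);
    await writeFile(filePath, Buffer.from(await response.arrayBuffer()));
    saved.push(filePath);
  }
  return saved; // paths of the files written, in the same order as the URIs
}
```

If your client version returns something other than plain strings, you would need to adapt the download step accordingly.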

Now that we're equipped with the knowledge of Inputs and Outputs, let's learn how to interact with this model.

Using the ControlNet-Hough Model

Whether you're comfortable with code or prefer a more visual approach, there's an option for you. You can directly interact with the model's "demo" on Replicate via their UI. But, if you prefer coding, here's how to interact with the ControlNet-Hough Model.

Step 1: Install Replicate

First, you need to install the Node.js client by running npm install replicate.

Step 2: Copy your API token

Next, authenticate by setting your API token as an environment variable: export REPLICATE_API_TOKEN=<paste-your-token-here>. You can find your API token in your account settings.
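A forgotten export is an easy mistake to make, so it can help to check for the token before calling the model. Here is a minimal sketch. The helper name requireToken is hypothetical and not part of the Replicate client.

```javascript
// Sketch: fail fast with a clear message if the API token is missing.
function requireToken(env = process.env) {
  const token = env.REPLICATE_API_TOKEN;
  if (typeof token !== "string" || token.trim() === "") {
    throw new Error(
      "REPLICATE_API_TOKEN is not set; export it before running the script."
    );
  }
  // Trim stray whitespace that can sneak in when pasting the token.
  return token.trim();
}
```

You could then pass the result of requireToken() as the auth value when creating the client.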

Step 3: Run the model

Finally, run the ControlNet-Hough model using the following code:

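// Top-level await requires an ES module (for example, a .mjs file).
import Replicate from "replicate";

// Create a client authenticated with the token from Step 2.
const replicate = new Replicate({
  auth: process.env.REPLICATE_API_TOKEN,
});

// Run the model and wait for the prediction to finish.
const output = await replicate.run(
  "jagilley/controlnet-hough:854e8727697a057c525cdb45ab037f64ecca770a1769cc52287c2e56472a247b",
  {
    input: {
      image: "...",
    },
  }
);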

Remember, you can also set a webhook to be called when the prediction is complete. Check out the webhook docs for details on setting that up.
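As a rough illustration of the webhook option, the sketch below starts a prediction without waiting for it and asks Replicate to notify a URL when it completes. It assumes client is a Replicate instance created as in Step 3 and uses the client's predictions.create method. The helper name startWithWebhook and the webhook URL are hypothetical, and the HTTPS check reflects an assumption about what the service accepts.

```javascript
// Version hash copied from the model identifier used in Step 3.
const CONTROLNET_HOUGH_VERSION =
  "854e8727697a057c525cdb45ab037f64ecca770a1769cc52287c2e56472a247b";

// Sketch: create a prediction and register a webhook for its completion.
async function startWithWebhook(client, input, webhookUrl) {
  // Assumption: webhook endpoints should be reachable over HTTPS.
  if (!webhookUrl.startsWith("https://")) {
    throw new Error("Webhook URL must use HTTPS");
  }
  // predictions.create returns as soon as the prediction is queued,
  // rather than waiting for the generated images.
  return client.predictions.create({
    version: CONTROLNET_HOUGH_VERSION,
    input,
    webhook: webhookUrl,
    webhook_events_filter: ["completed"], // only notify when the run finishes
  });
}
```

With a real client, a call might look like startWithWebhook(replicate, { image: "..." }, "https://example.com/replicate-hook"), where the URL is a placeholder for an endpoint you control.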

Taking it Further - Finding other Image-to-Image Models with AIModels.fyi

AIModels.fyi is a fantastic resource for discovering AI models catering to various creative needs, including image generation, image-to-image conversion, and much more.

Step 1: Visit AIModels.fyi

Head over to AIModels.fyi to begin your search for similar models.

Step 2: Use the Search Bar

Use the search bar at the top of the page to search for models with specific keywords, such as "image-to-image" or "line detection". This will show you a list of models related to your search query.

Step 3: Filter the Results

On the results page, you'll find several filters that can help you narrow down the list of models. You can filter and sort models by type, cost, popularity, or even specific creators.

Conclusion

In this guide, we explored how to interact with and use the ControlNet-Hough model, either through coding or via a direct UI. We also discussed how to leverage the search and filter features in AIModels.fyi to find similar models and compare their outputs, allowing us to broaden our horizons in the world of AI-powered image transformation.

I hope this guide has inspired you to explore the creative possibilities of AI and bring your imagination to life. Don't forget to subscribe for more tutorials, updates on new and improved AI models, and a wealth of inspiration for your next creative project. For more guides and resources, check out the notes. You can also follow my adventures on Twitter. Happy image enhancing and exploring the world of AI with AIModels.fyi!

