Lemur: Training Language Models to Be Better Coding Agents

A new model combines language and code abilities into a single system to create a better coding agent.

Artificial intelligence has made astounding progress recently, with large language models like GPT-3, PaLM, and ChatGPT demonstrating remarkably human-like abilities in natural conversation. However, excelling at chit-chat is not sufficient: we aspire to develop AI systems that can act effectively in the world as capable, autonomous agents. This requires models that not only comprehend language but can also execute complex actions grounded in diverse environments.

A new paper from researchers at the University of Hong Kong introduces Lemur and Lemur-Chat, open-source language models (the code is available on GitHub) designed to balance conversational and coding capabilities. This combination lets the models follow free-form instructions, use tools, adapt based on feedback, and operate more effectively as agents across textual, technical, and even simulated physical environments.

Subscribe or follow me on Twitter for more content like this!

The Evolution from Language Model to Language Agent

For AI systems to evolve from conversant bots like ChatGPT to fully-fledged agents that can get things done, they need to master three key faculties - human interaction, reasoning, and planning. Modern LLMs have shown remarkable progress in the first two dimensions through their natural language prowess. However, planning and executing actions in messy real-world environments also requires grounding in technical contexts.

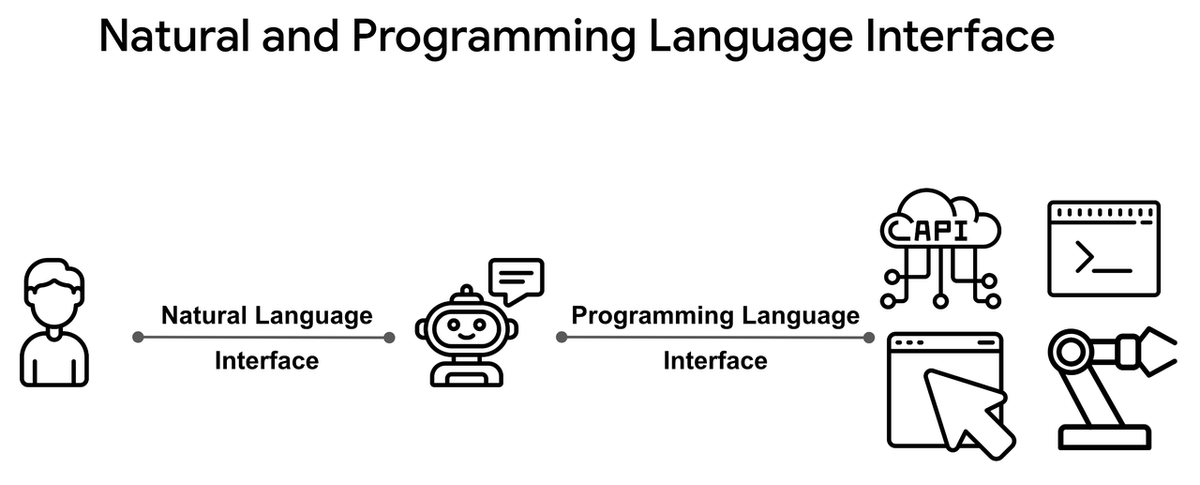

Humans fluidly (well... sometimes fluidly) combine natural communication with symbolic programming languages to coordinate objectives and precision in complex scenarios. We discuss objectives in free-flowing natural speech but rely on languages like Python when intricate planning and precise execution are needed. AI agents need to harness both capacities effectively too.

Unfortunately, most existing LLMs specialize in either language or code rather than combining the two. Popular conversational models like LLaMA and Claude are pretrained mostly on text, while coding-focused alternatives like Codex offer weaker natural-language interaction. This limits their versatility as agents.

The researchers identify this gap and highlight the importance of unifying natural and programming language abilities. They introduce Lemur as an attempt to balance both forces within a single open-source LLM.

Related reading: Microsoft Researchers Announce CodePlan: Automating Complex Software Engineering Tasks with AI

Pretraining Unifies Language and Code

Lemur begins with LLaMA-2, an open-source LLM from Meta. The base model underwent continued pretraining on roughly 90 billion tokens blending code and natural language, with code outnumbering text by about 10:1. This boosted its coding capabilities significantly while retaining language fluency.
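The arithmetic behind a fixed data mixture is simple, and a sampler makes it concrete. The sketch below assumes the 10:1 figure is code-to-text (the Lemur paper describes a code-heavy corpus) and, for simplicity, that every document has the same length, so sampling by document approximates sampling by token. The function names `token_split` and `mixed_stream` are hypothetical and not taken from the Lemur codebase.

```python
import random
from typing import Iterator, Sequence, Tuple


def token_split(total_tokens: float, code_to_text: float) -> Tuple[float, float]:
    """Split a token budget into (code, text) shares for a given code:text ratio."""
    code = total_tokens * code_to_text / (code_to_text + 1)
    return code, total_tokens - code


def mixed_stream(code_docs: Sequence[str], text_docs: Sequence[str],
                 code_to_text: float, n: int, seed: int = 0) -> Iterator[Tuple[str, str]]:
    """Yield n (source, document) pairs, picking code with probability r / (r + 1).

    Assumption: documents are equal length. Real pipelines usually weight by
    token count rather than document count.
    """
    rng = random.Random(seed)
    p_code = code_to_text / (code_to_text + 1)
    for _ in range(n):
        if rng.random() < p_code:
            yield "code", rng.choice(code_docs)
        else:
            yield "text", rng.choice(text_docs)


code, text = token_split(90e9, 10)
print(f"{code / 1e9:.1f}B code, {text / 1e9:.1f}B text")  # 81.8B code, 8.2B text
```

At a 10:1 ratio, roughly ten out of every eleven sampled documents come from the code pool.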

The pretraining corpus incorporated diverse textual data from Wikipedia, news, webpages, and books. For programming content, it featured Python, SQL, Bash, and other scripting languages drawn from code repositories like GitHub. The data was carefully deduplicated to improve quality.
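The article does not say which deduplication method was used. As a minimal sketch of the simplest variant, exact-match deduplication, the snippet below hashes a lightly normalized copy of each document and keeps only the first occurrence. The normalization rule is an assumption: it strips trailing whitespace but keeps case and indentation, because both matter in code. Production pipelines often add near-duplicate detection (for example MinHash), which this sketch does not attempt.

```python
import hashlib
from typing import Iterable, List


def normalize(doc: str) -> str:
    """Strip trailing whitespace per line and surrounding blank lines.

    Case and indentation are preserved because they are significant in code.
    """
    lines = [line.rstrip() for line in doc.strip("\n").splitlines()]
    return "\n".join(lines)


def dedup_exact(docs: Iterable[str]) -> List[str]:
    """Keep the first document for each distinct normalized SHA-256 digest."""
    seen = set()
    kept = []
    for doc in docs:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            kept.append(doc)
    return kept


docs = ["def f():\n    return 1\n", "def f():   \n    return 1", "def g(): pass"]
print(len(dedup_exact(docs)))  # 2: the second entry differs only by trailing spaces
```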

This pretraining regimen resulted in Lemur - an LLM with more balanced mastery of both natural and programming languages compared to existing alternatives.

Instruction Tuning Optimizes Following Directions

After pretraining Lemur, the researchers further optimized it to follow instructions through instruction tuning, a technique that trains models on examples of free-form natural-language instructions paired with good responses.

The instruction tuning compiled 300,000 examples from diverse sources including crowdsourced human conversations, ChatGPT dialogs, reasoning tasks solved by GPT-4, and programming solutions generated by instructing ChatGPT.
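Instruction tuning is commonly implemented as supervised fine-tuning where the loss is computed only on the response, not on the instruction. The sketch below illustrates that idea with a toy whitespace tokenizer and a made-up chat template (`<|user|>`, `<|assistant|>`). Lemur-Chat's actual template and tokenizer are not described in this article, so every name here is hypothetical. Prompt positions receive the label -100, the value PyTorch's cross-entropy loss ignores by default.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

IGNORE_INDEX = -100  # default ignore_index of torch.nn.CrossEntropyLoss


@dataclass
class Example:
    source: str       # hypothetical tag for the originating dataset
    instruction: str
    response: str


def tokenize(text: str, vocab: Dict[str, int]) -> List[int]:
    """Toy whitespace tokenizer that assigns new ids on first sight."""
    return [vocab.setdefault(tok, len(vocab)) for tok in text.split()]


def build_example(ex: Example, vocab: Dict[str, int]) -> Tuple[List[int], List[int]]:
    """Return (input_ids, labels) with the prompt masked out of the loss."""
    # Hypothetical chat template wrapping the instruction.
    prompt_ids = tokenize(f"<|user|> {ex.instruction} <|assistant|>", vocab)
    response_ids = tokenize(ex.response, vocab)
    input_ids = prompt_ids + response_ids
    # Only response tokens carry real labels; prompt tokens are ignored.
    labels = [IGNORE_INDEX] * len(prompt_ids) + response_ids
    return input_ids, labels


vocab: Dict[str, int] = {}
ids, labels = build_example(Example("orca", "add 2 and 3", "5"), vocab)
print(ids)
print(labels)
```

Examples from every source share one format, so a single fine-tuning run can mix conversational, reasoning, and coding data.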

Fine-tuning on this multi-domain instructional data resulted in Lemur-Chat - a more capable instruction-following agent ready for complex language-based control.

Results: Mastering Both Language and Code Benchmarks

Comprehensive evaluations on 8 language and coding benchmarks confirmed Lemur's more balanced mastery of both domains compared to specialized alternatives.

Lemur exceeded LLaMA-2, which focuses on language, by 4.3% overall across textual and coding tests. Crucially, it also surpassed Codex, OpenAI's coding-centric model, by 1.9% overall despite Codex's programming focus.

This demonstrates how Lemur strikes an effective balance instead of over-indexing on one domain. The harmonization empowers it to handle diverse tasks needing both fluent language and precise programmatic control.

Lemur-Chat further improved on Lemur by an additional 14.8%, showcasing the benefits of instruction tuning for agent abilities.

Agent Tests Confirm Versatility Across Environments

While classical benchmarks provide insights, the researchers also assembled more realistic agent tests to assess model capabilities. These span diverse scenarios demanding reasoning, tool usage, debugging, feedback handling, and exploration of unknown environments.

Lemur-Chat excelled in 12 out of 13 agent evaluations, outperforming specialized conversational (LLaMA) and coding (Codex) counterparts. Key findings:

- Tool use: Lemur-Chat made better use of Python interpreters and Wikipedia search to support its reasoning than the other models.

- Debugging: It made the best use of error messages to fix and refine code.

- Feedback handling: Lemur-Chat improved the most when given natural language advice.

- Exploration: The model surpassed alternatives in simulated cybersecurity, web browsing, and home navigation challenges requiring incremental exploration.
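The tool-use, debugging, and feedback results all rest on the same interaction pattern: the model proposes an action, the environment returns an observation (program output or an error message), and the model revises. Below is a minimal sketch of that loop, assuming a Python interpreter is the only tool. `propose` is a hypothetical stand-in for a call to a model such as Lemur-Chat and is scripted here so the example runs offline. This is not the evaluation harness used in the paper, and running code in a subprocess is not a security sandbox.

```python
import subprocess
import sys
from typing import Callable, List, Tuple


def run_python(code: str, timeout: float = 10.0) -> Tuple[bool, str]:
    """Run code in a fresh interpreter; return (success, stdout or stderr)."""
    proc = subprocess.run([sys.executable, "-c", code],
                          capture_output=True, text=True, timeout=timeout)
    if proc.returncode == 0:
        return True, proc.stdout
    return False, proc.stderr


def debug_loop(propose: Callable[[List[str]], str],
               max_turns: int = 3) -> Tuple[bool, List[str]]:
    """Ask `propose` for code, run it, and feed each result back as context."""
    history: List[str] = []
    for _ in range(max_turns):
        code = propose(history)
        ok, output = run_python(code)
        status = "ok" if ok else "error"
        history.append(f"CODE:\n{code}\nRESULT ({status}):\n{output}")
        if ok:
            return True, history
    return False, history


def scripted_propose(history: List[str]) -> str:
    """Hypothetical stand-in for a model: fixes its code after seeing a NameError."""
    if history and "NameError" in history[-1]:
        return "answer = 21 * 2\nprint(answer)"
    return "print(answer)"


ok, history = debug_loop(scripted_propose)
print(ok)  # True: the second attempt succeeds after the error is fed back
```

Swapping `run_python` for another tool (for example, a search function) keeps the same propose, observe, and revise structure.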

The consistent versatility highlights Lemur's potential as a general-purpose agent adept at higher-level reasoning, lower-level programmatic control, and handling messy real-world environments.

Closing the Gap Between Open Source and Proprietary

Lemur-Chat matched or exceeded the performance of commercial models like GPT-3.5 across most benchmarks. This narrows the gap between open-source and proprietary agents.

The researchers attribute Lemur's flexibility across diverse agent challenges to its balanced language-coding foundation. They argue that unlocking such versatility requires unifying natural and programming language mastery within a single model.

By open-sourcing Lemur and Lemur-Chat, the team aims to promote more research into capable multi-purpose agents. Releasing the code also lets others customize the models for specialized use cases.

The Road Ahead for Language Agent Research

This research provides valuable insights into training more capable real-world AI agents. The results show the efficacy of pretraining on blended textual and programming data followed by multi-domain instruction tuning.

Lemur offers an open-source springboard to develop assistants that integrate fluid human conversation with precise technical control. Such cohesion mirrors how humans combine natural speech and symbolic languages for complex coordination.

With platforms like Lemur, the evolution from conversational bots to competent, generalist agents can accelerate. Significant room for improvement remains, but by sharing an effective training recipe built on blended language-coding foundations, this study lights the path ahead.

The ultimate vision is AI agents that can understand free-form instructions in natural language, reason about appropriate responses, plan context-aware actions, and execute them reliably in diverse unpredictable environments. Lemur brings this goal one step closer.

