Microsoft Researchers Announce CodePlan: Automating Complex Software Engineering Tasks with AI

Automating complex software engineering tasks with AI: A deep dive into CodePlan.

Software engineering often involves making widespread changes across an entire codebase. Tasks like migrating to a new database system, updating to a new API, or fixing bugs that span multiple files require developers to make many intricate, interdependent code edits. Researchers refer to these as "repository-level" coding tasks, and they present a major challenge in software development today.

These repository-level tasks are extremely complex, error-prone, and time-consuming to perform manually. However, recent advances in AI and natural language processing may provide a solution. In a recent paper, researchers from Microsoft propose a new system called CodePlan that automates repository-level coding tasks by orchestrating a large language model.

In this post, I'll summarize CodePlan's approach and key findings. I'll also dive deeper into the motivation behind this work, the details of CodePlan, results from its evaluation, why this matters for software engineering, limitations, and directions for future work. Let's get started!

The Challenge of Repository-Level Coding Tasks

To understand the motivation behind CodePlan, we first need to appreciate the difficulties posed by repository-level coding tasks. As mentioned, these tasks require making changes that touch many parts of a codebase.

For example, say you want to migrate your project from Logging Framework A to the newer Logging Framework B. Framework B has a different API, so you'll need to update every place in your code that logs to call Framework B's classes and methods instead. This requires meticulously finding and editing all logging code across potentially hundreds of files in your repository.

Other examples are updating your ORM framework, switching out a utility library, upgrading API endpoints, or addressing breaking changes from library updates. In each case, you need to propagate changes across multiple components and files, while maintaining consistency and correctness.

This is extremely tedious and error-prone to do manually. You need to track down every instance of the code you want to change, make sure edits don't break dependencies or cause bugs, and test thoroughly. It's easy to miss spots that need to be updated, make mistakes, or introduce new issues.
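
To see why a purely mechanical approach is fragile, here is a minimal Python sketch of a naive regex find-and-replace for the logging migration above. The names `loggerA`, `loggerB`, `Log`, and `WriteLog` are illustrative assumptions and do not belong to a real library. The sketch rewrites the obvious call site but silently misses a call made through an alias:

```python
import re

# Hypothetical APIs from the logging example (not a real library):
# Framework A exposes loggerA.Log(msg); Framework B exposes loggerB.WriteLog(msg).
NAIVE_PATTERN = re.compile(r"\bloggerA\.Log\(")


def naive_migrate(source: str) -> str:
    """Rewrite literal `loggerA.Log(` call sites to `loggerB.WriteLog(`."""
    return NAIVE_PATTERN.sub("loggerB.WriteLog(", source)


example = """\
loggerA.Log("starting")
log = loggerA            # alias created elsewhere in the codebase
log.Log("still using Framework A")
"""

migrated = naive_migrate(example)
print(migrated)
```

The first call is migrated, but `log.Log(...)` is left pointing at Framework A. Catching it depends on knowing what `log` refers to, and that requires semantic information about the code, which text matching cannot supply.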

Performing these coordinated changes across large, real-world codebases is a major pain point in software development. It's a perfect example of a complex "outer loop" coding task that could benefit enormously from automation.

CodePlan: Automating Repository Tasks with LLMs and Planning

This is where CodePlan comes in. The researchers recognized that large language models like GPT-4, while extremely capable, struggle to solve repository-level coding tasks directly. The entire codebase is often too large to fit into the model's prompt, and LLMs have no built-in notion of planning or of keeping the code valid as they make changes.

So the authors devised a method to decompose the overall repository task into incremental steps. The LLM handles what it does well, making localized edits to small pieces of code, while rigorous planning and analysis decide where those edits should go.

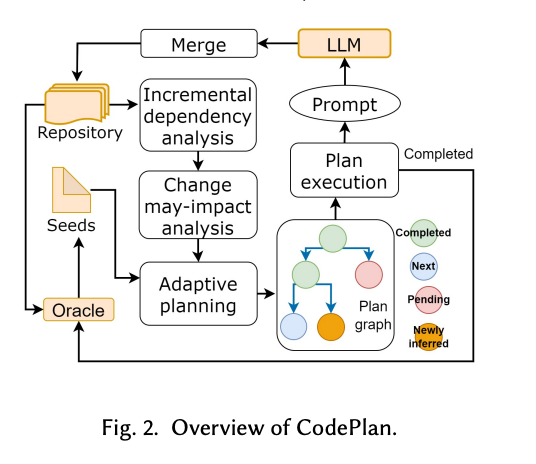

Here is how CodePlan works at a high level:

- Start with some "seed edit specifications" describing the initial changes required. For our logging example, this might be to replace `loggerA.Log(msg)` with `loggerB.WriteLog(msg)`.

- Make these seed edits across the codebase by prompting the LLM on small code snippets.

- After each edit, perform static analysis to incrementally update syntactic and semantic dependency information for the code.

- Determine what other blocks of code each edit might impact based on the dependencies.

- Use this impact analysis to adaptively plan the next set of edits required.

- Continuously edit and analyze code blocks guided by the plan until all required changes are made.
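
The loop described above can be sketched in a few dozen lines of Python. This is a toy illustration, not CodePlan's implementation, and it rests on several assumptions. Code blocks are whole functions keyed by name. The "static analysis" is a substring check for `name(`. And `fake_llm_edit` is a hard-coded stand-in for a prompted LLM. What the sketch does capture is the adaptive part: every edit triggers an impact check, and only blocks that depend on the edited one are scheduled next.

```python
from collections import deque
from typing import Callable, Dict, List, Tuple

# Toy repository: each code block is one function, keyed by its name.
Repo = Dict[str, str]
Obligation = Tuple[str, str]  # (block name, edit instruction)


def dependents_of(repo: Repo, name: str) -> List[str]:
    """Crude stand-in for static analysis: a block depends on `name`
    if its code contains the text `name(` (excluding the block itself)."""
    return sorted(other for other, code in repo.items()
                  if other != name and f"{name}(" in code)


def plan_and_edit(repo: Repo, seeds: List[Obligation],
                  llm_edit: Callable[[str, str], str]) -> List[str]:
    """Apply seed edits, then follow their impact through dependent blocks.
    Mutates `repo` in place and returns the blocks that were actually edited."""
    pending = deque(seeds)
    edited: List[str] = []
    while pending:
        block, instruction = pending.popleft()
        old_code = repo[block]
        new_code = llm_edit(old_code, instruction)  # the prompt sees one small block
        if new_code == old_code:
            continue  # nothing changed, so nothing downstream is impacted
        repo[block] = new_code
        edited.append(block)
        # Impact analysis on the updated repository: schedule every dependent.
        for dep in dependents_of(repo, block):
            pending.append((dep, f"adapt to the change in {block}"))
    return edited


def fake_llm_edit(code: str, instruction: str) -> str:
    """Hard-coded stand-in for an LLM call, specific to this example."""
    if instruction == "add a scale parameter to area":
        return code.replace("def area(w, h):\n    return w * h",
                            "def area(w, h, scale):\n    return w * h * scale")
    # Follow-up obligations: update old-style calls to pass scale=1.
    return code.replace("area(w, h)", "area(w, h, 1)")


repo = {
    "area": "def area(w, h):\n    return w * h\n",
    "room": "def room(w, h):\n    return area(w, h)\n",
    "house": "def house():\n    return room(3, 4) + room(2, 5)\n",
    "unrelated": "def unrelated():\n    return 42\n",
}
edited = plan_and_edit(repo, [("area", "add a scale parameter to area")],
                       fake_llm_edit)
print(edited)  # ['area', 'room']
```

The seed edit to `area` schedules `room`, and editing `room` in turn schedules `house`. The stand-in LLM leaves `house` unchanged because `room`'s own signature did not change, so propagation stops there and `unrelated` is never considered. A real system would also need to decide which kinds of change actually matter to dependents, rather than re-checking every dependent after every edit.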

So in essence, CodePlan leverages the localization strengths of large language models for making edits. But it directs these edits to maintain validity across the full repository by tracking impacts and planning new steps based on dependency analysis.

This allows it to automate complex coding tasks at which naive use of an LLM would fail. The planning and analysis also allow CodePlan to handle very large codebases that would not fit entirely into a model prompt.
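
One way to keep each prompt small is to include only the block being edited plus the most closely related blocks, stopping at a size budget. The following is a hypothetical sketch of that idea, not CodePlan's actual context extraction. `build_prompt_context` and the `neighbors` map are illustrative names, and characters stand in as a rough proxy for tokens:

```python
from collections import deque
from typing import Dict, List, Set


def build_prompt_context(target: str, code: Dict[str, str],
                         neighbors: Dict[str, Set[str]],
                         budget_chars: int) -> List[str]:
    """Pick the target block plus related blocks, nearest first, within a budget.

    `neighbors` maps a block to the blocks it is related to (callers, callees,
    and so on); characters are used as a rough proxy for prompt tokens.
    """
    chosen = [target]  # the block being edited is always included
    used = len(code[target])
    seen = {target}
    queue = deque([target])
    while queue:  # breadth-first: closer dependencies are considered first
        current = queue.popleft()
        for nxt in sorted(neighbors.get(current, set())):
            if nxt in seen:
                continue
            seen.add(nxt)
            queue.append(nxt)
            size = len(code[nxt])
            if used + size <= budget_chars:  # skip blocks that would overflow
                chosen.append(nxt)
                used += size
    return chosen


code = {"a": "x" * 40, "b": "y" * 30, "c": "z" * 50, "d": "w" * 10}
neighbors = {"a": {"b", "c"}, "b": {"d"}}
print(build_prompt_context("a", code, neighbors, budget_chars=85))  # ['a', 'b', 'd']
```

Block `c` is skipped because it would push the context over budget, but the search continues, so the smaller, more distant block `d` still fits.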

Evaluating CodePlan on Real-World Tasks

The researchers evaluated CodePlan on two challenging repository-level coding tasks:

API Migration

This involves adapting a codebase to changes in an external library or tool. Examples are migrating from one unit testing framework to another or upgrading to a new version of a utility library.

Temporal Code Edits

This requires making a sequence of connected code changes that follow from some initial seed edits. A typical example is refactoring a function signature and then propagating that change to its callers.
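
In Python, the first step of propagating a signature change can be sketched with the standard-library `ast` module: locate the call sites that are now stale. The function `resize` and its new `height` parameter are hypothetical. The sketch only inspects direct calls by name and counts positional arguments, so calls through aliases, method calls, and keyword arguments are out of scope:

```python
import ast
from typing import List, Tuple


def find_call_sites(source: str, func_name: str) -> List[Tuple[int, int]]:
    """Return (line, number_of_positional_args) for each direct call to func_name."""
    sites = []
    for node in ast.walk(ast.parse(source)):
        if (isinstance(node, ast.Call)
                and isinstance(node.func, ast.Name)
                and node.func.id == func_name):
            sites.append((node.lineno, len(node.args)))
    return sorted(sites)


source = """\
def resize(image, width, height):   # signature just gained `height`
    return (image, width, height)

thumb = resize("cat.png", 64)
banner = resize("logo.png", 800, 200)
"""

# Calls that still pass only two positional arguments need a follow-up edit.
stale = [line for line, nargs in find_call_sites(source, "resize") if nargs < 3]
print(stale)  # [4]
```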

For both tasks, they tested CodePlan on proprietary Microsoft repositories and popular open-source GitHub projects ranging from thousands to over 15,000 lines of code. Some required edits to as many as 168 files.

They compared CodePlan against baseline methods like attempting to use LLM edits alone without planning (for API migration) and purely reactive approaches that just fix errors as they arise (for temporal edits).

The results demonstrated CodePlan's strengths:

- It identified 2-3x more correct code blocks to modify compared to baselines.

- The final code edits aligned significantly better with the ground truth changes.

- CodePlan produced valid final code repositories without build issues. The baselines often had many errors.

- It required far fewer overall edits to complete tasks thanks to planning.
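
A claim like "more correct code blocks" presupposes some block-level comparison against the ground-truth change. The paper's exact metrics are not reproduced here. As a hypothetical illustration, a simple comparison could count matched, missed, and spurious blocks using set operations. The block names below are made up, and judging how closely each edit's content matches the ground truth would require a separate text-similarity measure:

```python
from typing import Dict, Set


def compare_edited_blocks(predicted: Set[str],
                          ground_truth: Set[str]) -> Dict[str, int]:
    """Compare which blocks a tool edited against which blocks a reference
    change edited. This only looks at where edits happened, not their content."""
    return {
        "matched": len(predicted & ground_truth),   # edited, and should have been
        "missed": len(ground_truth - predicted),    # should have been edited, was not
        "spurious": len(predicted - ground_truth),  # edited, but should not have been
    }


ground_truth = {"Parser.parse", "Lexer.next", "Cli.main", "Config.load"}
predicted = {"Parser.parse", "Lexer.next", "Utils.trim"}
print(compare_edited_blocks(predicted, ground_truth))
# {'matched': 2, 'missed': 2, 'spurious': 1}
```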

So on real-world repositories and tasks, CodePlan succeeded where less sophisticated application of LLMs failed.

Why Repository-Level Automation Matters

Automating complex repository-level coding tasks would have major benefits:

- Developer productivity: These tasks are time-consuming and drain developer time. Automating them allows devs to focus on higher-level problems.

- Code quality: Humans make mistakes, especially during large-scale changes. Automation reduces errors.

- Reliability: Repository tasks like migrations are often hard to test thoroughly. Bugs can creep in. AI assistance improves reliability.

- Accessibility: Junior devs often avoid big codebase changes. Automation makes tasks more accessible.

- Cost: Maintaining and updating legacy systems requires significant resources. Intelligent automation cuts costs.

So automating challenging coding tasks has the potential to both improve developer productivity and software quality/reliability.

CodePlan also expands AI's impact into complex "outer loop" development challenges, beyond assisting with localized coding. Intelligently combining language model capabilities with rigorous planning and analysis opens up new possibilities.

Limitations and Future Work

The CodePlan authors acknowledge some key limitations in the current research:

- It relies on static analysis for tracking code dependencies and impacts. Dependencies that only arise at runtime are not captured, which limits the tasks it can handle.

- Evaluation was limited to a small set of repositories and tasks. More extensive testing on additional languages, codebases, and challenges is needed.

- It focuses on editing one code block at a time serially. Techniques for parallel multi-block editing could help in some cases.

- It uses only source code files. Expanding to other artifacts, such as configuration files and dependency manifests, would make it more robust.

- No customization of the change analysis was done. Incorporating task-specific impact rules could improve planning decisions.
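
The static-analysis limitation is easy to demonstrate. The hypothetical sketch below collects the names of functions that are called directly by name, which is roughly what a simple call-graph pass sees. The function `export` chooses its handler at runtime by building a name string, so the dependency on `export_json` never appears. A change to `export_json`'s signature would therefore not be propagated to that call:

```python
import ast
from typing import Set


def statically_called_names(source: str) -> Set[str]:
    """Names of functions called directly by name, as a simple static pass sees them."""
    return {node.func.id for node in ast.walk(ast.parse(source))
            if isinstance(node, ast.Call) and isinstance(node.func, ast.Name)}


source = """\
def export_csv(rows): ...
def export_json(rows): ...

def export(rows, fmt):
    handler = globals()["export_" + fmt]   # target chosen at runtime
    return handler(rows)

export_csv([])
"""

print(sorted(statically_called_names(source)))  # ['export_csv', 'globals', 'handler']
```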

To address these limitations and build on the promising CodePlan approach, the researchers identified several areas for future work:

- Expanding the framework to more languages, artifacts, and software projects

- Enhancing the dependency graph to model dynamic relationships like dataflow

- Exploring ways to edit multiple related code blocks in parallel

- Customizing the analysis and planning stages for specific task types

- Combining rule-based techniques with learned models for improved change planning

Conclusion

In this post, we covered CodePlan - an AI system for automating complex repository-level coding tasks. CodePlan shows how intelligently combining language models with rigorous planning and analysis can expand AI's impact to challenging software development workflows.

The results demonstrate that CodePlan can successfully automate tricky tasks like API migrations and temporal code edits across real-world codebases where less sophisticated applications of LLMs fail.

While limitations remain, I'm excited by the possibilities this unlocks. Automating rote coding work allows developers to focus on high-value problems. And improving productivity and software quality through AI has enormous benefits for delivering better technical products.

Repository-level automation has been a major blind spot for AI in software engineering until now. CodePlan offers a promising path to expand AI's advantages into this crucial domain. I can't wait to see what the future holds as this research continues!
