Towards zero-shot NeRFs: New HyperFields method generates 3D objects from text

Training AI to generate 3D objects from text descriptions

Recent breakthroughs in natural language processing have enabled AI systems to generate 2D images from text prompts with striking realism and detail. But extending these capabilities to 3D content creation remains extremely challenging. A new technique called HyperFields demonstrates promising progress towards flexible, efficient 3D geometry generation directly from language descriptions.

Subscribe or follow me on Twitter for more content like this!

The Broader Context

Photorealistic image generation from text prompts has rapidly advanced thanks to diffusion models like DALL-E 2 and stable diffusion. These leverage enormous datasets of image-text pairs to learn mappings between language and 2D image distributions.

However, far less 3D data is available compared to 2D images. And modeling the complexities of real-world 3D geometry is fundamentally more difficult than 2D images. As a result, text-to-3D generation remains a major unsolved problem in AI research despite intense interest.

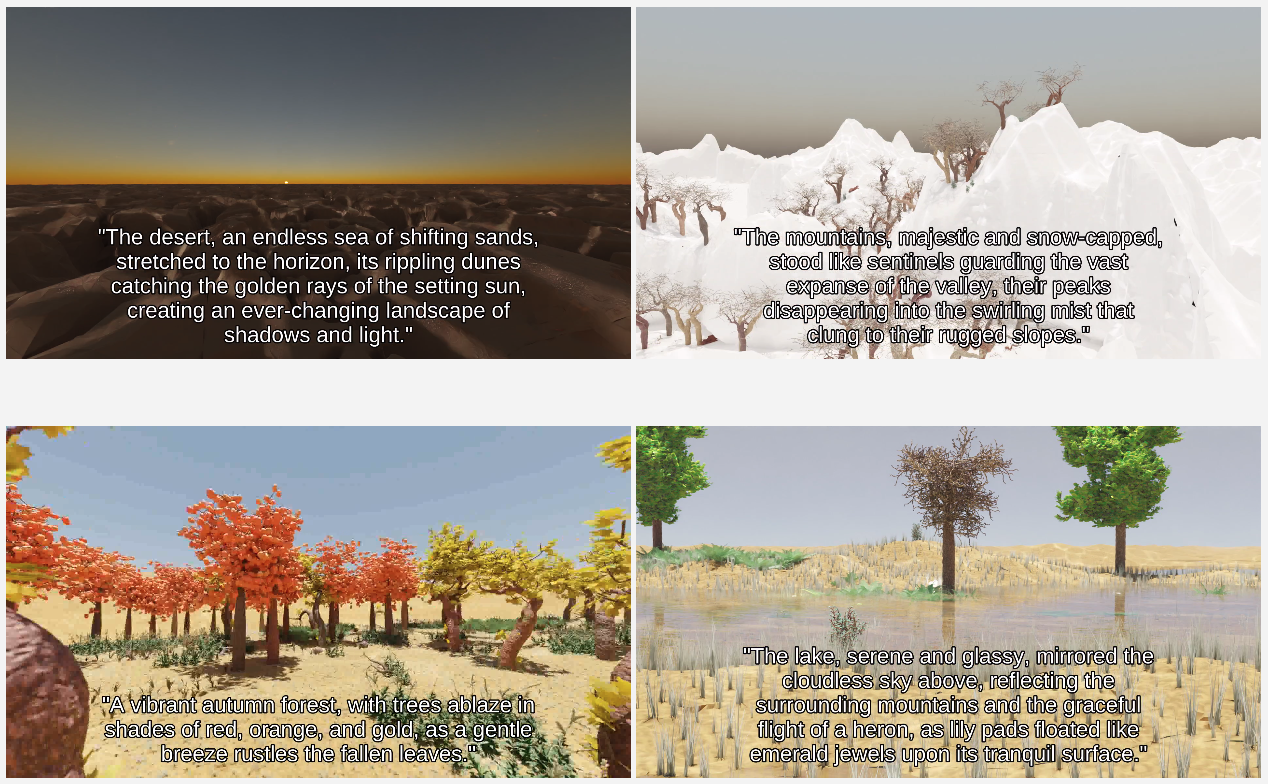

Solving this challenge could enable creators to manifest 3D objects, scenes, or even virtual worlds using only their imagination and language descriptions. This could dramatically expand 3D content creation for applications like gaming, CGI, VR/AR, and design.

Technical Background

A popular 3D representation used in generative modeling is neural radiance fields (NeRFs). NeRFs encode scenes as neural networks that output the color and density of 3D points viewed from arbitrary camera angles. They can represent complex geometry and appearance effects.

Recent text-to-3D methods use iterative optimization to guide NeRF generation based on text prompts. But optimizing a full NeRF from scratch for each new prompt is extremely slow, taking hours or days per 3D object.

HyperFields aims to learn a general mapping between text embeddings and NeRF geometry. If successful, this approach could generate NeRFs for new text prompts efficiently in a single forward pass, without slow optimization.

The HyperFields Approach

HyperFields combines two key technical innovations to accomplish fast, flexible text-to-3D generation:

- Dynamic Hypernetwork - This overarching neural network takes in text embeddings and progressively predicts the weights for each layer of a separate "child" NeRF network. Crucially, the weight predictions are conditioned not only on the text, but also on the previous activations within the NeRF layers themselves. This contextual conditioning allows the hypernetwork to specialize the geometry generation based on the interim outputs.

- NeRF Distillation - First, individual NeRFs are pretrained on specific text prompts using existing techniques. Then, the weights of those NeRFs are distilled into the hypernetwork through a novel loss function. This provides dense training signal to learn the complex text-to-NeRF mapping across a variety of distinct objects like "blue chair."

Results

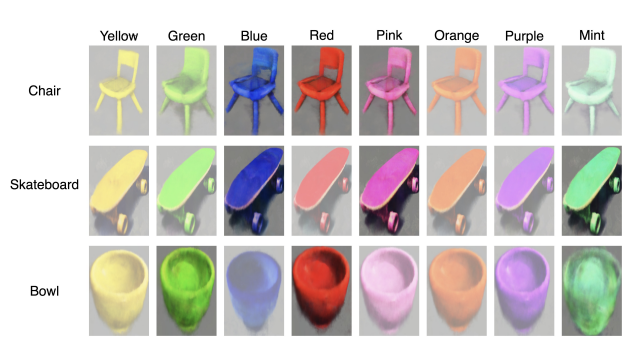

Experiments demonstrate that HyperFields can:

- Encode over 100 distinct objects like "yellow vase" within a single model

- Generalize to new combinations like "orange toaster" without seeing that exact prompt before

- Rapidly adapt to generate completely new objects like "golden blender" with minimal fine-tuning

These capabilities exceeded previous state-of-the-art methods by 5-10x in terms of sample efficiency and wall-clock convergence time.

Qualitative assessments also show HyperFields can produce realistic 3D geometries matching text descriptions, with proper shapes and attributes. This suggests it effectively learns some semantic correlations between language and 3D forms.

Limitations and Future Work

While promising, HyperFields does have some limitations:

- Still requires a small number of optimization steps for completely new objects

- Relies on existing 2D guidance systems which limits output flexibility

- Vocabulary and shape diversity is constrained to the training data

- Generated geometry lacks very fine-scale details for now

Ongoing research aims to overcome these limitations by expanding the diversity and scale of training data, iterating on the model architecture, and replacing 2D guidance with more flexible 3D supervision.

The ultimate goal is to create a model that can conjure any imaginable 3D object or scene from language alone, with no constraints on vocabulary or geometry. We are still far from that goal but techniques like HyperFields represent encouraging steps in the right direction.

Conclusion

Text-to-3D generation remains an extremely challenging problem at the frontiers of AI research. Recent methods relying on costly per-example optimization have proven too slow and inflexible for practical use. HyperFields has a promising approach based on learning a generalized mapping from language to 3D geometry representations. This paradigm demonstrates faster, more flexible 3D content creation from text prompts across a variety of objects. While there are some limitations, improvements to the technique could unlock text-to-3D capabilities that expand the creative potential of millions of designers, artists, and creators worldwide. Very promising!

Subscribe or follow me on Twitter for more content like this!

Comments ()