3D-GPT: A new method for procedural Text-to-3D model generation

3D-GPT employs LLMs as a multi-agent system with three collaborative agents for procedural 3D generation

A new AI system called 3D-GPT uses large language models (LLMs) to generate 3D content from text, with promising results. It aims to streamline 3D content creation by interpreting natural-language instructions and controlling procedural generation tools to construct 3D assets automatically.

Let's take a look at what the researchers have managed to build and assess the impact of this paper.

Subscribe or follow me on Twitter for more content like this!

The Challenge of Automated 3D Content Creation

Producing 3D models and environments typically requires extensive human effort. Artists meticulously craft each 3D asset in tools like Blender, gradually shaping basic forms into intricate finished products. Some approaches automate parts of this process with procedural generation, where parameters and rule sets are tuned so that an algorithm produces the content. But defining these generators also demands a deep understanding of 3D modeling techniques.
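
To make the idea of a parameterized generator concrete, here is a minimal sketch of a toy procedural generator. The function `generate_flower_field` and its parameters are hypothetical illustrations, not part of 3D-GPT or Blender; the sketch only shows how a handful of tunable numbers plus a random seed can deterministically produce scene content.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Flower:
    x: float
    y: float
    height: float
    color: str


def generate_flower_field(seed: int, width: float, depth: float,
                          density: float, min_height: float, max_height: float,
                          colors: tuple[str, ...]) -> list[Flower]:
    """Scatter flowers over a width x depth rectangle (hypothetical generator).

    `density` is flowers per square unit. The same parameters and seed
    always produce the same layout, which is what makes it procedural.
    """
    rng = random.Random(seed)  # private RNG so results do not depend on global state
    count = round(width * depth * density)
    return [
        Flower(
            x=rng.uniform(0.0, width),
            y=rng.uniform(0.0, depth),
            height=rng.uniform(min_height, max_height),
            color=rng.choice(colors),
        )
        for _ in range(count)
    ]


field = generate_flower_field(seed=42, width=10, depth=5, density=2.0,
                              min_height=0.2, max_height=0.6,
                              colors=("red", "yellow"))
print(len(field))  # 100
```

Changing `density` or `colors` reshapes the whole field without anyone placing a flower by hand; knobs like these are what a language model could be asked to set.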

This has driven growing interest in using AI to learn and replicate human 3D construction abilities. Text-to-3D generation has emerged as a promising direction, exploring how to produce 3D content from textual descriptions. The hope is that AI systems might understand language well enough to match or even exceed human artistic skill.

How 3D-GPT Focuses on Language-Controlled Procedural Generation

3D-GPT tackles a subset of the text-to-3D challenge, focusing specifically on using language understanding to control existing procedural generation tools. This differs from other text-to-3D tools I've written about previously, like Shap-E and Point-E, which generate 3D outputs directly from trained generative models.

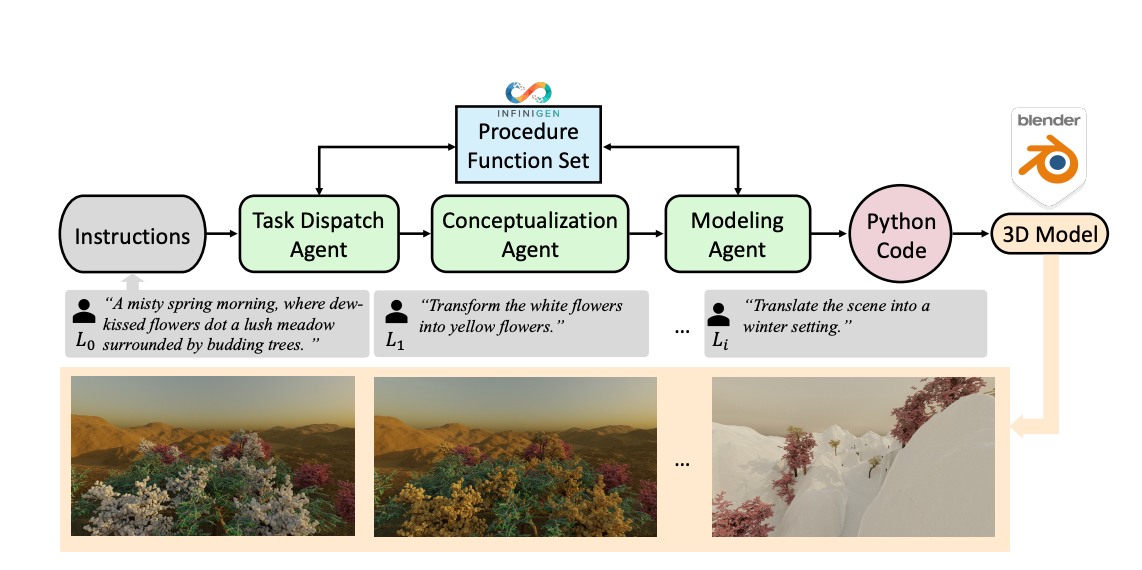

The system proposed in the paper comprises three main AI agents built using LLMs:

- A task dispatch agent analyzes input instructions and determines which procedural generator functions are necessary to produce the described scene or asset.

- A conceptualization agent enriches the initial text descriptions by inferring and adding extra detail about object appearance, materials, lighting, and other attributes.

- A modeling agent sets the parameters for selected generator functions based on the enriched text, and outputs Python code to interface with 3D software like Blender.
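
The division of labor among the three agents can be sketched as a simple pipeline. This is a toy illustration under loud assumptions: in 3D-GPT each step is an LLM prompt, whereas here hard-coded keyword rules stand in for those calls, and the generator names (`add_grass`, `add_flowers`, `add_trees`) and their default parameters are hypothetical.

```python
# Hypothetical generator registry: name -> (trigger keywords, default parameters).
GENERATORS = {
    "add_grass": ({"meadow", "grass", "lawn"}, {"height": 0.3}),
    "add_flowers": ({"flower", "flowers", "blossoms"}, {"density": 1.0, "color": "white"}),
    "add_trees": ({"tree", "trees", "forest"}, {"count": 5, "height": 8.0}),
}


def _words(text: str) -> set[str]:
    """Lowercase the text and split it into a set of bare words."""
    for mark in ",;.":
        text = text.replace(mark, " ")
    return set(text.lower().split())


def task_dispatch(instruction: str) -> list[str]:
    """Select the generator functions the instruction calls for (an LLM in 3D-GPT)."""
    words = _words(instruction)
    return [name for name, (keywords, _) in GENERATORS.items() if words & keywords]


def conceptualize(instruction: str) -> str:
    """Enrich a terse description with inferred detail (a hard-coded rule here)."""
    if "lush" in _words(instruction):
        return instruction + "; tall dense grass, many red and yellow flowers"
    return instruction


def model(enriched: str, functions: list[str]) -> dict[str, dict]:
    """Fill in parameters for the selected functions from cues in the enriched text."""
    words = _words(enriched)
    params = {name: dict(GENERATORS[name][1]) for name in functions}  # copy defaults
    if "add_grass" in params and "tall" in words:
        params["add_grass"]["height"] = 0.8
    if "add_flowers" in params:
        if words & {"dense", "many"}:
            params["add_flowers"]["density"] = 5.0
        for color in ("red", "yellow", "blue"):
            if color in words:
                params["add_flowers"]["color"] = color  # first match in this fixed order wins
                break
    return params


def run_pipeline(instruction: str) -> dict[str, dict]:
    functions = task_dispatch(instruction)
    enriched = conceptualize(instruction)
    return model(enriched, functions)


print(run_pipeline("lush meadow with flowers"))
```

Note that the dispatcher can only ever return names already in the registry: a request for something it has no generator for selects nothing, which mirrors the system's dependence on the procedural tools it is given.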

This approach aims to simplify 3D creation by having the AI agents parse instructions, understand what each procedural generator function does, infer the required parameter values, and automatically generate code that drives the 3D tools.
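
The last step, turning chosen parameters into code for the 3D tool, can be illustrated with a small code emitter. Assumptions: `procgen` is a hypothetical module name standing in for whatever procedural-generation library is loaded inside Blender, and the input is a made-up format mapping each generator function name to its keyword arguments. This does not reproduce 3D-GPT's actual prompts or output format.

```python
import ast


def emit_script(params: dict[str, dict], module: str = "procgen") -> str:
    """Render generator calls as Python source that a 3D tool could execute.

    `procgen` is a hypothetical module name, not a real Blender package.
    """
    lines = [f"import {module}", ""]
    for func, kwargs in params.items():
        # repr() quotes strings and writes numbers as Python literals.
        args = ", ".join(f"{key}={value!r}" for key, value in kwargs.items())
        lines.append(f"{module}.{func}({args})")
    script = "\n".join(lines) + "\n"
    ast.parse(script)  # raise SyntaxError here rather than inside the 3D tool
    return script


print(emit_script({
    "add_grass": {"height": 0.8},
    "add_flowers": {"density": 5.0, "color": "red"},
}))
```

Checking the generated source with `ast.parse` before handing it to Blender only catches syntax errors, not wrong parameter values, but it is a cheap safeguard whenever code comes from a language model.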

Assessing 3D-GPT's Results and Limitations

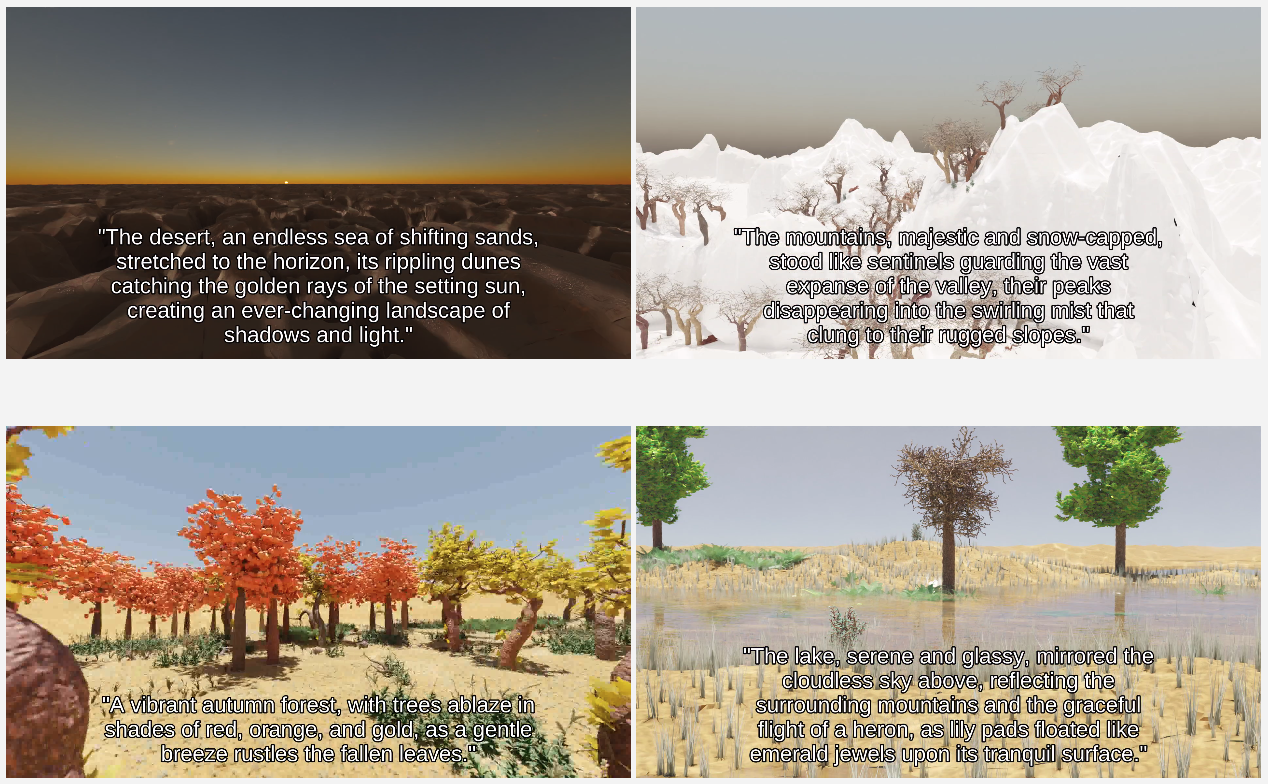

The researchers demonstrate 3D-GPT generating complete scenes that match short descriptions like "lush meadow with flowers." Given a sequence of instructions, it also modifies its outputs according to follow-up directives like "change flower colors." Quantitative metrics indicate higher similarity between outputs and text when the conceptualization and task dispatch agents are used, compared to ablations without them.
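
One simple way to support follow-up directives like "change flower colors" is to keep the scene as a set of generator parameters and patch only the affected entries on each turn. The sketch below assumes that representation, a hypothetical keyword table `TARGETS`, and edits phrased as "... <thing> <parameter> to <value>"; 3D-GPT itself interprets edits with its LLM agents, and this is not its internal state format.

```python
import copy

# Hypothetical mapping from nouns in an edit request to generator functions.
TARGETS = {
    "flower": "add_flowers", "flowers": "add_flowers",
    "grass": "add_grass",
    "tree": "add_trees", "trees": "add_trees",
}


def apply_edit(scene: dict[str, dict], instruction: str) -> dict[str, dict]:
    """Return a copy of `scene` with one parameter changed; other generators are untouched."""
    words = instruction.lower().split()
    updated = copy.deepcopy(scene)  # leave the previous state intact (e.g. for undo)
    target = next((TARGETS[w] for w in words if w in TARGETS), None)
    if target not in updated or "to" not in words:
        return updated  # nothing we can map onto the current scene
    param = next((p for p in updated[target] if p in words), None)
    value_index = words.index("to") + 1
    if param is None or value_index >= len(words):
        return updated
    old_value = updated[target][param]
    try:
        # Coerce the new value to the old value's type ("1.2" -> 1.2 for floats).
        updated[target][param] = type(old_value)(words[value_index])
    except ValueError:
        pass  # e.g. "to tall" for a numeric parameter: keep the old value
    return updated


scene = {"add_grass": {"height": 0.8}, "add_flowers": {"density": 5.0, "color": "red"}}
scene = apply_edit(scene, "change flower color to blue")
scene = apply_edit(scene, "set grass height to 1.2")
print(scene)
```

Returning a fresh copy on every turn keeps the edit history available, so an unwanted change can be undone by reverting to the previous state.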

However, these results also expose 3D-GPT's constraints. The system relies entirely on existing procedural algorithms, so its possible outputs are limited to the generators it has access to. It cannot handle more open-ended descriptions or make creative inferences about 3D assets. The outputs also lack the finer details, textures, and realistic imperfections that human artists could sculpt.

The examples focus on simpler scenes and geometry. For intricate curves and shapes like tree branches, it falls back on default primitives or captures only limited aspects, such as the tree's overall size. This likely stems from a lack of specialized 3D modeling knowledge or training data. That being said, I think some of the example generations shown do well enough!

While 3D-GPT is promising as an interface between LLMs and procedural generators, these factors limit its real-world usefulness. Its reliance on existing rules hinders the flexibility and quality that production 3D applications require.

I'll also note that the code for this project is not yet publicly available, but the authors plan to release it after the paper is accepted. They do have some great example video demonstrations on the project site.

Key Takeaways on AI Progress and Challenges

3D-GPT moves toward richer language-based control of procedural 3D generation, but it takes only the first steps on the path toward true mastery of modeling from textual descriptions. Core technical abilities like geometric reasoning and artistic insight are still lacking.

However, the competent tool use and planning it exhibits suggest that LLMs are gaining some capacity to tackle complex 3D tasks. With future advances, LLM-based systems could capture far more of the nuanced artistry and creativity that human modelers possess.

Research initiatives in areas like further scaling LLMs, training on 3D data, and expanding beyond text inputs provide promising routes to address current limitations. 3D-GPT offers a template for continued exploration of LLMs' emergent skills in understanding and operating within 3D environments. But substantial challenges remain to develop AI that can match, or perhaps one day surpass, human artistic prowess in modeling 3D worlds.
