A Plain English Guide to Reverse-Engineering Reddit's Source Code with LangChain, Activeloop, and GPT-4

Reverse-engineering Reddit's public source code with GPT-4, Activeloop, and LangChain's code-understanding tools

Imagine writing a piece of software that could understand code, assist with it, and even generate it, much as a seasoned developer would.

Well, that's possible with LangChain. By combining components such as VectorStores, the Conversational Retrieval Chain, and LLMs, LangChain takes code understanding and generation to a new level.

Subscribe or follow me on Twitter for more content like this!

In this guide, we will reverse-engineer Reddit's public source code repository for version 1 of the site to better understand the codebase and gain insight into its inner workings. I was inspired to write this guide after reading Paul Graham's tweet on the subject (and because I don't know anything about Lisp but still wanted to understand what he was talking about).

We'll use OpenAI's embeddings to turn the code into searchable vectors, Activeloop's Deep Lake to store them, and an LLM (GPT-4 in this case) to converse with the code. If you're interested in using another LLM or a different platform, check out my previous guide on reverse-engineering Twitter's algorithm using DeepInfra and Dolly.

When we're done, we'll be able to shortcut the hard work of understanding the codebase by asking an AI our most pressing questions, rather than spending weeks sifting through the code ourselves. Let's begin.

A Conceptual Outline for Code Understanding with LangChain

LangChain is a powerful tool for analyzing code repositories on GitHub. For this task it brings together three parts: a VectorStore, the Conversational Retrieval Chain, and an LLM (large language model). Together they help you understand code, answer questions about it in context, and even generate new code for GitHub repositories.

The Conversational Retrieval Chain finds and retrieves relevant information from a VectorStore. What sets it apart from a plain similarity search is that it takes the conversation history into account: it first combines your new question with the earlier exchanges to form a standalone question, then retrieves the code snippets most relevant to that question, and finally passes those snippets to the LLM to compose an answer. In simpler terms, it's like having a smart assistant that remembers what you've already asked and answers each new question in that context.

Now, let's look into the LangChain workflow and see how it works at a high level:

- Index the code base: First, clone the repository you want to analyze, load all of its files, split them into smaller chunks, and index them. If you already have an indexed dataset, you can skip this step.

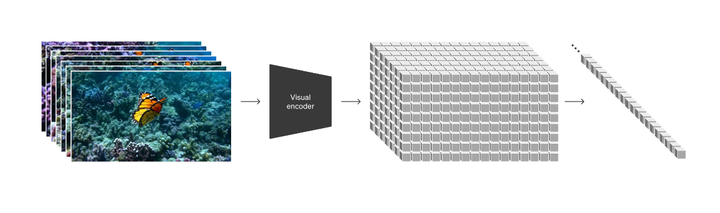

- Embedding and Code Store: LangChain uses an embedding model (here, OpenAI's) to convert each chunk into a vector that captures its meaning, then stores those vectors in a VectorStore so they can be searched quickly in future queries.

- Query Understanding: This is where your LLM comes into play. A model like GPT-4 interprets your questions, taking the conversation so far into account, so that LangChain can retrieve the right context and give you precise, relevant answers.

- Construct the Retriever: The retriever searches the VectorStore, where the code snippets are stored, for the snippets most relevant to your query. The search is configurable: you can adjust settings such as the similarity metric and the number of results, and apply filters specific to your needs.

- Build the Conversational Chain: Once the retriever is set up, you connect it to the LLM to form the Conversational Retrieval Chain. The chain runs each question, together with the conversation history, through the retriever and hands the retrieved snippets to the LLM, so tuning the retriever's settings directly shapes the answers you get.

- Ask questions: Now comes the exciting part! You can ask questions about the codebase using the Conversational Retrieval Chain. It will generate comprehensive and context-aware answers for you. Your LLM, being part of the Conversational Chain, takes into account the retrieved code snippets and the conversation history to provide you with detailed and accurate answers.

By following this workflow, you'll be able to effectively use LangChain to gain a deeper understanding of code, get context-aware answers to your questions, and even generate code snippets within GitHub repositories. Now, let’s see it in action, step by step.

Step-by-Step Guide

Let's dive into the actual implementation.

1. Acquiring the Keys

To get started, you'll need to register at the respective websites and obtain the API keys for Activeloop and OpenAI.
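Once you have both keys, a convenient pattern is to store them in a `.env` file next to your scripts so that `load_dotenv()` can pick them up. The sketch below assumes the variable names `OPENAI_API_KEY` and `ACTIVELOOP_TOKEN` and fails fast if either is missing, so you don't discover the problem halfway through indexing. The helper `missing_keys` is hypothetical and not part of LangChain.

```python
import os
from typing import List, Mapping, Optional

# Environment variable names assumed by this sketch: OPENAI_API_KEY is read by
# LangChain's OpenAI classes, ACTIVELOOP_TOKEN by Deep Lake.
REQUIRED_KEYS = ("OPENAI_API_KEY", "ACTIVELOOP_TOKEN")


def missing_keys(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return the required key names that are unset or empty in `env`.

    Defaults to the current process environment (os.environ).
    """
    env = os.environ if env is None else env
    return [name for name in REQUIRED_KEYS if not env.get(name)]


# Usage, after calling load_dotenv() in your own script:
#     missing = missing_keys()
#     if missing:
#         raise SystemExit(f"Missing API keys: {', '.join(missing)}")
```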

2. Setting up the indexer.py file

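Create a Python file, e.g., indexer.py, where you'll index the data. Import the necessary modules and load the API keys from a `.env` file into environment variables.

```python
import os

# Import paths from the LangChain versions current when this guide was written;
# newer releases move these classes to langchain_community / langchain_openai.
from dotenv import load_dotenv
from langchain.document_loaders import TextLoader
from langchain.embeddings.openai import OpenAIEmbeddings
from langchain.vectorstores import DeepLake

# Load environment variables (API keys) from .env
load_dotenv()

# disallowed_special=() lets the tokenizer encode text that happens to contain
# special-token strings such as "<|endoftext|>" instead of raising an error
embeddings = OpenAIEmbeddings(disallowed_special=())
```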

3. Cloning and Indexing the Target Repository

Next, we'll clone the Reddit source code repository, then load, split, and index its files. You can clone the repository from this link.

```python
root_dir = './reddit1.0-master'  # path to the cloned (or unzipped) repository
docs = []

# Walk the repository and load every file as UTF-8 text
for dirpath, dirnames, filenames in os.walk(root_dir):
    for file in filenames:
        try:
            loader = TextLoader(os.path.join(dirpath, file), encoding='utf-8')
            docs.extend(loader.load_and_split())
        except Exception:
            # Skip files that can't be read as UTF-8 text (e.g., images)
            pass
```
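The loop above tries to load every file and relies on UTF-8 decoding errors to skip binaries. If you would rather index only source files, one option is to filter by extension up front. The sketch below is an optional variation, not part of the original script: `iter_source_files` is a hypothetical helper, and the extensions you pass (for example `.lisp` and `.asd` for this Common Lisp codebase) are an assumption you should check against the repository.

```python
import os
from typing import Iterable, Iterator


def iter_source_files(root_dir: str, extensions: Iterable[str]) -> Iterator[str]:
    """Yield paths under root_dir whose extension is in `extensions`.

    Extension matching is case-insensitive, and hidden directories
    (such as .git) are skipped entirely.
    """
    wanted = {ext.lower() for ext in extensions}
    for dirpath, dirnames, filenames in os.walk(root_dir):
        # Editing dirnames in place stops os.walk from descending into hidden dirs
        dirnames[:] = [d for d in dirnames if not d.startswith(".")]
        for name in sorted(filenames):
            if os.path.splitext(name)[1].lower() in wanted:
                yield os.path.join(dirpath, name)
```

You would then replace the inner loop with something like `for path in iter_source_files(root_dir, [".lisp", ".asd"]):` and pass `path` to `TextLoader`.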

4. Embedding Code Snippets

Next, we use OpenAI embeddings to embed the code snippets. These embeddings are then stored in a VectorStore, which will allow us to perform an efficient similarity search.

```python
from langchain.text_splitter import CharacterTextSplitter

# Split the loaded documents into chunks of roughly 1,000 characters
text_splitter = CharacterTextSplitter(chunk_size=1000, chunk_overlap=0)
texts = text_splitter.split_documents(docs)

username = "mikelabs"  # replace with your username from app.activeloop.ai

# Create the dataset on Activeloop (dataset would be publicly available),
# embed each chunk with OpenAI, and upload the vectors
db = DeepLake(dataset_path=f"hub://{username}/reddit-source", embedding_function=embeddings)
db.add_documents(texts)

print("done")
```
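`CharacterTextSplitter` first splits on a separator (a blank line by default) and then merges the pieces into chunks of up to `chunk_size` characters, so its exact output depends on each file's layout. The simplified fixed-window sketch below is not LangChain's algorithm; it only illustrates what `chunk_size` and `chunk_overlap` control. `chunk_text` is a hypothetical helper.

```python
def chunk_text(text: str, chunk_size: int = 1000, chunk_overlap: int = 0) -> list[str]:
    """Split text into windows of at most chunk_size characters.

    Consecutive windows share chunk_overlap characters, so a function that
    straddles a boundary still appears whole in at least one chunk when the
    overlap is large enough.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reaches the end of the text
    return chunks
```

With `chunk_overlap=0`, as in the script above, adjacent chunks share no context; a nonzero overlap trades a little extra storage for fewer definitions cut in half.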

5. Utilizing GPT-4 to Process and Understand User Queries

Now we set up a second Python file, question.py, which uses GPT-4, a language model available through OpenAI's API, to process and understand user queries.

6. Constructing the Retriever

We construct a retriever using the VectorStore we created earlier.

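```python
from dotenv import load_dotenv
from langchain.embeddings.openai import OpenAIEmbeddings
from langchain.vectorstores import DeepLake

load_dotenv()  # read the API keys from .env
embeddings = OpenAIEmbeddings(disallowed_special=())

# Open the dataset created by indexer.py in read-only mode (use your username)
db = DeepLake(dataset_path="hub://mikelabs/reddit-source", read_only=True, embedding_function=embeddings)

retriever = db.as_retriever()
retriever.search_kwargs['distance_metric'] = 'cos'  # cosine similarity
retriever.search_kwargs['fetch_k'] = 100  # candidates fetched before re-ranking
retriever.search_kwargs['maximal_marginal_relevance'] = True
retriever.search_kwargs['k'] = 10  # snippets passed on to the LLM
```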
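With these settings, the retriever first fetches the 100 chunks most similar to the query (`fetch_k`, using cosine similarity) and then uses maximal marginal relevance (MMR) to keep 10 of them (`k`) that are relevant but not near-duplicates of each other. The plain-Python sketch below illustrates the standard MMR selection rule; it is not Deep Lake's implementation, and the `lambda_mult` weight (0.5 here, a common default) is an assumption of the sketch.

```python
import math
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def mmr(query: Sequence[float], candidates: List[Sequence[float]],
        k: int, lambda_mult: float = 0.5) -> List[int]:
    """Return indices of k candidates chosen by maximal marginal relevance.

    Each step picks the candidate maximizing
        lambda_mult * sim(query, c) - (1 - lambda_mult) * max sim(c, selected)
    so lambda_mult=1.0 reduces to plain top-k by similarity.
    """
    relevance = [cosine(query, c) for c in candidates]
    selected: List[int] = []
    remaining = list(range(len(candidates)))
    while remaining and len(selected) < k:
        def mmr_score(i: int) -> float:
            redundancy = max((cosine(candidates[i], candidates[j]) for j in selected),
                             default=0.0)
            return lambda_mult * relevance[i] - (1 - lambda_mult) * redundancy
        best = max(remaining, key=mmr_score)
        selected.append(best)
        remaining.remove(best)
    return selected
```

In the retriever above, `candidates` would be the embeddings of the 100 fetched chunks and `k` would be 10. Lowering `lambda_mult` favors diversity, which helps when many files repeat the same boilerplate.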

7. Building the Conversational Chain

We use the Conversational Retrieval Chain to link the retriever and the language model. This enables our system to process user queries and generate context-aware responses.

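```python
from langchain.chains import ConversationalRetrievalChain
from langchain.chat_models import ChatOpenAI

model = ChatOpenAI(model_name='gpt-4')  # switch to gpt-3.5-turbo if you want
qa = ConversationalRetrievalChain.from_llm(model, retriever=retriever)
```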
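Conceptually, each call to the chain does three things: if there is earlier conversation, it asks the LLM to rewrite the new question as a standalone question; it retrieves the chunks most relevant to that question; and it asks the LLM to answer from those chunks. The sketch below mimics that flow with plain functions so the data flow is visible. `run_turn`, `format_history`, and the prompt wording are hypothetical and are not LangChain's actual prompts; `llm` and `retrieve` are stand-ins you would supply (for example, thin wrappers around `model` and `retriever`).

```python
from typing import Callable, List, Tuple

History = List[Tuple[str, str]]  # (question, answer) pairs, like chat_history


def format_history(history: History) -> str:
    """Render (question, answer) pairs as plain text for a prompt."""
    return "\n".join(f"Human: {q}\nAssistant: {a}" for q, a in history)


def run_turn(question: str, history: History,
             llm: Callable[[str], str],
             retrieve: Callable[[str], List[str]]) -> str:
    """One conversational-retrieval turn: condense, retrieve, answer."""
    # 1. With prior turns, have the LLM rewrite the follow-up as a standalone question
    if history:
        standalone = llm(
            "Rephrase the follow-up question as a standalone question.\n"
            f"{format_history(history)}\nFollow-up: {question}\nStandalone question:"
        )
    else:
        standalone = question
    # 2. Look up the code chunks most relevant to the standalone question
    context = "\n\n".join(retrieve(standalone))
    # 3. Ask the LLM to answer using the retrieved chunks as context
    return llm(
        "Use the following code to answer the question.\n"
        f"{context}\n\nQuestion: {standalone}\nAnswer:"
    )
```

The condensing step is why follow-ups such as "And how does that file use it?" still retrieve the right code: retrieval runs on the rewritten question, not the bare follow-up.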

8. Asking Questions

We can now ask questions about the Reddit source code. The answers provided by the Conversational Retrieval Chain are context-aware and directly based on the codebase.

```python
questions = [
    "Explain what each file does, at a high level",
    "Explain how the files relate to each other",
    "<YOUR QUESTIONS HERE>",
]
chat_history = []

for question in questions:
    # Pass the running history so follow-up questions keep their context
    result = qa({"question": question, "chat_history": chat_history})
    chat_history.append((question, result['answer']))
    print(f"-> **Question**: {question} \n")
    print(f"**Answer**: {result['answer']} \n")
```

Here are some of the responses I got:

-> **Question**: Explain what each file does, at a high level

**Answer**: Here's a high-level explanation of each file's purpose in the provided code:

1. `packages`: Defines the packages and dependencies required for the Reddit system.

2. `cookiehash`: Handles cookie hashing and related functionality for user authentication and session management.

3. `recommend`: Manages recommendation-related functionalities for users, such as learning from user preferences and classifying sites based on user preferences.

4. `frame`: Handles the framing of the Reddit web interface and related components.

5. `autocompute`: Helps with the automatic computation of certain values within the system.

6. `user-info`: Provides functionality related to user information, such as user paths and user tokens.

7. `web`: Manages the web-related functionalities and handlers for the Reddit system.

8. `data`: Handles data-related functionalities, including interactions with databases and data storage.

9. `view-defs`: Defines the views and related components for the Reddit web interface.

10. `mail`: Manages email-related functionalities, such as sending and receiving emails.

11. `util`: Provides utility functions and tools that are used across the system.

12. `search`: Handles search-related functionalities within the Reddit system.

13. `memcached`: Manages interactions with the Memcached caching system.

14. `crc`: Handles CRC (Cyclic Redundancy Check) functionalities for checking data integrity.

15. `rss`: Manages RSS (Really Simple Syndication) feed-related functionalities for the Reddit system.

16. `sites`: Handles site-related functionalities, such as site paths, site learning, and site classification.

17. `user-panel`: Manages the user panel component of the Reddit web interface.

Keep in mind that this is a high-level overview, and each file contains further details and specific implementations of the mentioned functionalities.

-> **Question**: Explain how the files relate to each other

**Answer**: In the provided code, there is a system definition for a project named "reddit." This system is defined using the Common Lisp library ASDF (Another System Definition Facility). The system has several components (files) and each component may have dependencies on other components.

Here's a brief summary of the components and their dependencies:

1. "packages" - Defines the packages used in the project. No dependencies.

2. "cookiehash" - Depends on "packages" and "data."

3. "recommend" - Depends on "packages" and "user-info."

4. "frame" - Depends on "packages" and "web."

5. "autocompute" - Depends on "packages."

6. "user-info" - Depends on "data" and "packages."

7. "web" - Depends on "packages," "mail," "recommend," "data," "util," "mail," "rss," "memcached," "sites," "view-defs," "user-info," and "cookiehash."

8. "data" - Depends on "packages," "view-defs," and "util."

9. "view-defs" - Depends on "packages."

10. "mail" - Depends on "packages."

11. "util" - Depends on "packages."

12. "search" - Depends on "packages."

13. "memcached" - Depends on "packages" and "crc."

14. "crc" - Depends on "packages."

15. "rss" - Depends on "memcached," "packages," and "sites."

16. "sites" - Depends on "packages," "data," "util," "search," "autocompute," and "user-info."

17. "mail" (duplicate) - Depends on "packages" and "data."

18. "user-panel" - Depends on "data," "packages," "web," and "sites."

Each file/component may contain functions, variables, and other code related to specific aspects of the project. The dependencies between these components indicate that a particular component relies on the code and functionality provided by the components it depends on. For example, the "recommend" component depends on "packages" and "user-info," meaning it uses some functions or variables from those components.

Remember that the code is released under the MIT License, which allows for usage, modification, distribution, and selling of the code under certain conditions, but it is provided "as is" without any warranty.

What will you ask? What will you learn? Let me know!
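One quick sanity check on an answer like the dependency list above is to feed it into a topological sort: if the model's graph were internally inconsistent (for example, circular), the sort would fail. The sketch below uses Python's standard `graphlib` module (3.9+) on the dependencies exactly as GPT-4 reported them, with the duplicated "mail" entry merged. It only checks the answer's internal consistency, not whether it matches the actual ASDF system definition in the repository.

```python
from graphlib import TopologicalSorter

# Component -> components it depends on, as reported by GPT-4 (unverified)
REPORTED_DEPS = {
    "packages": [],
    "cookiehash": ["packages", "data"],
    "recommend": ["packages", "user-info"],
    "frame": ["packages", "web"],
    "autocompute": ["packages"],
    "user-info": ["data", "packages"],
    "web": ["packages", "mail", "recommend", "data", "util", "rss",
            "memcached", "sites", "view-defs", "user-info", "cookiehash"],
    "data": ["packages", "view-defs", "util"],
    "view-defs": ["packages"],
    "mail": ["packages", "data"],  # the two "mail" entries, merged
    "util": ["packages"],
    "search": ["packages"],
    "memcached": ["packages", "crc"],
    "crc": ["packages"],
    "rss": ["memcached", "packages", "sites"],
    "sites": ["packages", "data", "util", "search", "autocompute", "user-info"],
    "user-panel": ["data", "packages", "web", "sites"],
}

# static_order() lists each component after everything it depends on, and
# raises graphlib.CycleError if the reported graph contains a cycle
load_order = list(TopologicalSorter(REPORTED_DEPS).static_order())
print(load_order)
```

Comparing this order against the component list in the repository's system definition file is a quick way to spot places where the model's summary and the real code disagree.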

Limitations

After talking with Shriram Krishnamurthi on Twitter, I realized I should point out that this approach has some limitations for understanding code.

- The analysis may be incomplete, and errors in it can cause you to miss key details or send you down the wrong path.

- Results may be "polluted" by what the LLM already learned during training about overlapping terms. For example, the concept of "Reddit Karma" is probably already in GPT-4's training data, so if you ask how Karma works, it might answer from that prior knowledge rather than from the supplied code.

You will need to exercise good judgment and take a trust-but-verify approach with this rough first attempt. Or maybe you can take things further and build a better system!
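A cheap first step in verifying is to confirm that the files the model names actually exist. The sketch below assumes each component corresponds to a `<name>.lisp` file somewhere under the cloned repository (the usual convention for ASDF `:file` components, but check the repository itself). `unmatched_names` is a hypothetical helper, and an empty result only means the names exist, not that the model's descriptions of them are correct.

```python
import os
from typing import Iterable, List


def unmatched_names(root_dir: str, names: Iterable[str],
                    extension: str = ".lisp") -> List[str]:
    """Return the names for which no `<name><extension>` file exists under root_dir."""
    present = set()
    for _dirpath, _dirnames, filenames in os.walk(root_dir):
        for filename in filenames:
            stem, ext = os.path.splitext(filename)
            if ext.lower() == extension.lower():
                present.add(stem)
    # Preserve the caller's order so the report lines up with the model's answer
    return [name for name in names if name not in present]
```

For example, `unmatched_names('./reddit1.0-master', ["packages", "web", "crc"])` lists any of those three components that have no matching file.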

Conclusion

Throughout this guide, we reverse-engineered Reddit's public source code repository for version 1 of the site using LangChain. By asking an AI targeted questions instead of reading every file, we can save considerable time and effort on manual code examination.

LangChain is a powerful tool for code understanding and generation. By combining a VectorStore, the Conversational Retrieval Chain, and an LLM, it lets developers analyze code repositories efficiently, get context-aware answers, and generate new code.

LangChain's workflow involves indexing the codebase, embedding code snippets, processing user queries with a language model, and using the Conversational Retrieval Chain to retrieve relevant code snippets. By customizing the retriever and building the conversational chain, developers can fine-tune the retrieval process for precise results.

By following the step-by-step guide, you can leverage LangChain to enhance your code comprehension, obtain context-aware answers, and even generate code snippets within GitHub repositories. LangChain opens up new possibilities for productivity and understanding. What will you build with it? Thanks for reading!
