Google's new LLM doctor is right way more often than a real doctor

The correct diagnosis appeared in the top 10 of the LLM's differential diagnosis list 59% of the time, vs. 34% for unassisted human doctors.

Physicians face an immense challenge when evaluating patients with confusing constellations of symptoms and clinical findings. They must mentally generate a list of possible diagnoses that could explain the patient's presentation. This list, known as a differential diagnosis, provides a roadmap to guide further testing and treatment. But arriving at an accurate differential diagnosis can be extraordinarily difficult in complex, atypical cases, even for the most experienced doctors.

Now, researchers at Google have developed a promising new AI system, described in a research paper, that could aid physicians in this difficult task. The system is based on conversational large language models, a type of AI model that has advanced rapidly in recent years thanks to training on massive text datasets.

The Immense Challenge of Diagnosis in Medicine

Diagnosis sits at the very heart of a physician's role. Patients rely on doctors' expertise to correctly determine the underlying cause of their symptoms. But the process of generating an appropriate differential diagnosis list requires pulling from an extensive reservoir of medical knowledge. Doctors must mentally compile a list of possible diagnoses by matching the patient's symptoms, exam findings, and test results to diseases they have learned about.

This cognitive process resembles searching through an enormous library of diseases in your mind, trying to identify which volumes on the shelf best fit the details of the case at hand. The entire undertaking depends on the physician's medical knowledge and clinical acumen.

For straightforward presentations like a child with a fever and cough, creating a short differential diagnosis list is usually manageable. But doctors often face complex cases with unclear presentations, especially when patients have multiple comorbidities. These types of diagnostic challenges stretch the limits of even seasoned physicians' knowledge and pattern recognition abilities.

When faced with perplexing cases, doctors can struggle to produce a sufficiently broad and accurate differential diagnosis list. Yet a comprehensive differential is crucial. If the correct diagnosis is absent from the initial list, it may never be discovered, or may be found only after extensive, costly, and sometimes invasive testing. An inaccurate differential diagnosis can lead to delayed treatment, patient harm, and wasted healthcare resources.

Subscribe or follow me on Twitter for more content like this!

The Promise of Large Language Models for Clinical Reasoning

We all know LLMs are getting more powerful (though some of the apparent gains may be due to task contamination; see my last post on that one). LLMs are trained by exposing them to colossal datasets of natural text from books, websites, and other sources. They develop statistical representations that allow them to understand and generate new text.

Researchers have found that fine-tuning LLMs on domain-specific datasets - like medical text - enables them to acquire knowledge and capabilities useful for particular applications. For instance, LLMs fine-tuned on medical data have shown promising performance at medical question-answering and information retrieval tasks.

This progress raised the possibility that properly designed LLMs could assist physicians with clinical reasoning tasks like generating differential diagnoses. However, it was unknown if LLMs could perform well on real-world diagnostic challenges, rather than just standardized medical exams.

Testing an LLM's Diagnostic Abilities on Complex Case Reports

To investigate, researchers at Google and DeepMind developed an LLM optimized specifically for clinical diagnostic reasoning, the "LLM for DDx." They fine-tuned a large general-purpose LLM on datasets of medical dialogues, clinical notes, and question-answering examples.

The researchers then tested the LLM for DDx, called Articulate Medical Intelligence Explorer (AMIE) in the research post, on 302 real-world case reports from the New England Journal of Medicine (NEJM). These NEJM case reports are widely known to be extremely complex diagnostic challenges.

Each case report details the clinical history, exam findings, and initial test results of a real patient whose diagnosis was initially perplexing but ultimately confirmed. The case details are written specifically so that expert clinicians reading the journal can logically reason their way to the diagnosis and learn from it.

To focus the LLM, the researchers provided it with only the initial clinical details from each report. The confirmed final diagnosis and the expert analysis were withheld. The LLM had to analyze the details and produce a ranked list of possible diagnoses based solely on the limited information given.
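The protocol boils down to one rule: the model sees the case presentation and nothing else. Below is a minimal Python sketch of that separation, assuming a hypothetical `CaseReport` record and treating the model as any function that returns a ranked list of diagnoses. The field names, prompt wording, example vignette, and `stub_model` are all illustrative assumptions, not the researchers' code.

```python
from dataclasses import dataclass
from typing import Callable, List


# Hypothetical case record; these field names are illustrative, not the NEJM format.
@dataclass
class CaseReport:
    presentation: str       # history, exam findings, and initial test results
    final_diagnosis: str    # confirmed diagnosis: withheld from the model
    expert_discussion: str  # expert analysis: also withheld


# Any function mapping prompt text to a ranked list of diagnoses
# (most likely first) can stand in for the model.
DdxModel = Callable[[str], List[str]]


def build_prompt(case: CaseReport) -> str:
    """Expose only the initial clinical details to the model."""
    return (
        "List the most likely diagnoses for the following case, "
        "ranked from most to least likely.\n\n" + case.presentation
    )


def get_differential(case: CaseReport, model: DdxModel, k: int = 10) -> List[str]:
    """Query the model and keep at most the top-k ranked diagnoses."""
    return model(build_prompt(case))[:k]


# Hypothetical stub in place of a real LLM call.
def stub_model(prompt: str) -> List[str]:
    return ["sarcoidosis", "tuberculosis", "lymphoma"]


case = CaseReport(
    presentation="A 45-year-old with cough, fatigue, and hilar lymphadenopathy.",
    final_diagnosis="sarcoidosis",
    expert_discussion="(withheld)",
)
print(get_differential(case, stub_model, k=2))  # ['sarcoidosis', 'tuberculosis']
```

Keeping the withheld fields out of `build_prompt` by construction, rather than filtering them out later, makes it harder for the answer to leak into the model's input by accident.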

The AI Performed Surprisingly Well as a Diagnostician

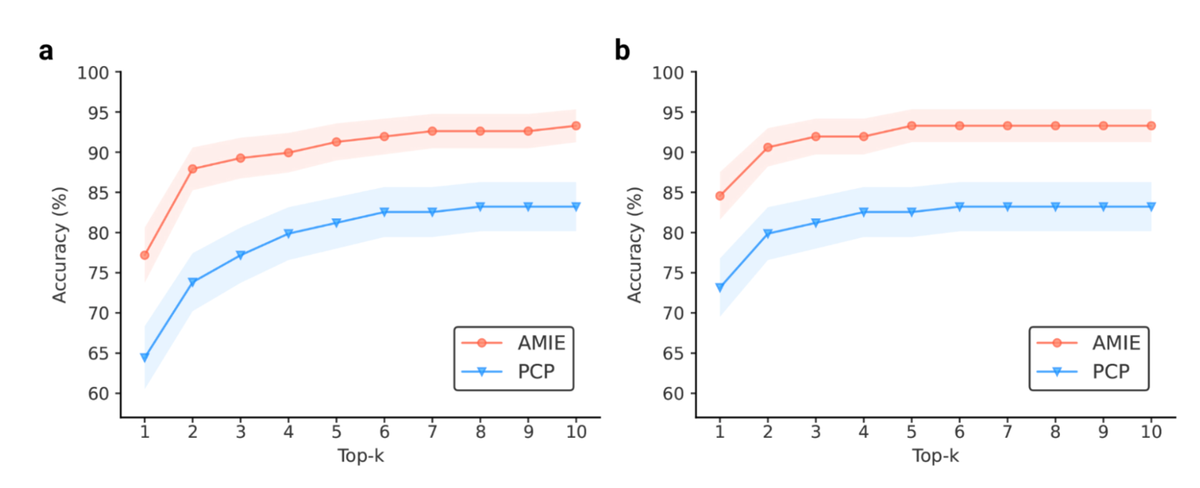

The LLM's differential diagnosis lists included the final confirmed diagnosis in the top 10 possibilities in 59% of cases.

To provide a comparison, the researchers had experienced board-certified physicians form differential diagnosis lists for the same cases, without any assistance. The doctors included the right diagnosis in the top 10 only 34% of the time, significantly worse than the LLM.
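Top-10 accuracy is a simple metric: a case counts as a hit if the confirmed diagnosis appears anywhere among the first 10 entries of the ranked list, and the score is the fraction of cases that are hits. Here is a minimal Python sketch, assuming diagnoses can be compared by case-insensitive exact string match. That is a big simplification, since a real evaluation needs clinical judgment to decide whether two differently worded diagnoses are the same. The function names and toy cases are hypothetical.

```python
from typing import Sequence


def in_top_k(ddx: Sequence[str], final_diagnosis: str, k: int = 10) -> bool:
    """True if the confirmed diagnosis appears among the first k entries.

    Uses case-insensitive exact string matching, a crude stand-in for the
    clinical judgment needed to decide whether two diagnoses match.
    """
    target = final_diagnosis.strip().lower()
    return any(d.strip().lower() == target for d in ddx[:k])


def top_k_accuracy(
    ddx_lists: Sequence[Sequence[str]],
    final_diagnoses: Sequence[str],
    k: int = 10,
) -> float:
    """Fraction of cases whose confirmed diagnosis is in the top k."""
    if len(ddx_lists) != len(final_diagnoses):
        raise ValueError("need exactly one differential per case")
    if not ddx_lists:
        raise ValueError("no cases to score")
    hits = sum(in_top_k(ddx, dx, k) for ddx, dx in zip(ddx_lists, final_diagnoses))
    return hits / len(ddx_lists)


# Toy example with three hypothetical cases.
answers = ["sarcoidosis", "Whipple disease", "Lyme disease"]
model_lists = [
    ["Sarcoidosis", "lymphoma"],
    ["celiac disease", "Whipple disease"],
    ["lupus"],
]
doctor_lists = [
    ["lymphoma", "sarcoidosis"],
    ["celiac disease"],
    ["lupus"],
]

print(top_k_accuracy(model_lists, answers))       # hits in 2 of 3 cases
print(top_k_accuracy(doctor_lists, answers))      # hits in 1 of 3 cases
print(top_k_accuracy(model_lists, answers, k=1))  # hits in 1 of 3 cases
```

The gap between the top-1 and top-10 scores shows why the headline numbers describe lists that *include* the correct diagnosis: a list can contain the right answer without ranking it first.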

The quality of the LLM's differential diagnosis lists was also rated by senior medical specialists. Across all 302 cases, they rated the LLM's lists as significantly more appropriate and comprehensive than those created by unassisted physicians.

These results suggest that fine-tuned LLMs can reason through complex medical cases and generate high-quality differential diagnoses, matching or exceeding unassisted human doctors on this benchmark.

Going Further: LLMs as a Physician's Sidekick

Having shown the LLM's promise as a standalone diagnostician, the researchers also evaluated its ability to serve as an interactive assistant to physicians.

Could querying the LLM allow doctors to produce better differential diagnoses themselves, compared to using conventional information sources?

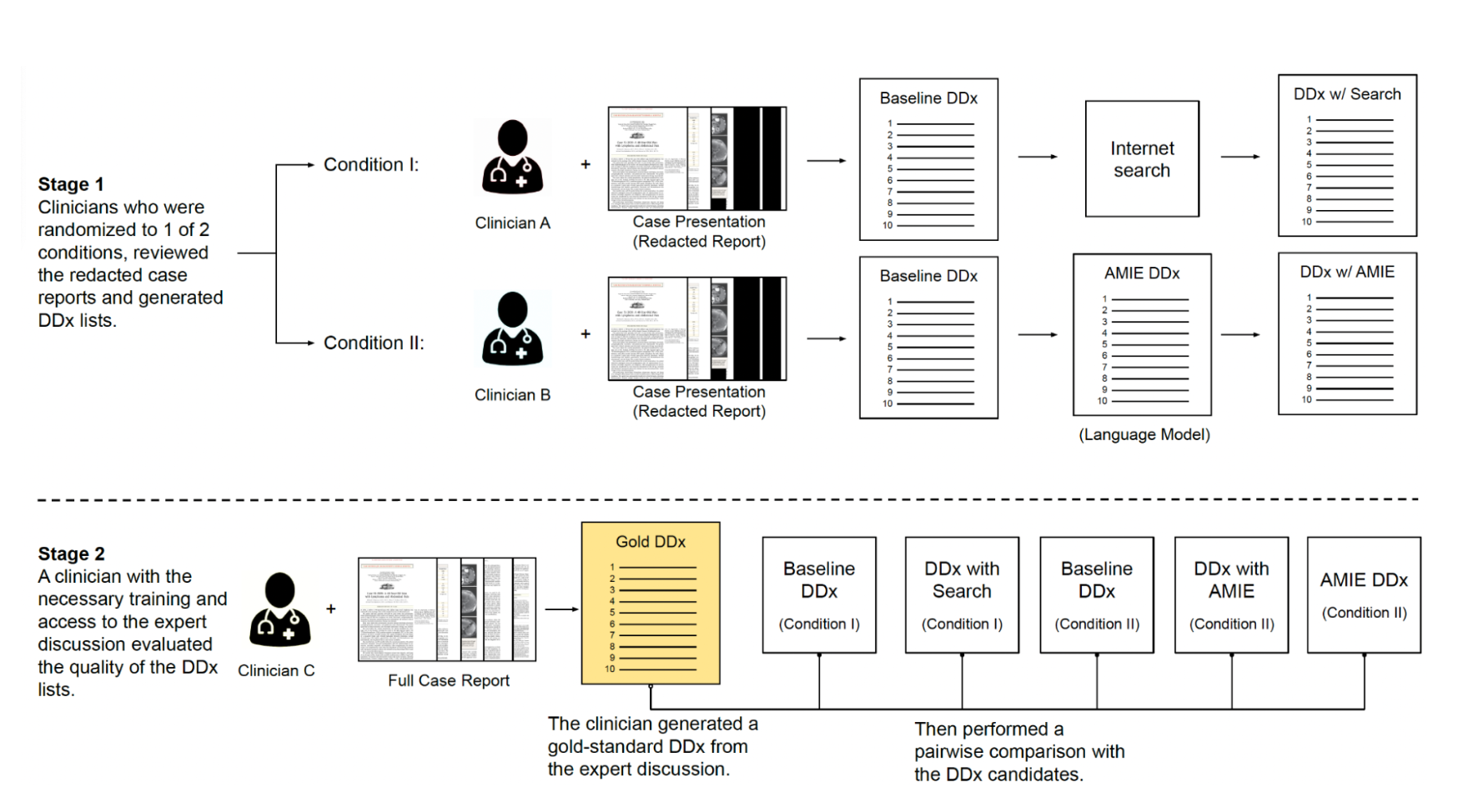

To find out, the researchers had general physicians diagnose 100 of the complex NEJM cases in two different scenarios:

- Doctors formed an initial differential diagnosis list without assistance. Then they did it again but were allowed to use online search tools and medical resources.

- Doctors again created an initial list without help. But this time they could query the LLM for assistance in generating the second list.

The physicians had access to the LLM through an interactive conversational interface. They could ask the LLM questions about the case details and leverage its responses.

With the LLM's assistance, the doctors created differential diagnosis lists that contained the correct diagnosis 52% of the time, compared to 44% for doctors who used search tools instead and 36% for their initial, unassisted lists. Independent medical specialists also rated their lists as more appropriate and comprehensive when the LLM was available.

The physicians found querying the AI just as efficient as searching online resources, yet it improved their diagnostic accuracy.

Limitations

This research points to the substantial potential of LLMs to enhance physicians' clinical reasoning. The LLM system showed it could analyze challenging cases and generate high-quality differential diagnoses on its own. It also improved doctors' diagnostic accuracy when used as an interactive assistant.

However, the authors rightly note that rigorous real-world testing is still needed before any LLM system is ready for actual use in healthcare settings. The study design differed in important ways from clinical reality.

LLMs still have weaknesses in handling rare diseases and logically combining findings. There are also important open questions about system safety, fairness, and transparency that must be addressed before LLMs can be responsibly deployed in medicine.

Regardless, this work takes an important step forward in assessing LLMs' aptitude for the critical task of clinical diagnosis. It also provides an evaluation framework for the rigorous testing of AI systems' reasoning abilities on standardized medical cases.

As researchers continue to refine LLMs' knowledge and skills, studies like this suggest they may one day act as helpful assistants that allow doctors to quickly generate thorough differential diagnoses. Such tools could improve the quality, timeliness, and equity of care. But responsible development and testing will be critical to ensure these systems are safe and truly enhance clinical practice.

