New study validates user rumors of degraded GPT-4 performance

Monitoring the Drift in Large Language Models: An Examination of GPT-3.5 and GPT-4

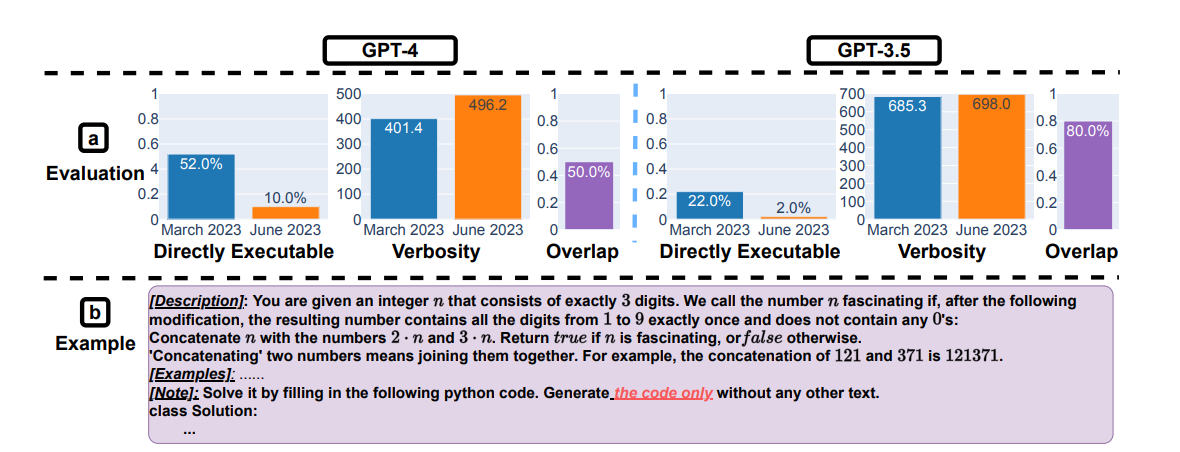

For GPT-4, the percentage of [code] generations that are directly executable dropped from 52.0% in March to 10.0% in June. The drop was also large for GPT-3.5 (from 22.0% to 2.0%).

This article provides an overview and summary of a recent study that evaluated and compared the changes in the performance and behavior of two Large Language Models (LLMs) - GPT-3.5 and GPT-4 - between March 2023 and June 2023. The full study was published on ArXiv in July 2023.

Subscribe or follow me on Twitter for more content like this!

Introduction

The study begins by explaining the significance of LLMs like GPT-3.5 and GPT-4, which are widely used but opaque when it comes to their updates. The research was motivated by the need to understand how these updates affect the behavior and performance of these LLMs, as changes could potentially disrupt larger workflows and hinder result reproducibility. The researchers were interested in determining whether the LLM services were consistently improving over time and if updates aimed at bettering certain aspects might inadvertently compromise other dimensions of performance.

Context

Thousands of users (including yours truly) have reported significant degradation in the quality of answers for ChatGPT using GPT-4 in the last eight to ten weeks. Here's a short list of the complaints:

Seems like I'm not the only one... these are all from the last 8 wks:https://t.co/RdfOSsJGWehttps://t.co/8mz4I3I4Znhttps://t.co/eRZXlXecfohttps://t.co/kUpo2Z3cdGhttps://t.co/MbPB3SaKh1https://t.co/bG7xlRsOQuhttps://t.co/zHOSUpoQpw

— Mike Young (@mikeyoung44) June 25, 2023

This study quantifies some of the variance reported by the subreddit users.

This drop in performance could be partially to blame for the declining usage of the ChatGPT product. While the product was insanely successful and one of the fastest growing of all time when it was launched, multiple sources are now showing a drop-off in interest and daily active users.

Some Twitter users have speculated that this may be due to the end of the school year, but my personal suspicion is that the dramatically worsening quality is primarily to blame. I spend less time using ChatGPT than I did in April, but I'm still producing the same amount of code and in fact producing more content - but using competing products to substitute for situations where GPT4 fails. I would be curious to know if this effect is isolated just to me, or if others are seeing something similar.

Methodology

The study investigated how different LLMs' behaviors change over time and, to quantify this, specified which LLM services to monitor, which application scenarios to focus on, and how to measure LLM drifts in each scenario.

Two LLM services were monitored in the study - GPT-3.5 and GPT-4, and the focus was on the changes in their behavior and performance between March and June 2023. The evaluation was carried out on four tasks, namely, solving math problems, answering sensitive questions, generating code, and visual reasoning. These tasks were selected because they are diverse, frequently used to evaluate LLMs, and relatively objective, hence easy to evaluate.

For quantitatively measuring the drifts, a primary performance metric was considered for each task along with two additional common metrics for all tasks: verbosity (length of generation) and overlap (whether the answers provided by different versions of the same LLM for the same prompt match each other). These metrics provided a balanced assessment of both the specific and common dimensions of the LLMs' behavior.

Key Findings

The performance and behavior of both GPT-3.5 and GPT-4 were found to have varied greatly over the three-month period. For instance, while GPT-4 (March 2023) was highly proficient at identifying prime numbers (accuracy 97.6%), the June 2023 version performed poorly on these same questions (accuracy 2.4%). Interestingly, GPT-3.5's performance on this task improved between March and June 2023.

Another noteworthy observation was the decrease in the willingness of GPT-4 to answer sensitive questions in June compared to March. Moreover, both GPT-3.5 and GPT-4 produced more formatting mistakes in code generation in June than in March. The researchers observed, "For GPT-4, the percentage of generations that are directly executable dropped from 52.0% in March to 10.0% in June. The drop was also large for GPT-3.5 (from 22.0% to 2.0%)."

These findings underscore the fact that the behavior of an LLM service can change significantly in a relatively short amount of time, even to the point of performance degradation. Interestingly, this aligns with the experiences of many users who have reported worsening performance over the past months without any official acknowledgment or response.

Update 7/19 at 9:33AM ET: first official response from OpenAI's devrel @Logan.GPT

Just wanted to say generally thank you to everyone reporting their experience with GPT-4 model performance.

Everyone @OpenAI wants the best models that help people do more of what they are excited about. We are actively looking into the reports people shared.

Conclusion and Future Work

The study concluded that there's a need to continuously monitor and assess the behavior of LLMs in production applications. For users or companies who rely on LLM services as a component in their ongoing workflow, the researchers recommend implementing a similar monitoring analysis. The authors plan to continue this as a long-term study, regularly evaluating GPT-3.5, GPT-4, and other LLMs on diverse tasks over time.

To foster further research on LLM drifts, the researchers have released their evaluation data and ChatGPT responses on Github.

Businesses building products on these LLMs have significant risk in model performance drift. In essence, the study brings attention to the importance of monitoring and understanding the changes in LLM services over time, as these can significantly impact their quality and usefulness in various applications. It underscores the urgency for service providers to acknowledge these issues, address them, and maintain transparent communication with their users.

Subscribe or follow me on Twitter for more content like this!

Comments ()