Turning images into 3D models in minutes, not hours

A new method that extracts accurate and editable meshes from 3D Gaussian Splatting representations within minutes on a single GPU

Researchers from the LIGM laboratory in France have developed a technique for quickly creating high-fidelity 3D mesh models from collections of images captured around real-world scenes (project site). Their method, called SuGaR, reconstructs detailed triangle meshes in minutes by combining a neural scene representation with classical computational geometry. This capability can give creators, educators, and professionals a far more accessible way to use 3D models across many applications.

In this article, we'll take a look at what made their approach unique and what it means for other AI projects. Let's begin!

Subscribe or follow me on Twitter for more content like this!

The Challenge of 3D Reconstruction

Reconstructing accurate 3D models of real environments has long been an arduous undertaking, requiring specialized equipment, carefully orchestrated capture processes, and extensive manual post-processing. Laser scanning rigs and structured light depth cameras can directly capture geometric scans but remain slow, expensive, and unwieldy.

Photogrammetry methods based on Structure from Motion generate sparse 3D point clouds from camera images, but producing clean, detailed surface models from those point clouds alone has proven remarkably difficult. And while impressive in quality, state-of-the-art neural radiance fields require rendering-intensive optimization cycles lasting hours or days even on modern GPUs to convert their volumetric scene representations into usable surface meshes.

So while many downstream use cases in simulation, education, digitization, and creative media stand to benefit tremendously from accessible high-quality 3D scene representations, the capture and development barriers have remained frustratingly high for most people.

Novel Combination of Techniques

The paper introduces SuGaR, a method that combines an emerging neural scene representation with established computational geometry algorithms to overcome these challenges and provide a fast, accessible path to 3D models.

The technique builds on a recent neural particle-based scene representation method called 3D Gaussian Splatting. By optimizing the orientations, dimensions, emissions, and other properties of millions of tiny 3D Gaussian primitives to best reproduce a set of input camera images, Gaussian Splatting can reconstruct vivid neural renderings of a scene in minutes.
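To make "orientations" and "dimensions" concrete, here is a minimal sketch of a single Gaussian primitive, assuming the parameterization usually described for 3D Gaussian Splatting: a center, a rotation stored as a quaternion, and one scale per axis, giving a covariance of the form R diag(s²) Rᵀ. The function names are my own and the color ("emission") terms are left out, so treat this as an illustration rather than the authors' code.

```python
import math


def quat_to_rotation(q):
    """Convert a quaternion (w, x, y, z) to a 3x3 rotation matrix."""
    w, x, y, z = q
    n = math.sqrt(w * w + x * x + y * y + z * z)
    w, x, y, z = w / n, x / n, y / n, z / n  # normalize defensively
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]


def gaussian_density(point, center, quat, scales):
    """Unnormalized density exp(-0.5 * d^T Sigma^-1 d), Sigma = R diag(s^2) R^T."""
    R = quat_to_rotation(quat)
    d = [p - c for p, c in zip(point, center)]
    # Express the offset in the Gaussian's own frame: local = R^T d.
    local = [sum(R[r][k] * d[r] for r in range(3)) for k in range(3)]
    # In that frame the covariance is diagonal, so each axis is just scaled.
    mahalanobis_sq = sum((l / s) ** 2 for l, s in zip(local, scales))
    return math.exp(-0.5 * mahalanobis_sq)
```

Calling `gaussian_density((0, 0, 0.1), (0, 0, 0), (1, 0, 0, 0), (1.0, 1.0, 0.1))` evaluates a pancake-shaped Gaussian one thin-axis standard deviation away from its center. Training adjusts these per-Gaussian parameters until the rendered images match the input photos.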

However, the independent Gaussian particles remain unstructured after optimization. The key innovation from SuGaR is introducing a new training process that encourages the particles to conform to surfaces while preserving detail. This alignment allows treating the particles like a structured point cloud for reconstruction.
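Why does surface alignment help? A rough intuition, sketched below under my own assumptions: once a Gaussian is flattened against a surface, its thinnest axis points roughly along the surface normal, and near the Gaussian its density depends mostly on the distance along that axis. The helper names are hypothetical, the rotation is passed in as a plain 3x3 matrix whose columns are the Gaussian's axes, and SuGaR's actual training term is more involved and not reproduced here.

```python
import math


def thin_axis(rotation, scales):
    """Return (axis, scale) for the smallest scale of a Gaussian.

    For a flat Gaussian lying on a surface, this axis approximates the
    surface normal (up to sign).
    """
    k = min(range(3), key=lambda i: scales[i])
    axis = [rotation[r][k] for r in range(3)]  # k-th column of the rotation
    return axis, scales[k]


def flat_density(point, center, rotation, scales):
    """Approximate density that keeps only the falloff along the thin axis.

    This ignores the slower in-plane falloff, so it is only a reasonable
    approximation close to the Gaussian's center.
    """
    normal, s_min = thin_axis(rotation, scales)
    offset = sum((p - c) * n for p, c, n in zip(point, center, normal))
    return math.exp(-0.5 * (offset / s_min) ** 2)
```

With an identity rotation and scales `(1.0, 1.0, 0.01)`, `thin_axis` returns the z-axis, and `flat_density` drops off quickly as a point leaves the z = 0 plane. Each well-aligned Gaussian therefore carries a position plus an approximate normal, which is the kind of information surface reconstruction algorithms work from.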

Leveraging this point structure, SuGaR then executes a computational technique called Poisson Surface Reconstruction to efficiently generate a mesh directly from the aligned particles. Handling millions of particles simultaneously results in a detailed, triangulated model that conventional techniques would struggle with.
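For readers who want to try Poisson Surface Reconstruction themselves, here is a minimal sketch using the Open3D library, which is my choice for illustration and not necessarily what the authors use. It assumes you already have points with outward-facing normals; how SuGaR derives those from the aligned Gaussians is not shown. `oriented_points` is a hypothetical helper, and Open3D and NumPy are imported lazily so that the helper runs without them.

```python
import math


def oriented_points(samples):
    """Split (position, normal) pairs into two lists with unit-length normals.

    Samples whose normal has zero length are skipped, since Poisson
    reconstruction needs a usable orientation at every point.
    """
    points, normals = [], []
    for position, normal in samples:
        length = math.sqrt(sum(c * c for c in normal))
        if length == 0.0:
            continue
        points.append([float(c) for c in position])
        normals.append([c / length for c in normal])
    return points, normals


def poisson_mesh(points, normals, depth=8):
    """Run Open3D's Poisson reconstruction on an oriented point cloud.

    Requires the third-party packages open3d and numpy. `depth` is the
    octree depth; higher values give finer but slower meshes.
    """
    import numpy as np
    import open3d as o3d

    pcd = o3d.geometry.PointCloud()
    pcd.points = o3d.utility.Vector3dVector(np.asarray(points, dtype=np.float64))
    pcd.normals = o3d.utility.Vector3dVector(np.asarray(normals, dtype=np.float64))
    mesh, densities = o3d.geometry.TriangleMesh.create_from_point_cloud_poisson(
        pcd, depth=depth
    )
    return mesh, densities
```

A typical call would be `mesh, densities = poisson_mesh(*oriented_points(samples))`. The returned per-vertex densities can be used to trim poorly supported parts of the mesh.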

In essence, SuGaR moves the bulk of the computational load into a fast, scalable up-front step that structures the point cloud. Mesh generation itself then no longer depends on rendering-intensive optimization, which is what makes fast model construction possible.

Validating Effectiveness

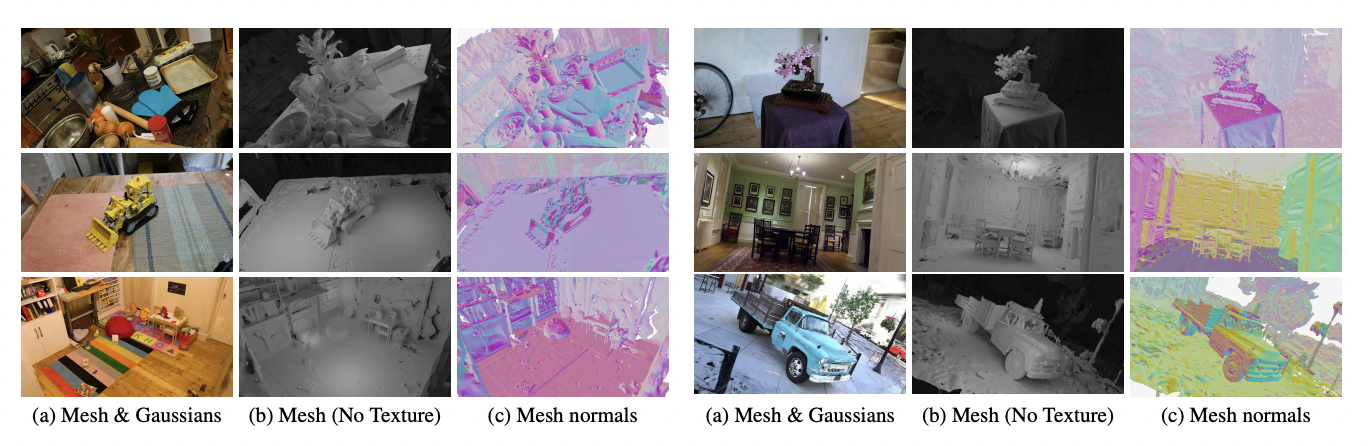

The researchers demonstrated experimentally that SuGaR can quickly build high-quality models across extensive public datasets featuring indoor scenes, outdoor landscapes, detailed structures, specularity, lighting variations, and other modeling challenges.

Examples include reconstructing detailed meshes of complex indoor environments from the Mip-NeRF 360 dataset and generating vivid meshes of structures like vehicles and buildings from the Tanks & Temples dataset.

Quantitative and qualitative comparisons to existing state-of-the-art neural and hybrid reconstruction techniques showed that SuGaR provides significantly faster mesh creation, with rendering quality and geometric accuracy competitive with methods requiring orders of magnitude more computation. The authors state: "Our method is much faster at retrieving a 3D mesh from 3D Gaussian Splatting, which is itself much faster than NeRFs. As our experiments show, our rendering done by bounding gaussians to the mesh results in higher quality than previous solutions based on meshes."

The authors also highlight the speed of their process: "Retrieving such an editable mesh for realistic rendering is done within minutes with our method, compared to hours with the state-of-the-art method on SDFs, while providing a better rendering quality."

Conclusion

The SuGaR technique significantly improves 3D model reconstruction. Traditional methods like laser scanning are costly and complex, and while neural radiance fields are high-quality, they're slow and resource-intensive. SuGaR changes this by combining neural scene representation with computational geometry. It starts with 3D Gaussian Splatting to create a neural rendering made of Gaussian particles, and then it aligns those particles with the scene's surfaces so that they act like a structured point cloud. This is key for the next step: using Poisson Surface Reconstruction to turn the particles into a detailed mesh. The process is faster because it moves the heavy computation to the beginning.

SuGaR has been tested on various datasets, handling different challenges like indoor and outdoor scenes. It's not just faster than NeRFs, but it also maintains high quality and accuracy. This makes creating detailed 3D models quicker and more accessible, which is a big deal for fields like simulation, education, and media.
