Meta unveils Emu Edit: Precise image editing via text instructions

Existing systems struggle to interpret edit instructions correctly. Emu Edit tackles this through multi-task training.

Artificial intelligence has made incredible strides in recent years at generating and modifying visual content. But a persistent challenge has been enabling AI systems to precisely edit images based on free-form instructions from users. Now, researchers from Meta's AI lab have developed a novel AI system called Emu Edit (project page, paper) that makes significant progress on this problem through multi-task learning. Their work represents a major advance for instruction-based image editing. Let's see what the model can do and how it works!

Subscribe or follow me on Twitter for more content like this!

The Context: AI-Powered Image Editing

Image editing is a ubiquitous task in today's world, used by professionals and amateurs alike. However, conventional editing tools like Photoshop require significant expertise and time investment to master. AI-based editing holds the promise of enabling casual users to make sophisticated image edits using natural language.

Early AI editing systems allowed users to tweak specific parameters like image brightness or apply predefined filters. More recent generative AI models such as DALL-E and Stable Diffusion take a text prompt and generate an entirely new image from scratch.

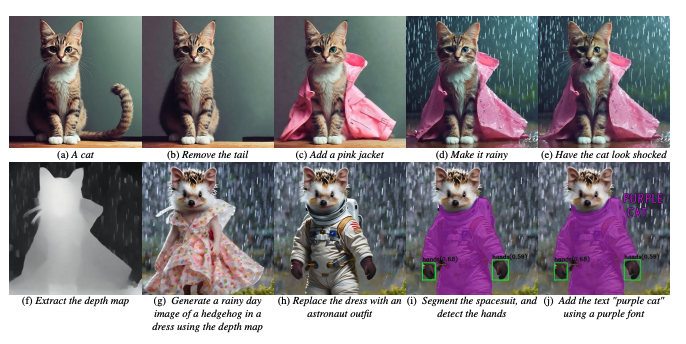

Instruction-based editing combines these approaches - users provide an input image and edit instructions in text, and the AI modifies the original image accordingly. This allows for more precise, customizable edits. For instance, a user could input a photo of their cat and instruct the AI to "Add a pink bow to the cat's collar."

The Difficulty of Interpreting Edit Instructions

A major obstacle faced by instruction-based editing systems is accurately interpreting free-form natural language edit instructions. Humans excel at this due to our innate language understanding and world knowledge. But for AI systems, mapping text to precise visual edits is hugely challenging.

They must identify which objects or regions in the image to modify based on the instruction text. Then make appropriate changes while preserving unrelated sections. Current systems struggle with these capabilities, often misunderstanding instructions or making haphazard edits.

Developing AI that can follow edit instructions as adeptly as humans requires innovations in model architecture, training techniques, and benchmark tasks. This is the gap Emu Edit aims to close.

Emu Edit: A Multi-Task Approach

Emu Edit employs multi-task learning to train a single model capable of diverse image editing and computer vision tasks. This represents a departure from prior work that focused on individual tasks like object removal or color editing.

Specifically, the researchers compiled a dataset covering 16 distinct tasks grouped into three categories:

- Region-based editing - Tasks like adding, removing, or substituting objects and changing textures.

- Free-form editing - Global style changes and text editing.

- Vision tasks - Object detection, segmentation, depth estimation, etc.

Formulating all tasks, including vision ones, as generative image editing enabled unified training. The researchers customized the data generation process for each task to ensure diversity and precision.

This multi-task approach provides two key advantages. First, training on vision tasks improves the model's recognition abilities - critical for accurate region-based edits. Second, it exposes the model to a wide range of image transformations beyond just editing.

Guiding the Model with Task Embeddings

A model trained on such diverse tasks needs to infer which type of editing the instruction requires. To enable this, Emu Edit utilizes learned "task embeddings".

For each task, the researchers train an embedding vector that encodes the task identity. During training, the task embedding is provided to the model and optimized jointly with the model weights.

At inference time, a text classifier predicts the most appropriate task embedding based on the instruction. The embedding guides the model to apply the correct type of transformation - like a "texture change" vs. "object removal".

Without this conditioning, Emu Edit struggled to choose suitable edits for ambiguous instructions like "Change the sky to be gray" which could involve texture or global edits.

Results: Significant Improvements in Editing Fidelity

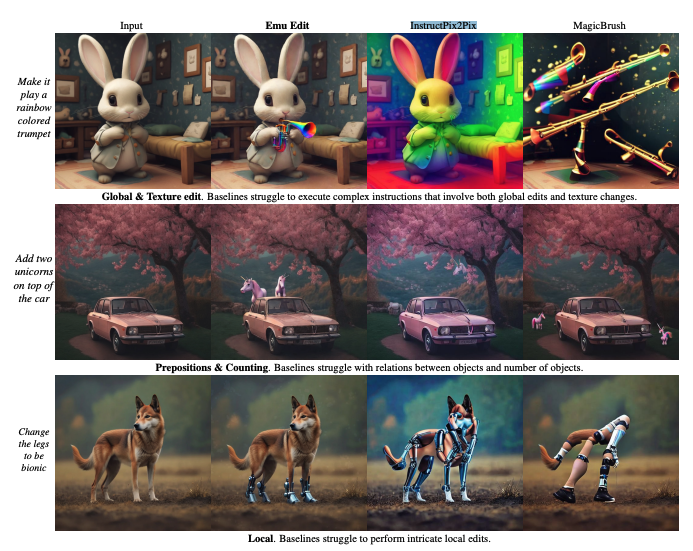

The researchers rigorously evaluated Emu Edit against prior instruction-based editing systems like InstructPix2Pix. For quantitative metrics, they measured the faithfulness of the edits to the instruction text and preservation of unrelated image regions.

Emu Edit achieved state-of-the-art performance on these automated metrics across a range of editing tasks. The authors also released a benchmark that covers seven different image editing tasks.

Ablation studies validated that the multi-task training with vision and editing tasks improved performance on region-based edits. And the task embeddings were critical for following instructions accurately.

Furthermore, with just a few examples Emu Edit could adapt to wholly new tasks like image inpainting via "task inversion" - updating just the embedding rather than retraining the entire model.

Key Takeaways and Limitations

Emu Edit represents a significant advance in building AI systems that can precisely interpret and execute natural language image edit instructions. But there is still room for progress. It sometimes struggles with highly complex instructions and could benefit from tighter integration with large language models. Regardless, Emu Edit provides a strong foundation to build upon using multi-task learning.

Overall, by training on a diverse mix of tasks with task-specific conditioning, Emu Edit demonstrates that AI editing abilities can begin to approach human levels. This brings us one step closer to making sophisticated image editing accessible to all through natural language.

Subscribe or follow me on Twitter for more content like this!

Comments ()