Marrying Pixel and Latent Diffusion Models for Efficient and High-Quality Text-to-Video Generation

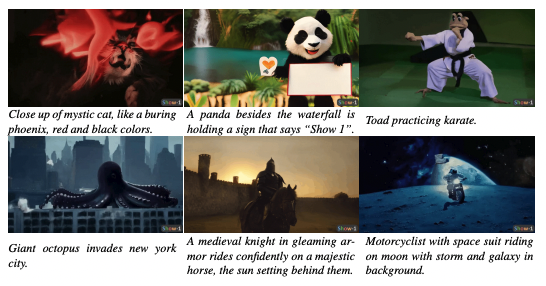

A new paper proposes Show-1, a hybrid model that combines pixel and latent diffusion for efficient high-quality text-to-video generation.

Generating convincing, high-fidelity videos from textual descriptions is an exciting frontier in artificial intelligence research. However, existing techniques for text-to-video generation face difficult tradeoffs between alignment accuracy, visual quality, and computational efficiency. In a new paper (project page link here), researchers from the National University of Singapore propose an innovative hybrid approach called Show-1 that combines pixel-based and latent diffusion models to achieve the best of both worlds.

Subscribe or follow me on Twitter for more content like this!

The Challenge of Text-to-Video Generation

Transforming abstract concepts and sparse word descriptions into rich, realistic videos is an extremely challenging undertaking. To generate coherent video frames that smoothly transition, a model needs temporal understanding. The generated clips should also seamlessly align with all the visual details specified in the text prompt. And of course, the final videos must exhibit photorealistic fidelity.

On top of these technical challenges, text-to-video models must also be efficient enough to deploy at scale. Generating high-resolution video requires immense computational resources. So reducing memory usage and inference time is critical for practical applications.

Recent text-to-video models based solely on pixel or latent diffusion models have not been able to fully solve these multifaceted challenges simultaneously. There are inherent tradeoffs between different priorities. What are they?

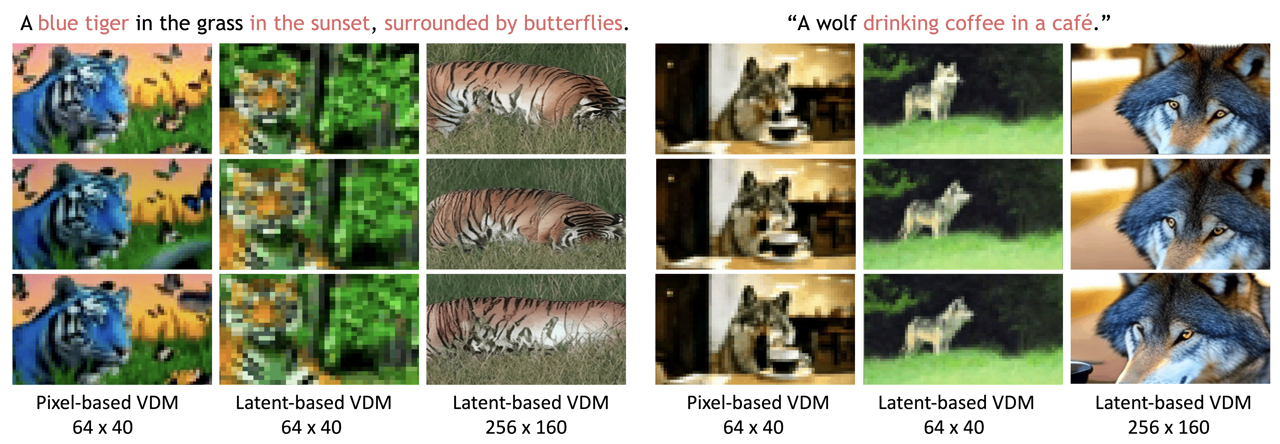

Pixel Models: Precision Alignment But Computational Cost

Pixel-based diffusion models directly operate on the raw pixel values of images. By working directly in the pixel space, these models can precisely correlate visual features with keywords in text descriptions. This leads to very strong synchronization between generated videos and textual prompts.

However, processing high-resolution videos in the pixel domain requires huge amounts of compute and memory. For example, the state-of-the-art Imagen Video model demands extensive resources to generate 1-minute 480p videos after training on 128 TPU cores for 3 days.

So while pixel models achieve excellent text-video alignment, their computational requirements limit practical applications.

Latent Models: Efficient But Poorer Alignment

Latent diffusion models reduce video data into a compact latent representation before processing. This compressed domain enables very efficient generation of high-resolution videos with less compute.

However, the tradeoff is that compressing videos into a small latent space discards many fine visual details needed to match text descriptions. Latent models struggle to retain the full semantic alignment of pixel models in their low-dimensional latent embeddings.

So latent diffusion models are fast and require fewer resources, but the videos they generate tend to deviate from the descriptive text prompts.

Best of Both Worlds: A Hybrid Approach

Given the complementary strengths and weaknesses of pixel and latent models, researchers from NUS wondered if combining both techniques could inherit the advantages of each. Their proposed Show-1 model does exactly that through a mixed approach.

Pixel Diffusion for Low-Resolution Foundation

Show-1 first utilizes a pixel-based diffusion model to generate a low-resolution video keyframe sequence. Operating directly on pixel values at low-res allows preserving semantic alignment without heavy compute requirements.

The researchers found pixel models are especially adept at producing low-res videos with accurate text matching. This establishes a strong visual foundation aligned to the text prompt.

Latent Diffusion for Efficient Super-Resolution

Next, Show-1 feeds the low-res video into a latent diffusion model for upsampling. Interestingly, while latent models struggle with text-video alignment when generating videos independently, they actually excel at super-resolution: enhancing resolution while retaining the original visuals.

By providing the pixel model's video as context, the latent model learns to function as an efficient super-resolution expert. It upsamples the low-res video to high-res, enriching detail while preserving the aligned semantics from the pixel model.

Unified Model Achieves Precision and Efficiency

By chaining the pixel model's alignment capabilities with the latent model's super-resolution prowess, Show-1 achieves unprecedented text-video fidelity with high computational efficiency.

Compared to solely pixel or latent models, Show-1 requires 15x less GPU memory while generating videos that match text prompts more accurately. This hybrid approach captures the synergistic strengths of both diffusion techniques in one unified framework.

Conclusion: Blending Complementary Techniques to Raise the Bar

The Show-1 model demonstrates how thoughtfully combining complementary methodologies can overcome the limitations of individual techniques. While pixel and latent models make certain tradeoffs, the researchers show that chaining these models together can inherit the advantages of each.

Beyond text-to-video generation, the concepts behind Show-1 can provide guidance for improving other multimedia tasks. Exploring how to creatively blend pixel, latent, and other diffusion techniques opens new frontiers for building more powerful and efficient generative models.

I am still eager to see a long-form text-to-video model (one that can produce more than a few seconds of good footage). Hopefully, this gets us a bit closer to that dream!

Subscribe or follow me on Twitter for more content like this!

Comments ()