Combining Thermodynamics and Diffusion Models for Collision-Free Robot Motion Planning

A new AI-based method to help robots navigate cluttered environments

Researchers from Yonsei University and UC Berkeley recently published a paper describing an innovative artificial intelligence technique for improving robot navigation in complex, unpredictable environments. Their method leverages a tailored diffusion model to generate collision-free motion plans from visual inputs. This approach aims to address long-standing challenges in deploying autonomous robots in unstructured real-world spaces. Let's see what they found, and assess how generalizable these findings will be to fields like warehouse robotics, manufacturing, and self-driving cars.

The Persistent Obstacles to Practical Robot Navigation

Enabling nimble and reliable robot navigation in unconstrained spaces remains an elusive grand challenge in the field of robotics. Robots hold immense potential to take on dangerous, dull, dirty, and inaccessible tasks in domains like warehouses, mines, oil rigs, surveillance, emergency response, and ... elder care. However, for autonomous navigation to become practical and scalable, robots must competently handle changing environments cluttered with static and dynamic obstacles.

Several stubborn challenges have impeded progress:

- Mapping Requirements - Most navigation solutions demand an accurate 2D or 3D map of the environment beforehand. This largely constrains robots to narrow industrial settings with fixed infrastructure. Pre-mapping and localization are infeasible in highly dynamic spaces.

- Brittleness to Unexpected Obstacles - Robots struggle to respond in real-time to novel obstacles and changes in mapped environments. Even small deviations can lead to catastrophic failures.

- Goal Observability - Determining if target destinations are reachable is difficult without exhaustive real-time sensing of all surrounding obstacles. Robots easily get trapped oscillating between blocked goals and obstacles.

- Reliance on Expensive Sensors - LIDAR, depth cameras, and other sensors are often necessary during deployment for collision avoidance, driving up costs. Visual cameras alone are affordable but provide limited 3D information.

These realities have severely limited the feasibility of robot autonomy in small spaces or safety-critical applications. New techniques are needed to address the issues above with minimal sensing requirements.

Diffusion Models - A Promising AI Approach for Robotic Control

In recent years, AI researchers have increasingly explored using diffusion models for generating robotic behaviors and control policies. Diffusion models are probabilistic deep neural networks trained to reverse the effects of noise injection on data: noise is added to training examples step by step, and the network learns to undo it. Once trained, they can generate diverse samples from pure noise - whether realistic images, coherent text, or robot motions.
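To make the "noise injection" half of that description concrete, here is a minimal sketch of the forward noising step applied to a short 2D path. It assumes the common DDPM-style setup with a linear noise schedule; the paper's actual schedule and kernel may differ, and the function names (`linear_beta_schedule`, `cumulative_signal`, `noise_waypoints`) are made up for this illustration.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, rising linearly (an assumed, commonly used schedule)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_signal(betas):
    """alpha_bar[t] = product of (1 - beta_s) for s <= t, the signal variance left at step t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def noise_waypoints(waypoints, t, alpha_bars, rng):
    """Sample a noised copy of a 2D path at step t. Returns (noisy_path, noise)."""
    signal = math.sqrt(alpha_bars[t])
    sigma = math.sqrt(1.0 - alpha_bars[t])
    noisy, noise = [], []
    for x, y in waypoints:
        ex, ey = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        noise.append((ex, ey))
        noisy.append((signal * x + sigma * ex, signal * y + sigma * ey))
    return noisy, noise

# Hypothetical three-waypoint path, noised at an early and a late step.
alpha_bars = cumulative_signal(linear_beta_schedule(1000))
rng = random.Random(0)
path = [(0.0, 0.0), (1.0, 0.5), (2.0, 1.0)]
slightly_noisy, _ = noise_waypoints(path, 10, alpha_bars, rng)
mostly_noise, _ = noise_waypoints(path, 999, alpha_bars, rng)
```

In this setup, a denoising network would be trained to predict `noise` given the noisy path and the step `t`. Generation then runs the process in reverse, starting from pure noise.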

These models present several properties that could prove useful:

- Multimodality - Ability to produce varied outputs and goals from the same input, choosing whichever is feasible.

- Guided Generation - Goals and motions can be guided toward desirable targets and distributions.

- Training Efficiency - Avoids explicitly modeling all environment physics and dynamics the way simulators do.

- Stochasticity - Noisy outputs can better avoid local minima and overfitting.

Researchers have begun applying diffusion models to robotic manipulation and navigation tasks with promising initial results. This motivated the UC Berkeley and Yonsei teams to explore new ways to improve navigation robustness specifically.

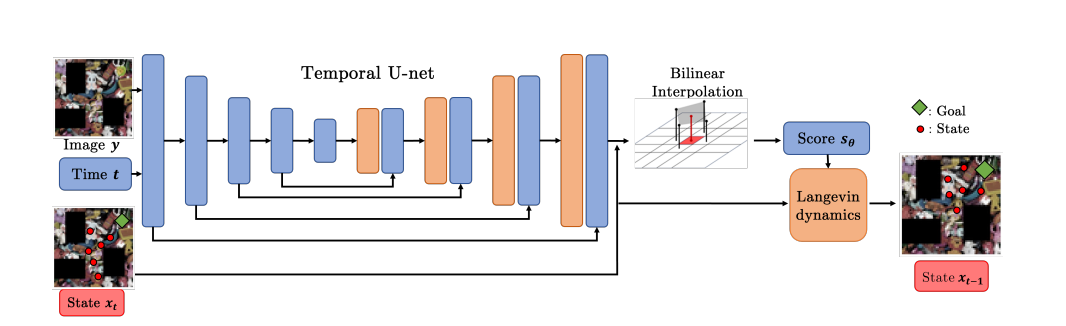

A Custom Collision-Avoiding Diffusion Model for Navigation

The key innovation proposed in this paper is a novel diffusion model tailored for collision-free robot navigation from only visual inputs. The researchers accomplished this by:

- Designing a specialized collision-avoiding diffusion kernel that mimics how heat disperses around insulating obstacles. With this kernel, the model learns motions that avoid collisions.

- Training the model end-to-end to take a visual map and simultaneously generate reachable goals along with viable motion plans.

- Requiring only a static visual map at inference time. No other sensing needed for obstacles.
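The heat analogy is easier to picture with a toy example. Below is a minimal sketch of heat spreading over a grid map in which obstacle cells act as perfect insulators. It illustrates the physical intuition only and is not the kernel from the paper; the function name `diffuse_heat`, the boolean grid layout, and the `rate` parameter are all assumptions made for this example.

```python
def diffuse_heat(grid, heat, rate=0.2, steps=1):
    """Explicit finite-difference heat diffusion with insulating obstacles.

    grid[r][c] is True for an obstacle cell; heat[r][c] is the current heat.
    Heat is exchanged only between adjacent free cells, so nothing flows into
    or through obstacles, or past the map border. Keep rate <= 0.25 so the
    4-neighbour update stays stable.
    """
    rows, cols = len(grid), len(grid[0])
    for _ in range(steps):
        new_heat = [row[:] for row in heat]  # copy so every update reads old values
        for r in range(rows):
            for c in range(cols):
                if grid[r][c]:
                    continue  # obstacle cells never gain or lose heat
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    nr, nc = r + dr, c + dc
                    if 0 <= nr < rows and 0 <= nc < cols and not grid[nr][nc]:
                        new_heat[r][c] += rate * (heat[nr][nc] - heat[r][c])
        heat = new_heat
    return heat

# Hypothetical 5x5 map: a vertical wall in column 2 with a single gap at row 4.
grid = [[c == 2 and r != 4 for c in range(5)] for r in range(5)]
heat = [[0.0] * 5 for _ in range(5)]
heat[0][0] = 1.0  # all heat starts in the top-left corner
spread = diffuse_heat(grid, heat, rate=0.2, steps=200)
```

If you read the heat map as "where a noised robot position might end up", the point of the analogy becomes clear: the mass spreads through the gap in the wall, but no mass ever lands inside an obstacle.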

This approach could bypass the need for expensive LIDAR and depth sensing. It also avoids explicit programming of navigation heuristics. The model learns these simply from map data.

They drew inspiration from prior works like Urain et al. that used diffusion models for navigation but required real-time depth inputs. Their method needs only visual maps, enabling lower-cost robots.

Experiments Demonstrate Potential Capabilities

The researchers tested their approach extensively in a simple 2D simulation environment with randomly generated maps containing insurmountable obstacles. Across 100 episodes with different maps, their model achieved:

- 98-100% success in navigating to feasible goals while avoiding collisions.

- Consistently lower divergence between generated and true goal distributions compared to baselines.

- Strong performance in multi-modal scenarios with multiple possible goals. The model learned to ignore unrealistic, blocked goals.
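For readers wondering how numbers like these are typically computed, here is a minimal sketch of the two kinds of metric. The summary above does not say which divergence the authors used, so KL divergence over a discrete set of goals is an assumption here. The helper names and the episode record format (`reached_goal`, `collided`) are likewise hypothetical.

```python
import math
from collections import Counter

def goal_distribution(goals, goal_ids, smoothing=1e-6):
    """Empirical probability of each goal id, with a small additive smoothing term."""
    counts = Counter(goals)
    total = len(goals) + smoothing * len(goal_ids)
    return {g: (counts[g] + smoothing) / total for g in goal_ids}

def kl_divergence(p, q):
    """KL(p || q) in nats for two distributions over the same keys."""
    return sum(p[k] * math.log(p[k] / q[k]) for k in p)

def success_rate(episodes):
    """Fraction of episodes that reached the goal without any collision."""
    wins = sum(1 for e in episodes if e["reached_goal"] and not e["collided"])
    return wins / len(episodes)

# Hypothetical data: goal "C" is blocked, so it never appears among the true goals.
goal_ids = ["A", "B", "C"]
true_goals = ["A"] * 50 + ["B"] * 50
good_model = ["A"] * 48 + ["B"] * 52
naive_model = ["A"] * 40 + ["B"] * 30 + ["C"] * 30  # still picks the blocked goal

p_true = goal_distribution(true_goals, goal_ids)
kl_good = kl_divergence(p_true, goal_distribution(good_model, goal_ids))
kl_naive = kl_divergence(p_true, goal_distribution(naive_model, goal_ids))
```

In this toy data, `kl_naive` comes out larger than `kl_good` because the naive model keeps putting probability on the blocked goal. That is the behavior a lower divergence score is meant to reward.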

The proposed model significantly outperformed traditional behavior cloning and unmodified diffusion models. For example, a standard diffusion model collided with obstacles 34% of the time.

These results suggest their method can smoothly navigate cluttered spaces while only selecting achievable goals accessible to the robot. This is a promising capability for real-world application.

Limitations and Need for Real-World Validation

While these initial simulations showcase capabilities on simplified 2D maps, many open questions remain regarding real-world efficacy:

- How the approach will scale to complex 3D environments like cities, forests, or disaster sites is unclear.

- Simulated dynamics may not capture unpredictable real-world interactions, motions, and sensor noise.

- No physical robot tests were performed, limiting validity. Simulation-to-reality gaps are common in robotics.

- Neural networks can make unpredictable mistakes when deployed outside their training domain.

To make a compelling case for practical usage, the researchers will need to replicate these results on physical robot platforms in varied real-world environments. Extensive testing is critical to identify failure cases and improve safety. Computational performance metrics should also be benchmarked.

An Encouraging Step Towards Practical Robot Autonomy

This research presents an encouraging step towards enabling ubiquitous robot navigation in unstructured spaces. The novel concepts of a collision-avoiding diffusion model and heat dispersion-inspired kernel exhibit intriguing potential (I really like using heat dispersion as an inspiration - very clever). If the approach can be proven through rigorous real-world validation, it could help overcome fundamental roadblocks in deploying economical, competent mobile robots.

However, as is common in AI and robotics research, I think substantial gaps remain between simulated demonstrations and real-world mastery. I'll be eagerly watching for follow-up work evolving and validating this method on physical systems.
