PubDef: Defending Against Transfer Attacks Using Public Models

Adversarial attacks pose a serious threat to ML models. But most proposed defenses hurt performance on clean data too much to be practical.

Adversarial attacks pose a serious threat to the reliability and security of machine learning systems. By making small perturbations to inputs, attackers can cause models to produce completely incorrect outputs. Defending against these attacks is an active area of research, but most proposed defenses have major drawbacks.

This paper from researchers at UC Berkeley (a code repository is also available) introduces a new defense called PubDef that narrows this trade-off for one realistic class of attacks: transfer attacks that rely on publicly available models. Against these attacks, PubDef achieves much higher robustness than prior defenses while largely preserving accuracy on clean inputs. This post explains the context of the research, how PubDef works, its results, and its limitations. Let's go.

Subscribe or follow me on Twitter for more content like this!

The Adversarial Threat Landscape

Many types of adversarial attacks have been studied. The most commonly studied are white-box attacks, in which the adversary has full access to the model's parameters and architecture. This lets them compute gradients to precisely craft inputs that cause misclassifications. Defenses such as adversarial training have been proposed against them, but they substantially reduce accuracy on clean inputs.

Transfer attacks are more realistic. The attacker uses an accessible surrogate model to craft adversarial examples, in the hope that these examples transfer to the victim model and fool it too. Transfer attacks are easy to execute and require no access to the victim model, not even queries.
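To make the idea concrete, here is a minimal sketch that assumes toy two-feature logistic-regression models in place of real networks (the weights, the input, and the `eps` value are all hypothetical). The attacker computes a one-step FGSM perturbation using only the surrogate's gradient; the sketch then checks whether that perturbation also flips the victim's prediction.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def fgsm_on_surrogate(weights, bias, x, label, eps):
    """One-step FGSM computed only from the surrogate's parameters.

    For logistic regression with cross-entropy loss, the gradient of the
    loss with respect to the input is (p - y) * w, so we step along its sign.
    """
    p = predict(weights, bias, x)
    grad = [(p - label) * w for w in weights]
    return [xi + eps * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]

# Hypothetical toy models: the attacker can use the surrogate but never touches the victim.
surrogate_w, surrogate_b = [1.0, 1.0], 0.0
victim_w, victim_b = [1.2, 0.8], -0.1  # similar, but not identical, decision boundary

x, y = [0.3, 0.2], 1  # a clean input of class 1
x_adv = fgsm_on_surrogate(surrogate_w, surrogate_b, x, y, eps=0.4)

print("victim correct on clean input:", predict(victim_w, victim_b, x) > 0.5)
print("victim correct on transferred input:", predict(victim_w, victim_b, x_adv) > 0.5)
```

Because the two toy models have similar decision boundaries, the perturbation computed on the surrogate also pushes the victim's prediction across its boundary, which is exactly the property a transfer attack relies on.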

Query-based attacks repeatedly query the victim model and use its outputs to infer its decision boundaries. Defenses exist that detect and limit these attacks by monitoring usage.
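As an illustration of usage monitoring, here is a generic sliding-window query budget per client. It is a hypothetical sketch (the `QueryMonitor` class and its parameters are invented for this example, not taken from any specific defense), and real detectors for query-based attacks are usually more sophisticated, for example by also tracking how similar successive queries are.

```python
import time
from collections import defaultdict, deque

class QueryMonitor:
    """Hypothetical per-client query budget over a sliding time window."""

    def __init__(self, max_queries, window_seconds):
        self.max_queries = max_queries
        self.window = window_seconds
        self.history = defaultdict(deque)  # client id -> timestamps of accepted queries

    def allow(self, client_id, now=None):
        """Record and accept the query if the client is under budget, else reject it."""
        now = time.monotonic() if now is None else now
        q = self.history[client_id]
        # Drop timestamps that have fallen out of the window.
        while q and now - q[0] >= self.window:
            q.popleft()
        if len(q) >= self.max_queries:
            return False
        q.append(now)
        return True

monitor = QueryMonitor(max_queries=3, window_seconds=60.0)
print([monitor.allow("alice", now=t) for t in (0.0, 1.0, 2.0, 3.0)])
```

The fourth query inside the same minute is rejected. A limit like this slows down query-based attacks, but it does nothing against transfer attacks, which never query the victim at all.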

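Overall, transfer attacks are very plausible in practice, yet typical defenses handle them poorly: query-limiting systems never see them, because a transfer attack needs no queries, and white-box adversarial training covers them only at a large cost in clean accuracy.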

A Game Theory Viewpoint

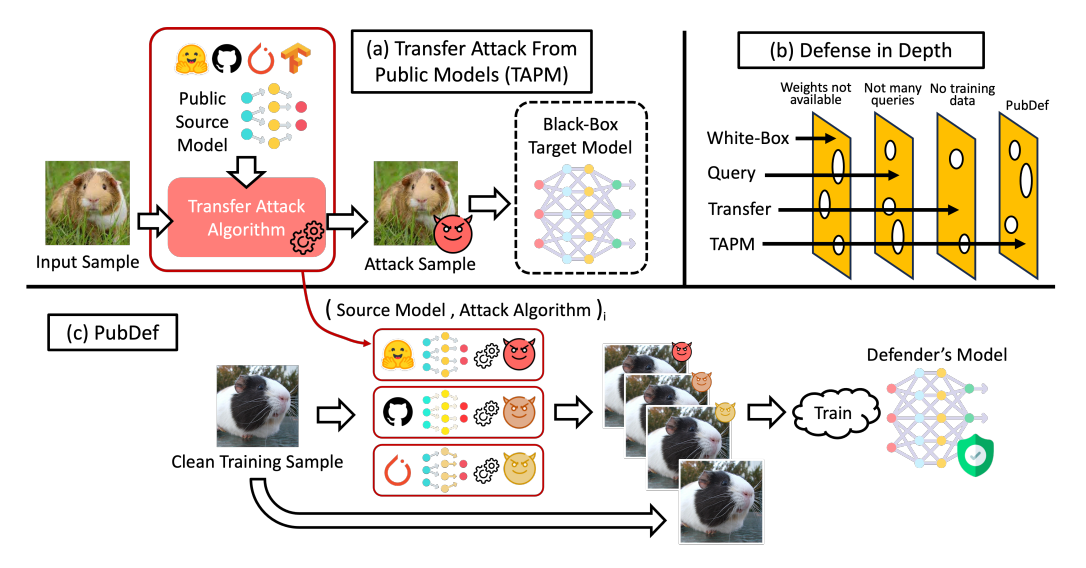

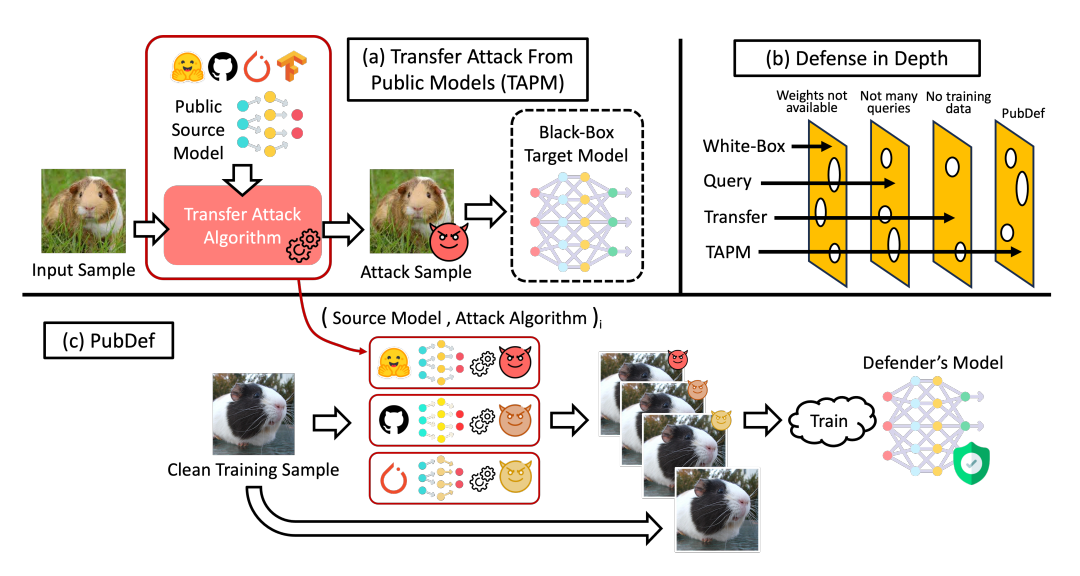

PubDef is designed specifically to resist transfer attacks from publicly available models. The authors formulate the interaction between attacker and defender as a game:

- The attacker's strategy is to pick a public source model and attack algorithm to craft adversarial examples.

- The defender's strategy is to choose the model's parameters (that is, how to train it) so that it is robust.

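Game theory provides tools for reasoning about optimal strategies. Because the attacker is free to pick any public source model, the defender should not optimize against a single one. Instead, it trains against attacks from multiple source models simultaneously. This ensemble-like approach makes the model robust to a diverse set of attacks rather than only to the one it was trained on.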
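A toy version of this game: given a table of error rates (made-up numbers for illustration, not results from the paper), the attacker best-responds by picking the strategy that maximizes the defender's error, and the defender picks the model whose worst-case error is smallest. This sketch only considers pure strategies; a full game-theoretic treatment would also allow mixed (randomized) strategies.

```python
# Hypothetical error rates, NOT from the paper.
# Rows: candidate defender models. Columns: attacker strategies
# (each one a public source model paired with an attack algorithm).
error = {
    "trained_vs_A": {"A": 0.10, "B": 0.60},
    "trained_vs_B": {"A": 0.55, "B": 0.12},
    "trained_vs_A_and_B": {"A": 0.20, "B": 0.25},
}

def attacker_best_response(row):
    """The attacker picks the strategy that maximizes the defender's error."""
    return max(row, key=row.get)

def defender_minimax(error):
    """The defender picks the model whose worst-case error is smallest."""
    return min(error, key=lambda m: error[m][attacker_best_response(error[m])])

for model, row in error.items():
    worst = attacker_best_response(row)
    print(f"{model}: worst attack = {worst}, error = {row[worst]:.2f}")
print("minimax choice:", defender_minimax(error))
```

The model trained against both attacks wins even though it is not the best against either attack individually, which mirrors the intuition for training against many source models at once.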

How PubDef Works

PubDef trains models by:

- Selecting a diverse set of publicly available source models

- Using a training loss that minimizes error against transfer attacks from these source models

This adversarial training process tunes the model to resist the specific threat model of transfers from public sources.
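The following minimal sketch shows the structure of this training process under heavy simplifying assumptions: toy logistic-regression models instead of neural networks, one-step FGSM as the only attack, and made-up data and source models. None of these names come from the PubDef code. Adversarial examples are crafted on fixed public source models, and the defender is trained by gradient descent to classify them correctly.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def prob(model, x):
    """P(y = 1 | x) for a logistic-regression model stored as (weights, bias)."""
    w, b = model
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(model, x, y, eps):
    """One-step FGSM; the input gradient of the log-loss is (p - y) * w."""
    p = prob(model, x)
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, model[0])]

def transfer_set(sources, data, eps):
    """Adversarial examples crafted on each fixed public source model.

    The sources never change during training, so these examples do not
    depend on the defender's parameters and can be generated once up front.
    """
    return [(fgsm(src, x, y, eps), y) for src in sources for x, y in data]

def log_loss(model, examples):
    """Mean binary cross-entropy, with probabilities clipped for numerical safety."""
    total = 0.0
    for x, y in examples:
        p = min(max(prob(model, x), 1e-12), 1 - 1e-12)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(examples)

def train_defender(sources, data, eps=0.3, lr=0.5, steps=200):
    """Full-batch gradient descent on the transferred examples only."""
    examples = transfer_set(sources, data, eps)
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(steps):
        gw, gb = [0.0] * len(w), 0.0
        for x, y in examples:
            err = prob((w, b), x) - y  # derivative of the log-loss w.r.t. the logit
            gw = [g + err * xi for g, xi in zip(gw, x)]
            gb += err
        n = len(examples)
        w = [wi - lr * g / n for wi, g in zip(w, gw)]
        b -= lr * gb / n
    return (w, b)

# Hypothetical toy data and public source models.
data = [([1.0, 0.5], 1), ([0.5, 1.0], 1), ([0.4, -0.1], 1),
        ([-1.0, -0.5], 0), ([-0.5, -1.0], 0), ([-0.1, -0.4], 0)]
sources = [([1.0, 1.0], 0.0), ([1.0, -0.5], 0.0)]

model = train_defender(sources, data)
attacks = transfer_set(sources, data, eps=0.3)
print("transfer loss before training:", log_loss(([0.0, 0.0], 0.0), attacks))
print("transfer loss after training:", log_loss(model, attacks))
```

One structural difference from white-box adversarial training is visible here: because the source models are fixed, the transferred examples do not depend on the defender's current weights, whereas white-box adversarial training must recompute its attack against the model being trained at every step.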

The training loss dynamically weights terms based on the current error rate against each attack. This focuses training on the most effective attacks.
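The post does not spell out the exact weighting rule, so the sketch below assumes a simple one: each attack's weight is its current error rate divided by the sum of all error rates, with a uniform fallback when the model currently resists every attack. The attack names and numbers are hypothetical.

```python
def dynamic_weights(error_rates):
    """Assumed scheme: w_i = e_i / sum_j e_j, uniform if every error rate is zero."""
    total = sum(error_rates.values())
    if total == 0:
        return {k: 1.0 / len(error_rates) for k in error_rates}
    return {k: e / total for k, e in error_rates.items()}

def weighted_loss(losses, error_rates):
    """Combine per-attack loss terms using the dynamic weights."""
    weights = dynamic_weights(error_rates)
    return sum(weights[k] * losses[k] for k in losses)

# Hypothetical per-attack statistics from the most recent training batch.
error_rates = {"source_A/pgd": 0.30, "source_B/pgd": 0.10, "source_C/fgsm": 0.0}
losses = {"source_A/pgd": 1.2, "source_B/pgd": 0.4, "source_C/fgsm": 0.05}

print(dynamic_weights(error_rates))
print(weighted_loss(losses, error_rates))
```

The attack the model currently fails on most often gets most of the weight, and an attack it already resists gets none. In a real training loop the losses would be differentiable tensors, while the error rates would presumably be treated as constants so that no gradient flows through the weights.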

The source models are chosen to cover diverse training methods (standard training, adversarial training, corruption-robust training, and so on). This provides broad coverage against attacks from public models that the defender did not explicitly train against.
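One simple way to enforce this coverage is to group candidate public models by training method and keep one representative per group. In this sketch the catalog entries are hypothetical, and "first listed" stands in for whatever selection criterion one would actually use within a group.

```python
# Hypothetical catalog of public models; names and groupings are illustrative.
public_models = [
    {"name": "resnet50_standard", "training": "standard"},
    {"name": "vit_standard", "training": "standard"},
    {"name": "wrn_linf_at", "training": "adversarial_linf"},
    {"name": "wrn_l2_at", "training": "adversarial_l2"},
    {"name": "resnet_augmix", "training": "corruption_robust"},
]

def pick_one_per_group(models):
    """Keep the first model listed for each training method (placeholder criterion)."""
    chosen = {}
    for m in models:
        chosen.setdefault(m["training"], m["name"])
    return list(chosen.values())  # dicts preserve insertion order

print(pick_one_per_group(public_models))
```

Grouping by training method rather than by architecture reflects the idea in the text: models trained in similar ways tend to produce similar adversarial examples, so one representative per method covers more ground than several near-duplicates.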

Experimental Results

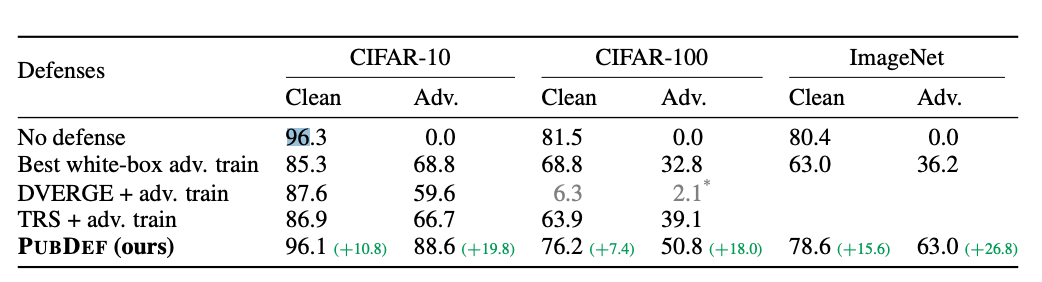

The authors evaluated PubDef against 264 different transfer attacks (24 public source models combined with 11 attack algorithms) on the CIFAR-10, CIFAR-100, and ImageNet datasets.

The results show that PubDef significantly outperforms prior defenses such as adversarial training. Accuracy under the strongest of these transfer attacks (PubDef vs. the best adversarially trained model):

- CIFAR-10: 89% vs. 69%

- CIFAR-100: 51% vs. 33%

- ImageNet: 62% vs. 36%
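"Accuracy under the strongest attack" can be computed by evaluating each attack separately and keeping the lowest accuracy. A minimal sketch, assuming each model is a plain prediction function and each attack maps a clean example to a perturbed input; the toy 1-D classifier and the two shift attacks are hypothetical.

```python
def accuracy(model, examples):
    """Fraction of (input, label) pairs the model classifies correctly."""
    return sum(model(x) == y for x, y in examples) / len(examples)

def worst_case_accuracy(model, clean_examples, attacks):
    """Evaluate every attack separately and return the strongest one and its accuracy."""
    results = {}
    for name, attack in attacks.items():
        adversarial = [(attack(x, y), y) for x, y in clean_examples]
        results[name] = accuracy(model, adversarial)
    strongest = min(results, key=results.get)
    return strongest, results[strongest]

# Hypothetical 1-D toy setup.
model = lambda x: int(x > 0)
clean_examples = [(1.0, 1), (2.0, 1), (-1.0, 0), (-2.0, 0)]
attacks = {
    "small_shift": lambda x, y: x - 0.5 if y == 1 else x + 0.5,
    "large_shift": lambda x, y: x - 1.5 if y == 1 else x + 1.5,
}

print(worst_case_accuracy(model, clean_examples, attacks))
```

Reporting the minimum over attacks, rather than the average, is the conservative choice: it assumes the attacker uses whichever public source model and attack algorithm works best.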

PubDef achieves this improvement with only a small drop in clean accuracy relative to an undefended model (the largest drop is on CIFAR-100):

- CIFAR-10: 96.3% to 96.1%

- CIFAR-100: 82% to 76%

- ImageNet: 80% to 79%
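To put the two sets of numbers side by side, this snippet only does the arithmetic on the figures reported above; nothing new is measured here.

```python
# Accuracy in % as reported in this post.
# Under the strongest transfer attack: (PubDef, adversarial training)
robust = {"CIFAR-10": (89, 69), "CIFAR-100": (51, 33), "ImageNet": (62, 36)}
# On clean inputs: (undefended model, PubDef)
clean = {"CIFAR-10": (96.3, 96.1), "CIFAR-100": (82, 76), "ImageNet": (80, 79)}

def summarize(robust, clean):
    """Return {dataset: (robustness gain over AT, clean-accuracy drop)} in points."""
    return {name: (robust[name][0] - robust[name][1],
                   round(clean[name][0] - clean[name][1], 1))
            for name in robust}

for name, (gain, drop) in summarize(robust, clean).items():
    print(f"{name}: +{gain} points under attack, -{drop} points on clean inputs")
```

The robustness gains range from 18 to 26 points, while the clean-accuracy cost ranges from 0.2 points on CIFAR-10 to 6 points on CIFAR-100.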

So, against transfer attacks from public models, PubDef provides substantially better robustness than adversarial training with a much smaller impact on accuracy on unperturbed data.

Limitations and Future Work

PubDef focuses specifically on transfer attacks from public models. It does not address other threats such as white-box attacks. Some other limitations:

- Relies on model secrecy: if the defended model's weights leak, the attacker is back in the white-box setting

- Can be circumvented by an attacker who trains their own private surrogate model instead of using a public one

- Requires separate defenses against query-based attacks

Within its intended scope, PubDef provides a pragmatic defense that aligns with realistic attacker capabilities. But further work is needed to handle other threats and relax reliance on secrecy.

Overall, this work makes significant progress toward deployable defenses. By targeting a realistic threat model, it obtains large robustness gains at a small cost in clean accuracy. These ideas will hopefully spur further research leading to even more effective and practical defenses.

Conclusion

Adversarial attacks present a pressing challenge for deploying reliable machine learning systems. While many defenses have been proposed, few have provided substantial gains in robustness without also degrading performance on clean inputs.

PubDef represents a promising step towards developing defenses that can be practically deployed in real-world systems. There is still work to be done in handling other types of attacks and reducing reliance on model secrecy. However, the techniques presented here - modeling interactions through game theory, training against diverse threats, and focusing on feasible attacks - provide a blueprint for further progress.

Adversarial attacks will likely remain an issue for machine learning security. As models continue to permeate critical domains like healthcare, finance, and transportation, the need for effective defenses becomes even more pressing. PubDef demonstrates that by aligning defenses with realistic threats, substantial gains in robustness are possible without prohibitive trade-offs. Developing pragmatic defenses that impose minimal additional costs is likely the most viable path toward safe and reliable deployment of machine learning.

