DeepMind's X-Embodiment and RT-X: Training Robots to Learn from Each Other

Just as people acquire new skills by observing others, robots also benefit from pooling knowledge

Robotics has made tremendous strides in recent years. However, most robots today remain specialized tools, focused on single tasks in structured environments. This makes them inflexible - unable to adapt to new situations or generalize their skills to different settings. To achieve more human-like intelligence, robots need to become better learners. Just as people can acquire new skills by observing others, robots may also benefit from pooling knowledge across platforms.

A new paper from researchers at Google DeepMind, UC Berkeley, Stanford, and other institutions demonstrates how multi-robot learning could enable more capable and generalizable robotic systems. Their paper introduces two key resources to facilitate research in this area:

- The Open X-Embodiment dataset: A large-scale dataset of over 1 million robot episodes, collected from 22 different robots performing a diverse range of manipulation skills.

- RT-X models: Transformer-based neural networks trained on this multi-robot data that exhibit positive transfer - performing better on novel tasks compared to models trained on individual robots.

Subscribe or follow me on Twitter for more content like this!

The Promise of Multi-Robot Learning

The standard approach in robotics is to train models independently for each task and platform. This leads to fragmentation - models that do not share knowledge or benefit from each other's experience. It also limits generalization, as models overfit to narrow domains.

The Open X-Embodiment dataset provides a way to overcome this, by pooling data across research labs, robots, and skills. The diversity of data requires models to learn more broadly applicable representations. Further, by training a single model on data from different embodiments, knowledge can be transferred across robots through a shared feature space.
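To make the pooling idea concrete, here is a minimal sketch of drawing training examples from several datasets at once. It is not the paper's data pipeline: the function `sample_mixture`, the dataset names, and the equal weights are all hypothetical, chosen only to show how sampling weights let small datasets contribute alongside large ones.

```python
import random
from collections import Counter


def sample_mixture(datasets, weights, num_samples, seed=0):
    """Draw (dataset_name, episode) pairs from several datasets.

    datasets: dict mapping a dataset name to a list of episodes.
    weights:  dict mapping the same names to non-negative sampling weights.
    """
    rng = random.Random(seed)
    names = list(datasets)
    probs = [weights[name] for name in names]
    batch = []
    for _ in range(num_samples):
        # Pick a source dataset in proportion to its weight,
        # then pick one of its episodes uniformly at random.
        name = rng.choices(names, weights=probs, k=1)[0]
        batch.append((name, rng.choice(datasets[name])))
    return batch


# Hypothetical toy data: three sources with very different amounts of data.
toy_datasets = {
    "arm_a": [f"arm_a_ep{i}" for i in range(1000)],
    "arm_b": [f"arm_b_ep{i}" for i in range(50)],
    "arm_c": [f"arm_c_ep{i}" for i in range(5)],
}
# Equal weights upsample the small datasets relative to their raw size.
toy_weights = {"arm_a": 1.0, "arm_b": 1.0, "arm_c": 1.0}

batch = sample_mixture(toy_datasets, toy_weights, num_samples=3000)
counts = Counter(name for name, _ in batch)
print(counts)
```

With equal weights, each toy dataset supplies roughly a third of the batch even though their sizes differ by orders of magnitude. Weighting by dataset size instead would let the largest dataset dominate.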

This multi-robot learning approach is akin to pre-training in computer vision and NLP, where models like BERT leverage large corpora of unlabeled data before fine-tuning on downstream tasks. The researchers hope to bring similar benefits - increased sample efficiency, generalization, and transfer learning - to robotics through scale and diversity of data.

The Open X-Embodiment Dataset

The dataset contains over 1 million episodes of robotic manipulation demonstrations, recorded from 22 different robot platforms across 21 institutions worldwide. This covers a diverse range of robots, including various robot arms, bi-manual robots, mobile robots, and quadrupeds.

The data comprises 60 existing robotics datasets that were unified into a common format using RLDS (Reinforcement Learning Datasets), which stores data as episodes. Each example consists of synchronized video, actions, and task descriptions. The camera observations record the robot completing skills like grasping, placing, pushing, and wiping.
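As a rough illustration of what "synchronized video, actions, and task descriptions" means in practice, the sketch below models one episode as a list of time steps. The class and field names (`Step`, `Episode`, `make_episode`, `robot`, `instruction`) are simplified assumptions for this example, not the exact schema of RLDS or of the released dataset.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Step:
    image: List[List[int]]   # placeholder for one camera frame (tiny grayscale grid here)
    instruction: str         # natural-language task description
    action: List[float]      # robot command recorded at this time step
    is_first: bool = False
    is_last: bool = False


@dataclass
class Episode:
    robot: str
    steps: List[Step] = field(default_factory=list)


def make_episode(robot, instruction, frames, actions):
    """Zip synchronized frames and actions into one episode."""
    if len(frames) != len(actions):
        raise ValueError("frames and actions must be synchronized (same length)")
    steps = [
        Step(
            image=frame,
            instruction=instruction,
            action=action,
            is_first=(i == 0),
            is_last=(i == len(frames) - 1),
        )
        for i, (frame, action) in enumerate(zip(frames, actions))
    ]
    return Episode(robot=robot, steps=steps)


# Hypothetical two-step episode with tiny 2x2 "images".
frames = [[[0, 0], [0, 0]], [[255, 255], [255, 255]]]
actions = [[0.1, 0.0, 0.0], [0.0, 0.0, -0.1]]
episode = make_episode("toy_arm", "pick up the block", frames, actions)
print(len(episode.steps), episode.steps[0].instruction)
```

Once every source dataset is converted to one shared layout like this, a single training loop can read episodes from any robot without per-dataset parsing code.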

In total, the dataset demonstrates 527 unique skills, with each robot performing between 82 and 369 skills. This enables models to learn a wide repertoire of robotic capabilities from the aggregated data.

RT-X Models

To benchmark multi-robot learning on their dataset, the researchers train RT-X - Transformer-based models adapted from prior work (RT-1 and RT-2). The core RT-X architecture consists of:

- Computer vision backbone (EfficientNet) to process input images

- Natural language module to encode textual instructions

- Transformer layers to fuse vision and language

- Output heads for predicting robotic actions

Two RT-X variants are evaluated:

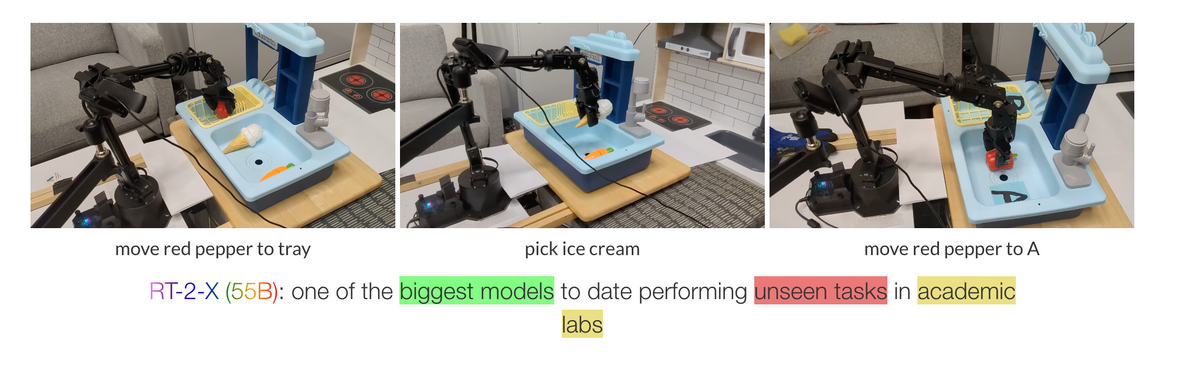

- RT-1-X: A 35M parameter model based on the RT-1 architecture.

- RT-2-X: A 55B parameter VLM-based model extending RT-2.

Both models are trained to take in third-person camera images and textual task descriptions, and output actions for controlling the robot arm. This leverages the multi-modal nature of the Open X-Embodiment data.
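One detail worth illustrating is how a Transformer can "output actions" at all. RT-1, which RT-1-X is based on, predicts each action dimension as one of 256 discrete bins rather than as a continuous number. The sketch below shows that style of discretization. The normalized range of -1 to 1, the 7-dimensional example action, and all function names are assumptions made for illustration, not details taken from the RT-X code.

```python
def discretize(value, low, high, num_bins=256):
    """Map a continuous value in [low, high] to an integer bin in [0, num_bins - 1]."""
    clipped = min(max(value, low), high)
    fraction = (clipped - low) / (high - low)
    # The upper bound itself would land in bin num_bins, so cap it.
    return min(int(fraction * num_bins), num_bins - 1)


def undiscretize(bin_index, low, high, num_bins=256):
    """Map a bin index back to the center of its interval."""
    return low + (bin_index + 0.5) * (high - low) / num_bins


def tokenize_action(action, low=-1.0, high=1.0):
    """Turn a continuous action vector into one discrete token per dimension."""
    return [discretize(a, low, high) for a in action]


def detokenize_action(tokens, low=-1.0, high=1.0):
    """Turn predicted tokens back into an approximate continuous action."""
    return [undiscretize(t, low, high) for t in tokens]


# Hypothetical 7-dimensional action (for example position, rotation, gripper).
action = [0.25, -1.0, 1.0, 0.0, 0.0, 0.0, 1.0]
tokens = tokenize_action(action)
recovered = detokenize_action(tokens)
print(tokens)
print(recovered)
```

Treating actions as tokens lets the model predict them with the same classification machinery it uses for language, at the cost of a small rounding error (at most half a bin width per dimension).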

Results: Positive Transfer from Multi-Robot Learning

The central result is that RT-X models trained on diverse data significantly outperform prior single-robot models:

- RT-1-X: 50% higher success rate on average than the baselines developed for each individual dataset

- RT-2-X: 3X better performance than RT-2 on emergent skills such as spatial reasoning

This suggests that multi-robot learning provides a better foundation, enabling positive transfer of knowledge to novel tasks and embodiments.
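A quick note on reading a figure like "50% higher": it is a relative gain over the baseline success rate, not a gain of 50 percentage points. The sketch below works through the arithmetic with made-up trial outcomes. The numbers and function names are hypothetical and are not results from the paper.

```python
def success_rate(outcomes):
    """Fraction of successful trials; outcomes is a list of booleans."""
    return sum(outcomes) / len(outcomes)


def relative_improvement(new_rate, baseline_rate):
    """Relative gain of new_rate over baseline_rate (0.5 means '50% higher')."""
    return (new_rate - baseline_rate) / baseline_rate


# Hypothetical trial outcomes, not numbers from the paper.
baseline_trials = [True] * 4 + [False] * 6   # 4 of 10 trials succeed
pooled_trials = [True] * 6 + [False] * 4     # 6 of 10 trials succeed

baseline = success_rate(baseline_trials)
pooled = success_rate(pooled_trials)
gain = relative_improvement(pooled, baseline)

print(f"baseline={baseline:.0%} pooled={pooled:.0%} relative gain={gain:.0%}")
```

In this toy case the success rate rises by 20 percentage points, from 40% to 60%, which corresponds to a relative improvement of about 50%.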

Further ablation studies analyze the impact of model scale, training techniques, and dataset composition. Key findings:

- Larger models make better use of diverse data, while smaller models underfit it

- Web pre-training improves generalization

- How the datasets are mixed affects how well skills transfer

Overall, the results substantiate the potential of multi-robot learning as a paradigm. Pooling the community's data improves both sample efficiency and generalization to new capabilities.

Limitations and Impact

The scope of this initial study is limited. All experiments involve only robotic arms in controlled lab settings. Further work is needed before the approach is viable in the real world:

- Testing on more diverse robot types and modalities

- Expanding beyond tabletop manipulation

- Benchmarking sim2real transfer

Nonetheless, it provides a proof-of-concept and tools for the community to build upon. The release of the large-scale Open X-Embodiment dataset and RT-X models lowers the barrier for further research into multi-robot learning.

Looking ahead, successfully translating these methods to real-world applications would be a sea change. Rather than isolated systems, pools of shared data could produce robots capable of rapidly acquiring new skills as needed - much as humans cross-pollinate knowledge through culture and education. Further research in this vein, conducted openly and responsibly, presents a promising path toward more general robot intelligence.
