Contrastive Decoding: A Promising New Technique for Boosting Reasoning in Large Language Models

How contrasting an "expert" LLM with an "amateur" LLM can improve reasoning performance.

Artificial intelligence researchers are constantly seeking new ways to improve the reasoning capabilities of large language models (LLMs) like GPT-3/4 and PaLM. One promising new technique called Contrastive Decoding has shown remarkable potential for boosting performance on reasoning tasks without requiring any additional training.

In this post, we'll dig into how Contrastive Decoding works, the key technical findings from initial experiments, and why this approach could be a game-changer for strengthening neural network reasoning. We'll summarize and speculate on the paper published on arXiv, which you can read here.

Subscribe or follow me on Twitter for more content like this!

The Limitations of Today's LLMs

LLMs like GPT-3, PaLM, and LaMDA have demonstrated incredibly human-like abilities for natural language generation. Feed them a few sentences of prompt, and they can produce paragraphs or even pages of astonishingly coherent text. However, despite their eloquence, these models still stumble when it comes to logical reasoning. For example, you could give one of these models a word problem about calculating percentages or unit conversion, and it might make silly arithmetic mistakes or fail to show its work. Solving math word problems reliably remains an elusive challenge for LLMs.

The broader field of AI has borrowed the term "System 1" thinking from cognitive psychology to characterize the intuitive abilities of neural networks like LLMs. Their pattern recognition prowess enables fluent generation of text, translation between languages, and even creation of music or code. However, "System 2" thinking - which encompasses deliberate logical reasoning - remains a key weakness. Improving systematic reasoning is critical for LLMs to robustly solve challenges like mathematical word problems, debugging code, making trustworthy predictions about the future, or advising about high-stakes decisions.

How Contrastive Decoding Boosts Reasoning

Contrastive Decoding is a clever technique that researchers at UC San Diego and Meta AI have applied to accentuate the reasoning capabilities of LLMs. Here's how it works at a high level:

- You use two models: a stronger "expert" LLM and a weaker "amateur" LLM

- When generating text, Contrastive Decoding favors outputs to which the expert model assigns a much higher likelihood than the amateur does

- This accentuates whatever skills the expert model has learned beyond the amateur model
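To make the idea concrete, here is a minimal Python sketch of the scoring step for a single next token. It assumes the score is the expert's log-probability minus the amateur's, and that a plausibility cutoff (called `alpha` here) first discards tokens the expert itself finds very unlikely. The function names, the toy probabilities, and the value of `alpha` are illustrative assumptions, not the paper's exact formulation or settings.

```python
import math


def contrastive_scores(expert_logprobs, amateur_logprobs, alpha=0.1):
    """Score candidate next tokens by expert-vs-amateur disagreement.

    Both arguments map token -> log-probability for the same candidates.
    alpha is an assumed plausibility cutoff: a token is kept only if the
    expert gives it at least alpha times the probability of its top token.
    """
    top_expert = max(expert_logprobs.values())
    cutoff = math.log(alpha) + top_expert
    scores = {}
    for token, lp_expert in expert_logprobs.items():
        if lp_expert < cutoff:
            continue  # implausible under the expert, so never selected
        # Large when the expert likes the token much more than the amateur
        scores[token] = lp_expert - amateur_logprobs[token]
    return scores


def pick_token(expert_logprobs, amateur_logprobs, alpha=0.1):
    """Greedy choice: the plausible token with the highest contrastive score."""
    scores = contrastive_scores(expert_logprobs, amateur_logprobs, alpha)
    return max(scores, key=scores.get)


# Toy probabilities over a small slice of the vocabulary (made up for illustration).
# "40" jumps straight to a wrong answer; "So" begins step-by-step reasoning.
expert = {"So": math.log(0.40), "40": math.log(0.45), "zebra": math.log(0.001)}
amateur = {"So": math.log(0.15), "40": math.log(0.80), "zebra": math.log(0.0001)}

print(max(expert, key=expert.get))   # expert alone (greedy): 40
print(pick_token(expert, amateur))   # contrastive choice: So
```

In this toy case the expert on its own would still pick the shortcut "40", but the amateur likes that shortcut even more, so the contrast favors "So". The cutoff matters too: "zebra" has the largest expert-to-amateur ratio, yet it is filtered out because the expert considers it implausible in the first place.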

For example, consider a math word problem. The amateur model might assign high likelihood to simple but incorrect solutions, taking shortcuts instead of showing step-by-step work. But the expert model will assign those solutions a lower likelihood, penalizing the shortcuts. By contrasting the expert and amateur, Contrastive Decoding reduces the chances of selecting those shortcut solutions.

You can think of the amateur model as a student who makes common mistakes, while the expert model acts as the skilled tutor who spots those errors. Contrastive Decoding uses their disagreement to identify stronger reasoning chains.

Key Technical Findings

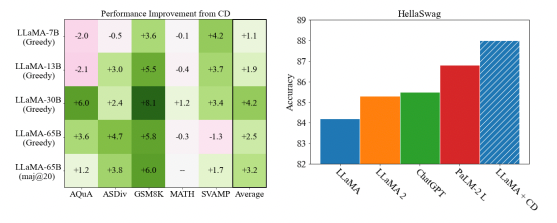

The researchers validated Contrastive Decoding on a diverse set of reasoning tasks spanning mathematical word problems, common sense reasoning, and logical inference challenges. Some of the key findings:

- On mathematical word problem benchmarks, Contrastive Decoding improved accuracy by anywhere from 3 to 8 percentage points. For instance, it enabled a 7 billion parameter LLM to outperform a 12 billion parameter LLM without contrastive decoding on algebra word problems.

- On the difficult HellaSwag benchmark, Contrastive Decoding led LLaMA-65B to outperform LLaMA 2, GPT-3.5 and PaLM 2-Large.

- Detailed analysis suggested that Contrastive Decoding reduces "shortcuts" like verbatim copying from the input text. This forces models to show more systematic logical work in their step-by-step reasoning.

- Taking a "majority vote" across multiple solutions sampled for the same problem becomes more accurate under Contrastive Decoding. This suggests the generated solutions are more consistent.

- The choice of amateur model proved important. A low-parameter or partially-trained model performed best as the amateur. Using the expert itself with a negative prompt as the amateur did not work as well as using a separate, lower-skilled model.
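The copying finding in the list above can be made measurable. One simple, hypothetical way to quantify verbatim copying is the fraction of word n-grams in a model's output that also appear in the prompt. The sketch below is only an illustration of that idea; the function names, the whitespace tokenization, and the choice of n=3 are assumptions, and this is not necessarily the metric used in the paper.

```python
def ngrams(tokens, n):
    """Return all consecutive n-token windows as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def copy_rate(prompt, output, n=3):
    """Fraction of the output's word n-grams that occur verbatim in the prompt."""
    prompt_ngrams = set(ngrams(prompt.lower().split(), n))
    output_ngrams = ngrams(output.lower().split(), n)
    if not output_ngrams:
        return 0.0  # output shorter than n words
    copied = sum(1 for gram in output_ngrams if gram in prompt_ngrams)
    return copied / len(output_ngrams)


prompt = "Tom has 3 boxes with 6 apples each"
print(copy_rate(prompt, "Tom has 3 boxes with 6 apples each"))  # 1.0
print(copy_rate(prompt, "3 times 6 equals 18 apples"))          # 0.0
```

A lower copy rate on its own does not prove better reasoning, but comparing it across decoding methods gives a rough signal of how much a model leans on restating its input.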

The researchers also report that Contrastive Decoding is computationally cheap. Running the small amateur model adds little overhead, yet the accuracy gains are sizable compared with costlier alternatives like generating multiple solutions and taking a majority vote.
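For reference, the majority-vote approach mentioned above can be sketched in a few lines of Python. This is a minimal illustration, assuming each sampled solution ends with its numeric answer; the helper names and the regex-based answer extraction are hypothetical choices, not the paper's evaluation code.

```python
import re
from collections import Counter


def final_answer(solution_text):
    """Return the last number mentioned in a solution, or None if there is none."""
    numbers = re.findall(r"-?\d+(?:\.\d+)?", solution_text)
    return numbers[-1] if numbers else None


def majority_vote(solutions):
    """Pick the most common final answer across several sampled solutions."""
    answers = [a for a in map(final_answer, solutions) if a is not None]
    if not answers:
        raise ValueError("no solution contained a numeric answer")
    # Ties are broken in favor of the answer that appeared first
    return Counter(answers).most_common(1)[0][0]


samples = [
    "3 boxes times 6 apples is 18. The answer is 18.",
    "6 + 6 + 6 = 18, so the answer is 18.",
    "3 + 6 = 9. The answer is 9.",
]
print(majority_vote(samples))  # 18
```

The cost is visible in the shape of the code: every vote requires a full sampled solution from the large model, which is why a method that improves a single decoding pass is attractive.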

Why This Matters for the Future of AI

Contrastive Decoding offers an enticing path forward for strengthening neural network reasoning, without requiring any extra training. The fact that it improved performance across diverse reasoning domains highlights its potential as a general technique. Early results are promising, but plenty of open research remains to build upon this foundation.

This approach could enable models to learn from fewer examples, make better use of available training data, and more clearly exhibit expert-level mastery within their capabilities. Such efficiency and skill could hasten progress on challenges like achieving robust, human-level reasoning. And Contrastive Decoding provides a framework for accentuating model strengths using only unlabeled data - a tantalizing possibility.

Of course, many caveats apply. Contrastive Decoding does not instantly solve all reasoning challenges, and it may not readily apply to domains with sparse feedback like planning for complex real-world scenarios. But its generality makes it well suited for continued investigation. This technique demonstrates that with the right generation guidance, today's LLMs may intrinsically harbor more systematic reasoning potential than their typical output reveals. Contrastive Decoding and related ideas could light the path ahead for the next generation of radically capable AI systems.
