Can Large Language Models Self-Correct Their Own Reasoning? Probably Not.

A new paper takes a critical look at the promise and limits of self-correction

The rapid advancement of large language models (LLMs) like GPT-3, PaLM, and ChatGPT has sparked tremendous excitement about their ability to understand and reason about the world. Some believe these models may even be able to refine and improve their own reasoning through a process called "self-correction." But can LLMs actually correct their own mistakes and flawed reasoning?

A new paper by researchers at Google DeepMind and the University of Illinois meticulously examines this tantalizing possibility, assessing whether self-correction can enhance reasoning capabilities in the current generation of LLMs. Let's see what they found out.

Subscribe or follow me on Twitter for more content like this!

Diving Deep into Self-Correction

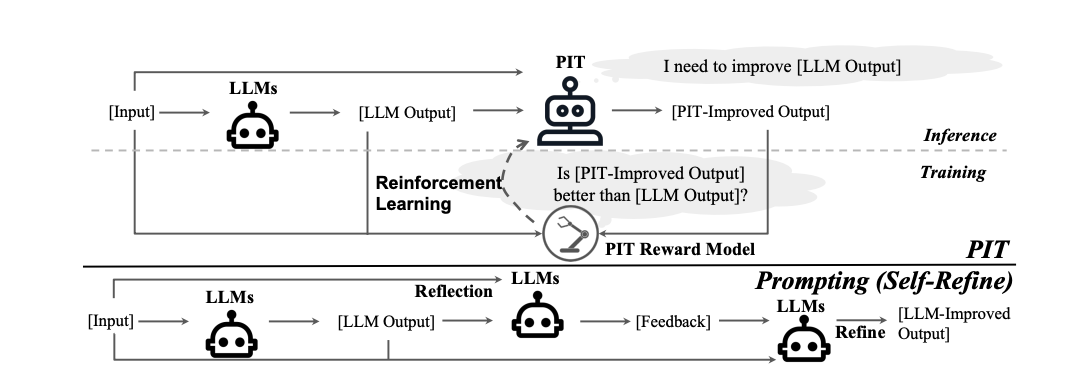

At its core, self-correction involves an LLM reviewing its own responses, identifying problems or errors, and revising its answers accordingly. The paper focuses specifically on "intrinsic self-correction," where models attempt to fix mistakes without any external feedback or assistance. This setting is crucial because high-quality, task-specific feedback is frequently unavailable in real-world applications.

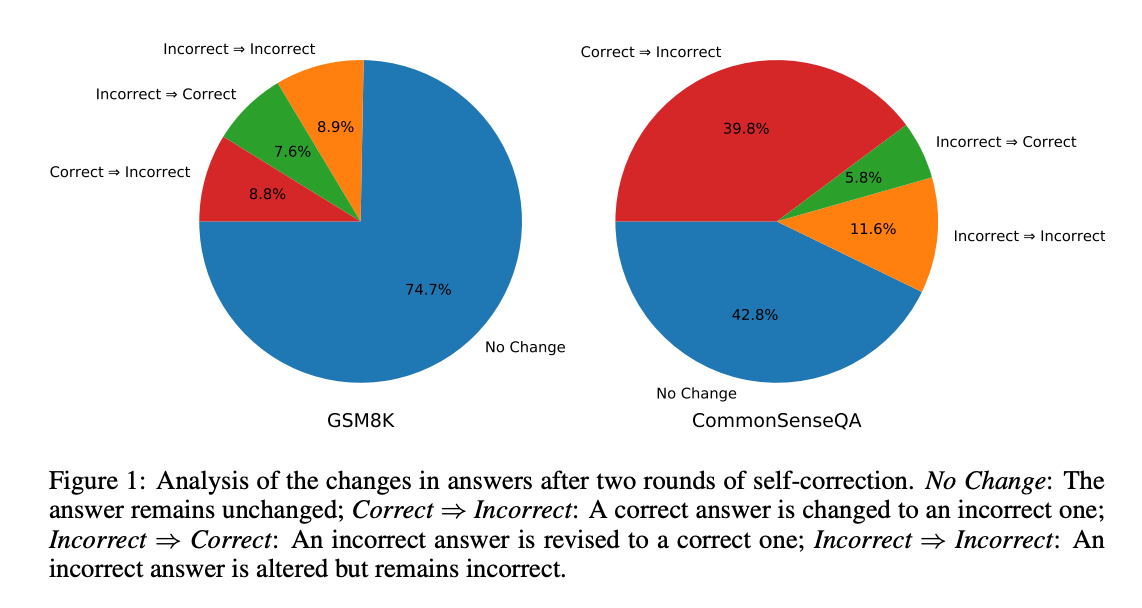

The researchers conduct extensive experiments across diverse reasoning tasks including mathematical word problems, common sense reasoning, and open-domain question answering datasets. Disappointingly, the results reveal current LLMs struggle to self-correct; in fact, their performance often deteriorates after attempting correction.

On a set of grade school math word problems (GSM8K), the massive GPT-3 model sees its accuracy drop slightly from 76% down to 75% post self-correction. Rather than improving, it got a little worse!

The analysis illuminates why - LLMs rarely recognize flaws in their initial reasoning. At best, they leave their answers unchanged. But more concerning, they may even alter initially correct responses to become incorrect after self-correction. The fundamental issue is LLMs have difficulty reliably assessing the correctness of their own reasoning and answers on these tasks. Anyone who's spent much time with ChatGPT is familiar with it apologizing for "mistakes" even when correct.

The paper also investigates more sophisticated self-correction techniques involving critique and debate between multiple LLM instances. What were the findings for the more sophisticated techniques and what were they?

The paper examined multi-agent debate, where multiple LLM instances critique each other's responses, as a potential self-correction technique. This was tested on the GSM8K mathematical reasoning dataset. The multi-agent debate approach used 3 agents and 2 rounds of debate. With this setup, it achieved 83.2% accuracy on GSM8K.

For comparison, the paper also tested a simpler self-consistency method, where multiple independent responses are generated and majority voting used to select the final answer. With 3 responses, self-consistency achieves 82.5% accuracy on GSM8K. With 6 responses, it reaches 85.3% accuracy.

So with an equivalent number of responses, the multi-agent debate accuracy is only slightly better than self-consistency (83.2% vs 82.5%). And with more responses, self-consistency significantly outperforms multi-agent debate. The paper concludes that the observed improvements are not attributable to "self-correction", but rather the self-consistency obtained across multiple generations.

Across the board, the empirical results demonstrate current LLMs lack competence for robust intrinsic self-correction of reasoning. On their own, they cannot meaningfully improve flawed reasoning or avoid unforced errors.

Nuanced Insights on Self-Correction's Promise and Pitfalls

While the limitations are clear, the paper offers thoughtful discussion on when self-correction can still be beneficial. It emphasizes that self-correction should not be dismissed entirely but rather approached with realistic expectations.

When the goal is aligning LLM responses with particular preferences - like making them safer or more polite - self-correction has shown promise. The key differences from reasoning tasks are that 1) LLMs can more easily judge if a response meets the criteria; and 2) the feedback provides concrete guidance for improvement.

(Note: I also published a summary of an article on training using preference data vs RLHF, which you might find interesting. Link is here)

The authors also examine the differences between pre-hoc and post-hoc prompting. Pre-hoc involves encoding requirements like including certain words directly in the initial prompt. Post-hoc uses self-correction feedback to incorporate such directives. While self-correction is more flexible, pre-hoc prompting is substantially more efficient as long as criteria can be specified upfront.

Finally, the researchers suggest promising directions like leveraging external feedback to enhance reasoning. High-quality feedback from humans, training data, and tools may provide the supervision LLMs need to critique and amend their flawed responses.

Key Takeaways on the True Capabilities of Self-Correction

Stepping back, I think the main takeaways from this paper are:

- Self-correction should not be oversold as a cure-all for deficiencies in LLM reasoning - significant limitations exist presently.

- It shows the most promise on tasks where LLMs can judge response quality on concrete criteria.

- For reasoning tasks, the inability to reliably assess correctness hinders intrinsic self-correction.

- Techniques incorporating external guidance are probably needed to improve reasoning abilities.

- Focus more on enhancing initial prompts than relying on post-hoc self-correction.

- Self-consistency (generating multiple independent responses) is a strong baseline.

- Feedback from humans, training data, and tools is still crucial for genuine reasoning improvements.

Conclusion

In summary, I think this paper is a little bit of a downer, but that's how research goes sometimes. While promising for many applications, intrinsic self-correction appears inadequate for enhancing reasoning capabilities with current LLMs. But maybe as models continue to evolve, self-correction may one day emerge as a vital tool for creating more accurate, reliable, and trustworthy AI systems. We'll have to keep a close eye on this facet of AI development until then!

Subscribe or follow me on Twitter for more content like this!

Comments ()