AI can (kinda) generate novel ideas

LLMs have some brainstorming limitations.

Can AI generate research ideas that rival those of human researchers?

A recent study by researchers at Stanford took a serious look at this, asking whether AI can move beyond just being a tool and actually contribute novel ideas to the creative process.

By comparing ideas generated by an LLM to those written by over 100 NLP researchers, they wanted to see if AI could keep up with human experts in coming up with novel, high-quality research ideas.

In this post, we’ll break down the study’s design, its findings, and what they mean for the future of research. By the end, you'll have a clearer picture of whether AI can match or even surpass human creativity when it comes to generating new ideas.

Why does this matter?

LLMs have already shown they can write, code, and even generate mathematical proofs. But generating research ideas requires creativity, knowledge of existing work, and the ability to identify open questions in a field. If AI can do this, it changes how we think about the role of machines in scientific discovery.

The researchers wanted to know if AI could take on the first and often most creative step in research: generating ideas that could lead to meaningful projects. Could AI think outside the box and come up with ideas that match or exceed the creativity of human researchers?

How the study was conducted

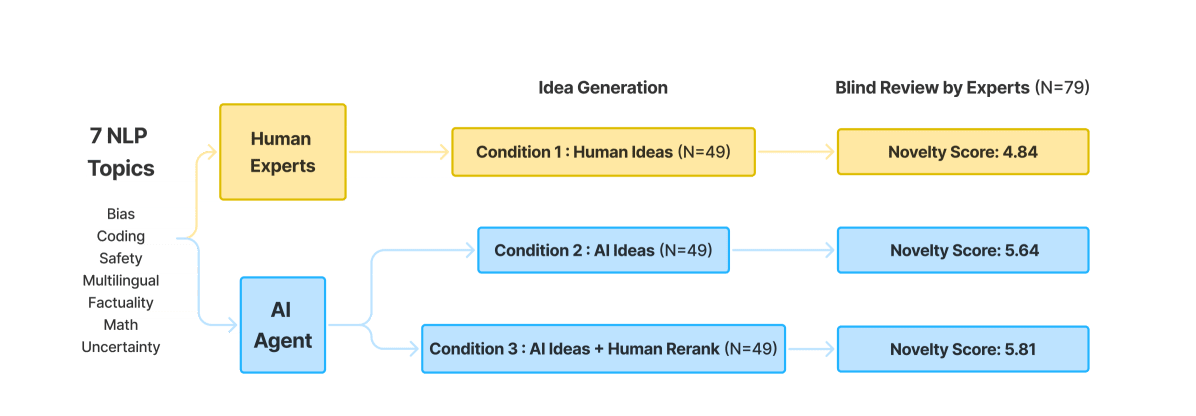

The study’s design was simple but thorough. Over 100 NLP experts were recruited to take part in two ways: some generated research ideas, while others acted as blind reviewers, assessing both AI- and human-generated ideas. This allowed a direct comparison of the quality of ideas from both groups.

Here’s how it worked:

- Human-Generated Ideas: NLP researchers wrote original research ideas on specific topics.

- AI-Generated Ideas: An LLM, enhanced with retrieval capabilities, generated ideas on the same topics.

- AI Ideas Re-Ranked by Humans: In a third condition, a human expert manually re-ranked the AI's ideas, so that the strongest ones were the ones compared against the human ideas.

To avoid bias, all ideas were anonymized and their formatting standardized, so reviewers didn’t know whether they were evaluating an idea from a human or from an AI. Reviewers rated the ideas on four criteria:

- Novelty: How original was the idea?

- Excitement: Did the idea seem promising or intriguing? (An admittedly subjective criterion, of course.)

- Feasibility: Could the idea realistically be pursued?

- Effectiveness: How likely was the idea to work well if pursued?
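Here is a minimal Python sketch of the blind-review bookkeeping, to make the setup concrete. The `Idea` record, the condition labels, and the helper names are hypothetical and do not come from the study's code. Reviewers see only shuffled (id, text) pairs. The hidden condition key is used afterward, and only to average each criterion per condition.

```python
import random
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean

# The four criteria from the list above; the score scale is an assumption.
CRITERIA = ("novelty", "excitement", "feasibility", "effectiveness")

@dataclass
class Idea:
    idea_id: str
    condition: str  # hypothetical labels: "human", "ai", "ai_rerank"; hidden from reviewers
    text: str

def blind(ideas, seed=0):
    """Return shuffled (idea_id, text) pairs with the condition label removed."""
    rng = random.Random(seed)
    shown = [(idea.idea_id, idea.text) for idea in ideas]
    rng.shuffle(shown)
    return shown

def mean_scores_by_condition(ideas, reviews):
    """reviews: list of (idea_id, {criterion: score}) collected blind.
    Un-blinds via the idea_id -> condition key and averages each criterion."""
    condition_of = {idea.idea_id: idea.condition for idea in ideas}
    buckets = defaultdict(lambda: defaultdict(list))
    for idea_id, scores in reviews:
        cond = condition_of[idea_id]
        for criterion in CRITERIA:
            buckets[cond][criterion].append(scores[criterion])
    return {
        cond: {crit: mean(vals) for crit, vals in per_crit.items()}
        for cond, per_crit in buckets.items()
    }
```

Keeping the condition label in a separate lookup means the reviewer-facing data cannot leak it.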

Study results

The main takeaway was this: AI ideas were novel but often impractical or duplicative.

Short story long: reviewers consistently rated AI-generated ideas as more novel than those from human researchers, and the difference was statistically significant.
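"Statistically significant" means the novelty gap was unlikely to be due to chance. The post doesn't name the test used. As an assumption, a natural choice for comparing two groups of reviewer scores that may have unequal variances is Welch's t-test. Here is a stdlib-only sketch of the statistic; `welch_t` is a hypothetical helper name.

```python
from math import sqrt
from statistics import mean, variance

def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom for two
    independent samples (e.g. novelty scores for AI vs. human ideas)."""
    va = variance(a) / len(a)  # squared standard error of mean(a)
    vb = variance(b) / len(b)  # squared standard error of mean(b)
    t = (mean(a) - mean(b)) / sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df
```

In practice, `scipy.stats.ttest_ind(a, b, equal_var=False)` computes the same statistic and also returns a p-value. When several criteria are tested at once, a correction such as Bonferroni (multiplying each p-value by the number of tests) guards against false positives.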

But there were trade-offs…

- Feasibility: AI ideas were often less feasible, lacking detailed steps or relying on unrealistic assumptions.

- Excitement: AI-generated ideas didn’t consistently generate more excitement unless humans re-ranked them. When humans re-ranked the AI ideas, excitement scores improved.

- Overall Effectiveness: When humans re-ranked the AI ideas, they outperformed both AI-generated and human-generated ideas in terms of effectiveness.

This suggests that a collaboration between AI and humans could lead to stronger ideas overall than either working alone.

How ideas were evaluated

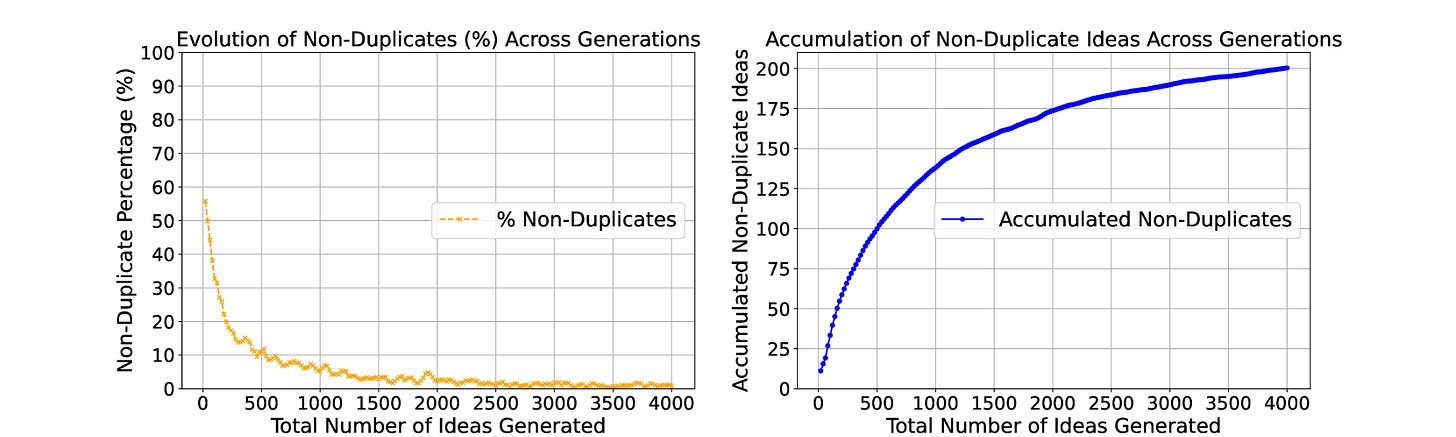

The study took careful measures to ensure fairness in the evaluation process. All submissions were anonymized, so reviewers couldn’t tell if an idea came from an AI or a human. To make sure the AI-generated ideas were based on solid knowledge, the LLM used retrieval-augmented generation (RAG), allowing it to pull in relevant research papers. This gave the AI a grounding in the current state of the field. It also generated a large number of ideas (over 4,000 per topic), which were then ranked to highlight the best ones.
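The post says the ideas "were then ranked" but doesn't give the procedure. One way to rank thousands of candidates using only pairwise judgments is a Swiss-system tournament, and this sketch assumes that approach. Each round pairs ideas with similar running scores, and a judge picks the better idea in each pair. `judge` is a hypothetical stand-in for an LLM comparison prompt. The five-round default is also an assumption.

```python
import random
from typing import Callable, Dict, List

def swiss_rank(ideas: List[str], judge: Callable[[str, str], bool],
               rounds: int = 5, seed: int = 0) -> List[str]:
    """Rank ideas with a Swiss-system tournament.

    Assumes ideas are distinct strings (e.g. already deduplicated).
    judge(a, b) returns True if a is judged better than b; in a real pipeline
    this would be an LLM prompt (hypothetical here).
    """
    rng = random.Random(seed)
    scores: Dict[str, int] = {idea: 0 for idea in ideas}
    order = list(ideas)
    rng.shuffle(order)  # random pairing in the first round
    for _ in range(rounds):
        # Sort by running score (stable, so ties keep their shuffled order),
        # then pair neighbors so ideas with similar records meet.
        order.sort(key=lambda idea: scores[idea], reverse=True)
        for i in range(0, len(order) - 1, 2):
            a, b = order[i], order[i + 1]
            winner = a if judge(a, b) else b
            scores[winner] += 1
        # With an odd number of ideas, the last one sits out the round.
    return sorted(ideas, key=lambda idea: scores[idea], reverse=True)
```

With a toy judge that prefers longer strings, `swiss_rank(["x" * k for k in range(1, 9)], lambda a, b: len(a) > len(b))` puts the longest string first. Pairing ideas with similar scores makes each comparison informative. Each round therefore needs only about n/2 judge calls, instead of the n(n-1)/2 calls needed to compare every pair.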

However, the AI had some limitations. One issue was idea duplication: many of the AI’s ideas were slight variations of each other (boo). In fact, only about 5% of the generated ideas were truly unique (!), which points to a real challenge in producing a diverse range of ideas at scale.
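The post reports that only about 5% of ideas survived as unique, but not how near-duplicates were detected. A common approach, assumed here, is to embed each idea as a vector and then greedily drop any idea too similar to one already kept. This sketch takes precomputed embeddings, because the embedding model is an assumption left out of scope. The 0.8 cosine threshold is also illustrative.

```python
from math import sqrt
from typing import List, Sequence

def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity of two non-zero vectors."""
    dot = sum(x * y for x, y in zip(u, v))
    return dot / (sqrt(sum(x * x for x in u)) * sqrt(sum(y * y for y in v)))

def dedupe(embeddings: List[Sequence[float]], threshold: float = 0.8) -> List[int]:
    """Greedy near-duplicate filter: walk ideas in order and keep one only if
    its similarity to every already-kept idea is below the threshold.
    Returns the indices of the kept ideas."""
    kept: List[int] = []
    for i, emb in enumerate(embeddings):
        if all(cosine(emb, embeddings[j]) < threshold for j in kept):
            kept.append(i)
    return kept
```

The unique fraction is then `len(kept) / len(embeddings)`. With the figures reported in the post (about 200 unique out of roughly 4,000), that works out to 200 / 4000 = 0.05, or 5%.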

My thoughts

We can think of this study as basically a giant brainstorming session where both humans and an AI are coming up with ideas. The humans propose practical, well-considered ideas based on their experience, while the AI throws out lots of new, sometimes wild ideas that no one else thought of. The problem? Many of the AI’s ideas are impractical, duplicative, and lack the details needed to make them work.

That’s essentially what this study found. AI can offer fresh, creative ideas, but it often misses the mark on practicality. I think those of us who have used AI as a coding assistant recognize this problem.

The researchers did a rigorous analysis of the results. Here are the key findings:

- AI-generated ideas were significantly more novel than those from humans, even when controlling for biases.

- AI ideas often scored lower on feasibility, with the reviewers noting the lack of practical steps.

- Out of 4,000 AI-generated ideas per topic, only about 200 were considered truly unique, highlighting the AI's difficulty in producing a wide range of ideas. This sucks, but I guess if you generate enough ideas, quantity has a quality of its own.

AI as creative partner

So, can AI replace humans in generating research ideas? Not yet. This study shows that AI can assist in the early stages of research by offering novel perspectives, but it still needs humans to refine and develop those ideas. Treating AI as a tool rather than a complete solution is a view shared by prominent voices on Twitter like Ethan Mollick (and by me), and this study adds some evidence that it’s the right way to think about LLMs.

The most promising outcome of the study was the improvement seen when AI and humans worked together. AI can generate a high volume of creative ideas, and humans can filter and develop the most promising ones. This suggests that the future of AI in research will likely be collaborative, with AI helping to spark new ideas that humans turn into real projects.

What’s next?

This study shows that AI can generate creative, novel ideas, but it also highlights the limits of relying on AI alone for research ideation. AI is great at thinking outside the box, but it still struggles with the practical details needed to make its ideas actionable.

Going forward, the challenge will be integrating AI into the research process in a way that plays to its strengths while addressing its weaknesses. AI probably will not replace humans’ capacity to brainstorm anytime soon, but it can certainly be a valuable tool to enhance and add to human creativity.

As AI keeps improving, the big question, and one we’ve come back to in almost every paper breakdown, is this: how can we best combine human and machine intelligence to push the boundaries of discovery? Let me know what you think in the Discord!
